feat: Expose unified VisionCameraProxy object, make FrameProcessorPlugins object-oriented (#1660)

* feat: Replace `FrameProcessorRuntimeManager` with `VisionCameraProxy` (iOS)
* Make `FrameProcessorPlugin` a constructable HostObject
* fix: Fix `name` override
* Simplify `useFrameProcessor`
* fix: Fix lint errors
* Remove FrameProcessorPlugin::name
* JSIUtils -> JSINSObjectConversion


@@ -120,6 +120,36 @@ const frameProcessor = useFrameProcessor((frame) => {

|

||||

}, [onQRCodeDetected])

|

||||

```

### Running asynchronously

Since Frame Processors run synchronously with the Camera Pipeline, anything taking longer than one Frame interval might block the Camera from streaming new Frames. To avoid this, you can use `runAsync` to run code asynchronously on a different Thread:

```ts
const frameProcessor = useFrameProcessor((frame) => {
  'worklet'
  console.log("I'm running synchronously at 60 FPS!")
  runAsync(() => {
    'worklet'
    console.log("I'm running asynchronously, possibly at a lower FPS rate!")
  })
}, [])
```
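
To get a feel for what "one Frame interval" means in practice, here is a minimal sketch in plain TypeScript. It is independent of VisionCamera, and `frameIntervalMs` and `fitsInFrameBudget` are hypothetical helper names introduced only for illustration. It computes the time budget per Frame and checks whether a measured duration fits into it:

```ts
// Hypothetical helpers for illustration; not part of VisionCamera.

/** Time available per Frame, in milliseconds, at the given FPS. */
function frameIntervalMs(fps: number): number {
  if (fps <= 0) throw new Error('fps must be positive')
  return 1000 / fps
}

/** Whether work that takes `durationMs` finishes within one Frame interval. */
function fitsInFrameBudget(durationMs: number, fps: number): boolean {
  return durationMs <= frameIntervalMs(fps)
}

console.log(frameIntervalMs(60).toFixed(2)) // "16.67"
console.log(fitsInFrameBudget(10, 60)) // true
console.log(fitsInFrameBudget(25, 60)) // false: a candidate for runAsync
```

Work that does not fit the budget is the kind of code that would likely benefit from being moved into `runAsync`.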

### Running at a throttled FPS rate

Some Frame Processor Plugins don't need to run on every Frame. For example, a Frame Processor that detects the brightness in a Frame only needs to run twice per second:

```ts
const frameProcessor = useFrameProcessor((frame) => {
  'worklet'
  console.log("I'm running synchronously at 60 FPS!")
  runAtTargetFps(2, () => {
    'worklet'
    console.log("I'm running synchronously at 2 FPS!")
  })
}, [])
```
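
The idea behind throttling can be sketched in plain TypeScript. This is only an illustration under the assumption that throttling is time-based; `createFpsThrottle` is a hypothetical name, and VisionCamera's actual `runAtTargetFps` may be implemented differently:

```ts
// Illustrative sketch only; VisionCamera's actual runAtTargetFps may work differently.

/** Creates a throttle that lets a callback run at most `targetFps` times per second. */
function createFpsThrottle(targetFps: number) {
  const minIntervalMs = 1000 / targetFps
  let lastRunMs = -Infinity

  // `nowMs` is passed in so the sketch stays deterministic and testable.
  return function runIfDue(nowMs: number, fn: () => void): boolean {
    if (nowMs - lastRunMs < minIntervalMs) return false // too early, skip this Frame
    lastRunMs = nowMs
    fn()
    return true
  }
}

// Simulate one second of a 60 FPS stream and count how often the callback runs.
const throttle = createFpsThrottle(2)
let runs = 0
for (let frameIndex = 0; frameIndex < 60; frameIndex++) {
  throttle(frameIndex * (1000 / 60), () => { runs++ })
}
console.log(runs) // 2
```

Frames that arrive before the minimum interval has elapsed are simply skipped, so the surrounding Frame Processor keeps running at full speed.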

|

||||

|

||||

### Using Frame Processor Plugins

|

||||

|

||||

Frame Processor Plugins are distributed through npm. To install the [**vision-camera-image-labeler**](https://github.com/mrousavy/vision-camera-image-labeler) plugin, run:

|

||||

@@ -204,7 +234,7 @@ The Frame Processor API spawns a secondary JavaScript Runtime which consumes a s

|

||||

|

||||

Inside your `gradle.properties` file, add the `disableFrameProcessors` flag:

|

||||

|

||||

```

|

||||

```groovy

|

||||

disableFrameProcessors=true

|

||||

```

|

||||

|

||||

@@ -212,18 +242,12 @@ Then, clean and rebuild your project.

|

||||

|

||||

#### iOS

|

||||

|

||||

Inside your `project.pbxproj`, find the `GCC_PREPROCESSOR_DEFINITIONS` group and add the flag:

|

||||

Inside your `Podfile`, add the `VCDisableFrameProcessors` flag:

|

||||

|

||||

```txt {3}

|

||||

GCC_PREPROCESSOR_DEFINITIONS = (

|

||||

"DEBUG=1",

|

||||

"VISION_CAMERA_DISABLE_FRAME_PROCESSORS=1",

|

||||

"$(inherited)",

|

||||

);

|

||||

```ruby

|

||||

$VCDisableFrameProcessors = true

|

||||

```

|

||||

|

||||

Make sure to add this to your Debug-, as well as your Release-configuration.

|

||||

|

||||

</TabItem>

|

||||

|

||||

<TabItem value="expo">

|

||||

|

||||

@@ -12,14 +12,14 @@ import TabItem from '@theme/TabItem';

|

||||

|

||||

Frame Processor Plugins are **native functions** which can be directly called from a JS Frame Processor. (See ["Frame Processors"](frame-processors))

|

||||

|

||||

They **receive a frame from the Camera** as an input and can return any kind of output. For example, a `scanQRCodes` function returns an array of detected QR code strings in the frame:

|

||||

They **receive a frame from the Camera** as an input and can return any kind of output. For example, a `detectFaces` function returns an array of detected faces in the frame:

|

||||

|

||||

```tsx {4-5}

|

||||

function App() {

|

||||

const frameProcessor = useFrameProcessor((frame) => {

|

||||

'worklet'

|

||||

const qrCodes = scanQRCodes(frame)

|

||||

console.log(`QR Codes in Frame: ${qrCodes}`)

|

||||

const faces = detectFaces(frame)

|

||||

console.log(`Faces in Frame: ${faces}`)

|

||||

}, [])

|

||||

|

||||

return (

|

||||

@@ -28,7 +28,7 @@ function App() {

|

||||

}

|

||||

```

|

||||

|

||||

To achieve **maximum performance**, the `scanQRCodes` function is written in a native language (e.g. Objective-C), but it will be directly called from the VisionCamera Frame Processor JavaScript-Runtime.

|

||||

To achieve **maximum performance**, the `detectFaces` function is written in a native language (e.g. Objective-C), but it will be directly called from the VisionCamera Frame Processor JavaScript-Runtime.

|

||||

|

||||

### Types

|

||||

|

||||

@@ -43,7 +43,7 @@ Similar to a TurboModule, the Frame Processor Plugin Registry API automatically

|

||||

| `{}` | `NSDictionary*` | `ReadableNativeMap` |

|

||||

| `undefined` / `null` | `nil` | `null` |

|

||||

| `(any, any) => void` | [`RCTResponseSenderBlock`][4] | `(Object, Object) -> void` |

|

||||

| [`Frame`][1] | [`Frame*`][2] | [`ImageProxy`][3] |

|

||||

| [`Frame`][1] | [`Frame*`][2] | [`Frame`][3] |

|

||||

|

||||

### Return values

|

||||

|

||||

@@ -51,7 +51,7 @@ Return values will automatically be converted to JS values, assuming they are re

|

||||

|

||||

```java

|

||||

@Override

|

||||

public Object callback(ImageProxy image, Object[] params) {

|

||||

public Object callback(Frame frame, Object[] params) {

|

||||

return "cat";

|

||||

}

|

||||

```

|

||||

@@ -66,13 +66,13 @@ export function detectObject(frame: Frame): string {

|

||||

}

|

||||

```

|

||||

|

||||

You can also manipulate the buffer and return it (or a copy of it) by returning a [`Frame`][2]/[`ImageProxy`][3] instance:

|

||||

You can also manipulate the buffer and return it (or a copy of it) by returning a [`Frame`][2]/[`Frame`][3] instance:

|

||||

|

||||

```java

|

||||

@Override

|

||||

public Object callback(ImageProxy image, Object[] params) {

|

||||

ImageProxy resizedImage = new ImageProxy(/* ... */);

|

||||

return resizedImage;

|

||||

public Object callback(Frame frame, Object[] params) {

|

||||

Frame resizedFrame = new Frame(/* ... */);

|

||||

return resizedFrame;

|

||||

}

|

||||

```

|

||||

|

||||

@@ -97,16 +97,7 @@ Frame Processors can also accept parameters, following the same type convention

|

||||

```ts

|

||||

const frameProcessor = useFrameProcessor((frame) => {

|

||||

'worklet'

|

||||

const codes = scanCodes(frame, ['qr', 'barcode'])

|

||||

}, [])

|

||||

```

|

||||

|

||||

Or with multiple ("variadic") parameters:

|

||||

|

||||

```ts

|

||||

const frameProcessor = useFrameProcessor((frame) => {

|

||||

'worklet'

|

||||

const codes = scanCodes(frame, true, 'hello-world', 42)

|

||||

const codes = scanCodes(frame, { codes: ['qr', 'barcode'] })

|

||||

}, [])

|

||||

```

|

||||

|

||||

@@ -116,7 +107,7 @@ To let the user know that something went wrong you can use Exceptions:

|

||||

|

||||

```java

|

||||

@Override

|

||||

public Object callback(ImageProxy image, Object[] params) {

|

||||

public Object callback(Frame frame, Object[] params) {

|

||||

if (params[0] instanceof String) {

|

||||

// ...

|

||||

} else {

|

||||

@@ -152,13 +143,13 @@ For example, a realtime video chat application might use WebRTC to send the fram

|

||||

|

||||

```java

|

||||

@Override

|

||||

public Object callback(ImageProxy image, Object[] params) {

|

||||

public Object callback(Frame frame, Object[] params) {

|

||||

String serverURL = (String)params[0];

|

||||

ImageProxy imageCopy = new ImageProxy(/* ... */);

|

||||

Frame frameCopy = new Frame(/* ... */);

|

||||

|

||||

uploaderQueue.runAsync(() -> {

|

||||

WebRTC.uploadImage(imageCopy, serverURL);

|

||||

imageCopy.close();

|

||||

WebRTC.uploadImage(frameCopy, serverURL);

|

||||

frameCopy.close();

|

||||

});

|

||||

|

||||

return null;

|

||||

@@ -195,14 +186,7 @@ This way you can handle queueing up the frames yourself and asynchronously call

|

||||

|

||||

### Benchmarking Frame Processor Plugins

|

||||

|

||||

Your Frame Processor Plugins have to be fast. VisionCamera automatically detects slow Frame Processors and outputs relevant information in the native console (Xcode: **Debug Area**, Android Studio: **Logcat**):

|

||||

|

||||

<div align="center">

|

||||

<img src={useBaseUrl("img/slow-log.png")} width="80%" />

|

||||

</div>

|

||||

<div align="center">

|

||||

<img src={useBaseUrl("img/slow-log-2.png")} width="80%" />

|

||||

</div>

|

||||

Your Frame Processor Plugins have to be fast. Use the FPS Graph (`enableFpsGraph`) to see how fast your Camera is running. If it is not running at the target FPS, your Frame Processor is too slow.

|

||||

|

||||

<br />

|

||||

|

||||

|

||||

110

docs/docs/guides/FRAME_PROCESSORS_SKIA.mdx

Normal file


@@ -0,0 +1,110 @@

---
id: frame-processors-skia
title: Skia Frame Processors
sidebar_label: Skia Frame Processors
---

import Tabs from '@theme/Tabs';
import TabItem from '@theme/TabItem';
import useBaseUrl from '@docusaurus/useBaseUrl';

<div>
  <svg xmlns="http://www.w3.org/2000/svg" width="283" height="535" style={{ float: 'right' }}>
    <image href={useBaseUrl("img/frame-processors.gif")} x="18" y="33" width="247" height="469" />
    <image href={useBaseUrl("img/frame.png")} width="283" height="535" />
  </svg>
</div>

### What are Skia Frame Processors?

Skia Frame Processors are [Frame Processors](frame-processors) that allow you to draw onto the Frame using [react-native-skia](https://github.com/Shopify/react-native-skia).

For example, you might want to draw a rectangle around a user's face **without writing any native code**, while still **achieving native performance**:

```jsx
function App() {
  const frameProcessor = useSkiaFrameProcessor((frame) => {
    'worklet'
    const faces = detectFaces(frame)
    faces.forEach((face) => {
      frame.drawRect(face.rectangle, redPaint)
    })
  }, [])

  return (
    <Camera
      {...cameraProps}
      frameProcessor={frameProcessor}
    />
  )
}
```

With Skia, you can also implement realtime filters, blurring, shaders, and much more. For example, this is how you invert the colors in a Frame:

```jsx
const INVERTED_COLORS_SHADER = `
uniform shader image;

half4 main(vec2 pos) {
  vec4 color = image.eval(pos);
  return vec4(1.0 - color.rgb, 1.0);
}
`;

function App() {
  const imageFilter = Skia.ImageFilter.MakeRuntimeShader(/* INVERTED_COLORS_SHADER */)
  const paint = Skia.Paint()
  paint.setImageFilter(imageFilter)

  const frameProcessor = useSkiaFrameProcessor((frame) => {
    'worklet'
    frame.render(paint)
  }, [])

  return (
    <Camera
      {...cameraProps}
      frameProcessor={frameProcessor}
    />
  )
}
```

### Rendered outputs

The results of the Skia Frame Processor are rendered to an offscreen context and will be displayed in the Camera Preview, recorded to a video file (`startRecording()`) and captured in a photo (`takePhoto()`). In other words, you draw into the Frame, not just on top of it.

### Performance

VisionCamera sets up an additional Skia rendering context which requires a few resources.

On iOS, Metal is used for GPU Acceleration. On Android, OpenGL is used for GPU Acceleration.
C++/JSI is used for highly efficient communication between JS and Skia.

### Disabling Skia Frame Processors

Skia Frame Processors ship with additional C++ files which might slightly increase the app's build time. If you're not using Skia Frame Processors at all, you can disable them:

#### Android

Inside your `gradle.properties` file, add the `disableSkia` flag:

```groovy
disableSkia=true
```

Then, clean and rebuild your project.

#### iOS

Inside your `Podfile`, add the `VCDisableSkia` flag:

```ruby
$VCDisableSkia = true
```


<br />

#### 🚀 Next section: [Zooming](/docs/guides/zooming) (or [creating a Frame Processor Plugin](/docs/guides/frame-processors-plugins-overview))

|

||||

@@ -9,14 +9,16 @@ sidebar_label: Finish creating your Frame Processor Plugin

|

||||

To make the Frame Processor Plugin available to the Frame Processor Worklet Runtime, create the following wrapper function in JS/TS:

|

||||

|

||||

```ts

|

||||

import { FrameProcessorPlugins, Frame } from 'react-native-vision-camera'

|

||||

import { VisionCameraProxy, Frame } from 'react-native-vision-camera'

|

||||

|

||||

const plugin = VisionCameraProxy.getFrameProcessorPlugin('scanFaces')

|

||||

|

||||

/**

|

||||

* Scans QR codes.

|

||||

* Scans faces.

|

||||

*/

|

||||

export function scanQRCodes(frame: Frame): string[] {

|

||||

export function scanFaces(frame: Frame): object {

|

||||

'worklet'

|

||||

return FrameProcessorPlugins.scanQRCodes(frame)

|

||||

return plugin.call(frame)

|

||||

}

|

||||

```
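
As a rough sketch of how such a wrapper might guard against a plugin that was never registered natively, the following uses stand-in types so that it runs without `react-native-vision-camera`. `FrameLike`, `PluginLike` and `createScanFaces` are hypothetical names, and it is an assumption that the plugin lookup can come back empty:

```ts
// Stand-in types so the sketch runs without react-native-vision-camera.
// The real `Frame` and plugin types come from the library and may differ.
interface FrameLike { width: number; height: number }
interface PluginLike { call(frame: FrameLike): unknown }

/** Wraps a plugin lookup result and fails loudly when the plugin is missing. */
function createScanFaces(plugin: PluginLike | undefined) {
  return function scanFaces(frame: FrameLike): unknown {
    // In a real Frame Processor, this function would start with the 'worklet' directive.
    if (plugin == null) {
      throw new Error('Frame Processor Plugin "scanFaces" is not registered!')
    }
    return plugin.call(frame)
  }
}

// Usage with a fake plugin that reports the Frame size instead of detecting faces.
const fakePlugin: PluginLike = {
  call: (frame) => ({ pixels: frame.width * frame.height }),
}
const scanFaces = createScanFaces(fakePlugin)
console.log(scanFaces({ width: 4, height: 3 })) // { pixels: 12 }
```

Failing with a descriptive error at call time tends to be easier to debug than an opaque "undefined is not a function" inside the Frame Processor.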

|

||||

|

||||

@@ -28,8 +30,8 @@ Simply call the wrapper Worklet in your Frame Processor:

|

||||

function App() {

|

||||

const frameProcessor = useFrameProcessor((frame) => {

|

||||

'worklet'

|

||||

const qrCodes = scanQRCodes(frame)

|

||||

console.log(`QR Codes in Frame: ${qrCodes}`)

|

||||

const faces = scanFaces(frame)

|

||||

console.log(`Faces in Frame: ${faces}`)

|

||||

}, [])

|

||||

|

||||

return (

|

||||

|

||||

@@ -10,7 +10,7 @@ import TabItem from '@theme/TabItem';

|

||||

## Creating a Frame Processor Plugin for Android

|

||||

|

||||

The Frame Processor Plugin API is built to be as extensible as possible, which allows you to create custom Frame Processor Plugins.

|

||||

In this guide we will create a custom QR Code Scanner Plugin which can be used from JS.

|

||||

In this guide we will create a custom Face Detector Plugin which can be used from JS.

|

||||

|

||||

Android Frame Processor Plugins can be written in either **Java**, **Kotlin** or **C++ (JNI)**.

|

||||

|

||||

@@ -23,7 +23,7 @@ npx vision-camera-plugin-builder android

|

||||

```

|

||||

|

||||

:::info

|

||||

The CLI will ask you for the path to project's Android Manifest file, name of the plugin (e.g. `QRCodeFrameProcessor`), name of the exposed method (e.g. `scanQRCodes`) and language you want to use for plugin development (Java or Kotlin).

|

||||

The CLI will ask you for the path to project's Android Manifest file, name of the plugin (e.g. `FaceDetectorFrameProcessorPlugin`), name of the exposed method (e.g. `detectFaces`) and language you want to use for plugin development (Java or Kotlin).

|

||||

For reference see the [CLI's docs](https://github.com/mateusz1913/vision-camera-plugin-builder#%EF%B8%8F-options).

|

||||

:::

|

||||

|

||||

@@ -35,7 +35,7 @@ For reference see the [CLI's docs](https://github.com/mateusz1913/vision-camera-

|

||||

@SuppressWarnings("UnnecessaryLocalVariable")

|

||||

List<ReactPackage> packages = new PackageList(this).getPackages();

|

||||

...

|

||||

packages.add(new QRCodeFrameProcessorPluginPackage()); // <- add

|

||||

packages.add(new FaceDetectorFrameProcessorPluginPackage()); // <- add

|

||||

return packages;

|

||||

}

|

||||

```

|

||||

@@ -51,33 +51,34 @@ For reference see the [CLI's docs](https://github.com/mateusz1913/vision-camera-

|

||||

<TabItem value="java">

|

||||

|

||||

1. Open your Project in Android Studio

|

||||

2. Create a Java source file, for the QR Code Plugin this will be called `QRCodeFrameProcessorPlugin.java`.

|

||||

2. Create a Java source file, for the Face Detector Plugin this will be called `FaceDetectorFrameProcessorPlugin.java`.

|

||||

3. Add the following code:

|

||||

|

||||

```java {8}

|

||||

import androidx.camera.core.ImageProxy;

|

||||

import com.mrousavy.camera.frameprocessor.FrameProcessorPlugin;

|

||||

|

||||

public class QRCodeFrameProcessorPlugin extends FrameProcessorPlugin {

|

||||

public class FaceDetectorFrameProcessorPlugin extends FrameProcessorPlugin {

|

||||

|

||||

@Override

|

||||

public Object callback(ImageProxy image, Object[] params) {

|

||||

public Object callback(ImageProxy image, ReadableNativeMap arguments) {

|

||||

// code goes here

|

||||

return null;

|

||||

}

|

||||

|

||||

QRCodeFrameProcessorPlugin() {

|

||||

super("scanQRCodes");

|

||||

@Override

|

||||

public String getName() {

|

||||

return "detectFaces";

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

:::note

|

||||

The Frame Processor Plugin will be exposed to JS through the `FrameProcessorPlugins` object using the name you pass to the `super(...)` call. In this case, it would be `FrameProcessorPlugins.scanQRCodes(...)`.

|

||||

The Frame Processor Plugin will be exposed to JS through the `VisionCameraProxy` object. In this case, it would be `VisionCameraProxy.getFrameProcessorPlugin("detectFaces")`.

|

||||

:::

|

||||

|

||||

4. **Implement your Frame Processing.** See the [Example Plugin (Java)](https://github.com/mrousavy/react-native-vision-camera/blob/main/example/android/app/src/main/java/com/mrousavy/camera/example/ExampleFrameProcessorPlugin.java) for reference.

|

||||

5. Create a new Java file which registers the Frame Processor Plugin in a React Package, for the QR Code Scanner plugin this file will be called `QRCodeFrameProcessorPluginPackage.java`:

|

||||

5. Create a new Java file which registers the Frame Processor Plugin in a React Package, for the Face Detector plugin this file will be called `FaceDetectorFrameProcessorPluginPackage.java`:

|

||||

|

||||

```java {12}

|

||||

import com.facebook.react.ReactPackage;

|

||||

@@ -87,11 +88,11 @@ import com.facebook.react.uimanager.ViewManager;

|

||||

import com.mrousavy.camera.frameprocessor.FrameProcessorPlugin;

|

||||

import javax.annotation.Nonnull;

|

||||

|

||||

public class QRCodeFrameProcessorPluginPackage implements ReactPackage {

|

||||

public class FaceDetectorFrameProcessorPluginPackage implements ReactPackage {

|

||||

@NonNull

|

||||

@Override

|

||||

public List<NativeModule> createNativeModules(@NonNull ReactApplicationContext reactContext) {

|

||||

FrameProcessorPlugin.register(new QRCodeFrameProcessorPlugin());

|

||||

FrameProcessorPlugin.register(new FaceDetectorFrameProcessorPlugin());

|

||||

return Collections.emptyList();

|

||||

}

|

||||

|

||||

@@ -111,7 +112,7 @@ public class QRCodeFrameProcessorPluginPackage implements ReactPackage {

|

||||

@SuppressWarnings("UnnecessaryLocalVariable")

|

||||

List<ReactPackage> packages = new PackageList(this).getPackages();

|

||||

...

|

||||

packages.add(new QRCodeFrameProcessorPluginPackage()); // <- add

|

||||

packages.add(new FaceDetectorFrameProcessorPluginPackage()); // <- add

|

||||

return packages;

|

||||

}

|

||||

```

|

||||

@@ -120,28 +121,32 @@ public class QRCodeFrameProcessorPluginPackage implements ReactPackage {

|

||||

<TabItem value="kotlin">

|

||||

|

||||

1. Open your Project in Android Studio

|

||||

2. Create a Kotlin source file, for the QR Code Plugin this will be called `QRCodeFrameProcessorPlugin.kt`.

|

||||

2. Create a Kotlin source file, for the Face Detector Plugin this will be called `FaceDetectorFrameProcessorPlugin.kt`.

|

||||

3. Add the following code:

|

||||

|

||||

```kotlin {7}

|

||||

import androidx.camera.core.ImageProxy

|

||||

import com.mrousavy.camera.frameprocessor.FrameProcessorPlugin

|

||||

|

||||

class ExampleFrameProcessorPluginKotlin: FrameProcessorPlugin("scanQRCodes") {

|

||||

class FaceDetectorFrameProcessorPlugin: FrameProcessorPlugin() {

|

||||

|

||||

override fun callback(image: ImageProxy, params: Array<Any>): Any? {

|

||||

override fun callback(image: ImageProxy, arguments: ReadableNativeMap): Any? {

|

||||

// code goes here

|

||||

return null

|

||||

}

|

||||

|

||||

override fun getName(): String {

|

||||

return "detectFaces"

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

:::note

|

||||

The Frame Processor Plugin will be exposed to JS through the `FrameProcessorPlugins` object using the name you pass to the `FrameProcessorPlugin(...)` call. In this case, it would be `FrameProcessorPlugins.scanQRCodes(...)`.

|

||||

The Frame Processor Plugin will be exposed to JS through the `VisionCameraProxy` object. In this case, it would be `VisionCameraProxy.getFrameProcessorPlugin("detectFaces")`.

|

||||

:::

|

||||

|

||||

4. **Implement your Frame Processing.** See the [Example Plugin (Java)](https://github.com/mrousavy/react-native-vision-camera/blob/main/example/android/app/src/main/java/com/mrousavy/camera/example/ExampleFrameProcessorPlugin.java) for reference.

|

||||

5. Create a new Kotlin file which registers the Frame Processor Plugin in a React Package, for the QR Code Scanner plugin this file will be called `QRCodeFrameProcessorPluginPackage.kt`:

|

||||

5. Create a new Kotlin file which registers the Frame Processor Plugin in a React Package, for the Face Detector plugin this file will be called `FaceDetectorFrameProcessorPluginPackage.kt`:

|

||||

|

||||

```kotlin {9}

|

||||

import com.facebook.react.ReactPackage

|

||||

@@ -150,9 +155,9 @@ import com.facebook.react.bridge.ReactApplicationContext

|

||||

import com.facebook.react.uimanager.ViewManager

|

||||

import com.mrousavy.camera.frameprocessor.FrameProcessorPlugin

|

||||

|

||||

class QRCodeFrameProcessorPluginPackage : ReactPackage {

|

||||

class FaceDetectorFrameProcessorPluginPackage : ReactPackage {

|

||||

override fun createNativeModules(reactContext: ReactApplicationContext): List<NativeModule> {

|

||||

FrameProcessorPlugin.register(ExampleFrameProcessorPluginKotlin())

|

||||

FrameProcessorPlugin.register(FaceDetectorFrameProcessorPlugin())

|

||||

return emptyList()

|

||||

}

|

||||

|

||||

@@ -170,7 +175,7 @@ class QRCodeFrameProcessorPluginPackage : ReactPackage {

|

||||

@SuppressWarnings("UnnecessaryLocalVariable")

|

||||

List<ReactPackage> packages = new PackageList(this).getPackages();

|

||||

...

|

||||

packages.add(new QRCodeFrameProcessorPluginPackage()); // <- add

|

||||

packages.add(new FaceDetectorFrameProcessorPluginPackage()); // <- add

|

||||

return packages;

|

||||

}

|

||||

```

|

||||

|

||||

@@ -10,7 +10,7 @@ import TabItem from '@theme/TabItem';

|

||||

## Creating a Frame Processor Plugin for iOS

|

||||

|

||||

The Frame Processor Plugin API is built to be as extensible as possible, which allows you to create custom Frame Processor Plugins.

|

||||

In this guide we will create a custom QR Code Scanner Plugin which can be used from JS.

|

||||

In this guide we will create a custom Face Detector Plugin which can be used from JS.

|

||||

|

||||

iOS Frame Processor Plugins can be written in either **Objective-C** or **Swift**.

|

||||

|

||||

@@ -23,7 +23,7 @@ npx vision-camera-plugin-builder ios

|

||||

```

|

||||

|

||||

:::info

|

||||

The CLI will ask you for the path to project's .xcodeproj file, name of the plugin (e.g. `QRCodeFrameProcessor`), name of the exposed method (e.g. `scanQRCodes`) and language you want to use for plugin development (Objective-C, Objective-C++ or Swift).

|

||||

The CLI will ask you for the path to project's .xcodeproj file, name of the plugin (e.g. `FaceDetectorFrameProcessorPlugin`), name of the exposed method (e.g. `detectFaces`) and language you want to use for plugin development (Objective-C, Objective-C++ or Swift).

|

||||

For reference see the [CLI's docs](https://github.com/mateusz1913/vision-camera-plugin-builder#%EF%B8%8F-options).

|

||||

:::

|

||||

|

||||

@@ -38,23 +38,25 @@ For reference see the [CLI's docs](https://github.com/mateusz1913/vision-camera-

|

||||

<TabItem value="objc">

|

||||

|

||||

1. Open your Project in Xcode

|

||||

2. Create an Objective-C source file, for the QR Code Plugin this will be called `QRCodeFrameProcessorPlugin.m`.

|

||||

2. Create an Objective-C source file, for the Face Detector Plugin this will be called `FaceDetectorFrameProcessorPlugin.m`.

|

||||

3. Add the following code:

|

||||

|

||||

```objc

|

||||

#import <VisionCamera/FrameProcessorPlugin.h>

|

||||

#import <VisionCamera/FrameProcessorPluginRegistry.h>

|

||||

#import <VisionCamera/Frame.h>

|

||||

|

||||

@interface QRCodeFrameProcessorPlugin : FrameProcessorPlugin

|

||||

@interface FaceDetectorFrameProcessorPlugin : FrameProcessorPlugin

|

||||

@end

|

||||

|

||||

@implementation QRCodeFrameProcessorPlugin

|

||||

@implementation FaceDetectorFrameProcessorPlugin

|

||||

|

||||

- (NSString *)name {

|

||||

return @"scanQRCodes";

|

||||

- (instancetype) initWithOptions:(NSDictionary*)options; {

|

||||

self = [super init];

|

||||

return self;

|

||||

}

|

||||

|

||||

- (id)callback:(Frame *)frame withArguments:(NSArray<id> *)arguments {

|

||||

- (id)callback:(Frame*)frame withArguments:(NSDictionary*)arguments {

|

||||

CMSampleBufferRef buffer = frame.buffer;

|

||||

UIImageOrientation orientation = frame.orientation;

|

||||

// code goes here

|

||||

@@ -62,14 +64,17 @@ For reference see the [CLI's docs](https://github.com/mateusz1913/vision-camera-

|

||||

}

|

||||

|

||||

+ (void) load {

|

||||

[self registerPlugin:[[ExampleFrameProcessorPlugin alloc] init]];

|

||||

[FrameProcessorPluginRegistry addFrameProcessorPlugin:@"detectFaces"

|

||||

withInitializer:^FrameProcessorPlugin*(NSDictionary* options) {

|

||||

return [[FaceDetectorFrameProcessorPlugin alloc] initWithOptions:options];

|

||||

}];

|

||||

}

|

||||

|

||||

@end

|

||||

```

|

||||

|

||||

:::note

|

||||

The Frame Processor Plugin will be exposed to JS through the `FrameProcessorPlugins` object using the name returned from the `name` getter. In this case, it would be `FrameProcessorPlugins.scanQRCodes(...)`.

|

||||

The Frame Processor Plugin will be exposed to JS through the `VisionCameraProxy` object. In this case, it would be `VisionCameraProxy.getFrameProcessorPlugin("detectFaces")`.

|

||||

:::

|

||||

|

||||

4. **Implement your Frame Processing.** See the [Example Plugin (Objective-C)](https://github.com/mrousavy/react-native-vision-camera/blob/main/example/ios/Frame%20Processor%20Plugins/Example%20Plugin%20%28Objective%2DC%29) for reference.

|

||||

@@ -78,7 +83,7 @@ The Frame Processor Plugin will be exposed to JS through the `FrameProcessorPlug

|

||||

<TabItem value="swift">

|

||||

|

||||

1. Open your Project in Xcode

|

||||

2. Create a Swift file, for the QR Code Plugin this will be `QRCodeFrameProcessorPlugin.swift`. If Xcode asks you to create a Bridging Header, press **create**.

|

||||

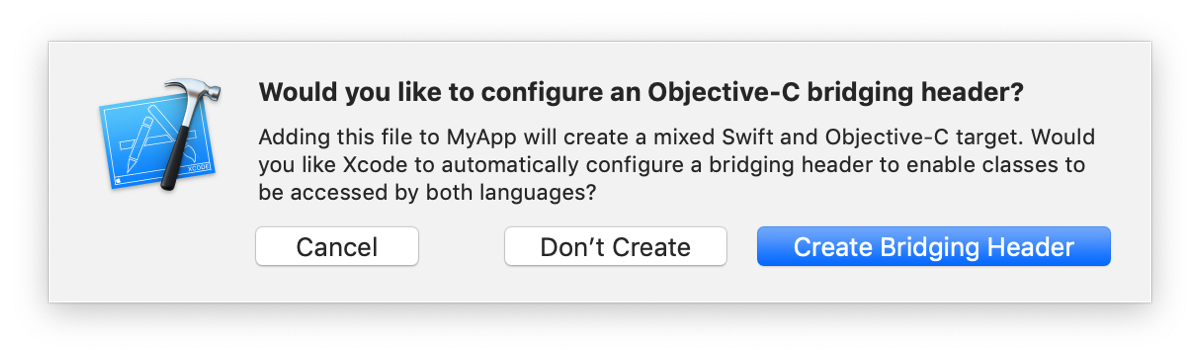

2. Create a Swift file, for the Face Detector Plugin this will be `FaceDetectorFrameProcessorPlugin.swift`. If Xcode asks you to create a Bridging Header, press **create**.

|

||||

|

||||

|

||||

|

||||

@@ -92,13 +97,9 @@ The Frame Processor Plugin will be exposed to JS through the `FrameProcessorPlug

|

||||

4. In the Swift file, add the following code:

|

||||

|

||||

```swift

|

||||

@objc(QRCodeFrameProcessorPlugin)

|

||||

public class QRCodeFrameProcessorPlugin: FrameProcessorPlugin {

|

||||

override public func name() -> String! {

|

||||

return "scanQRCodes"

|

||||

}

|

||||

|

||||

public override func callback(_ frame: Frame!, withArguments arguments: [Any]!) -> Any! {

|

||||

@objc(FaceDetectorFrameProcessorPlugin)

|

||||

public class FaceDetectorFrameProcessorPlugin: FrameProcessorPlugin {

|

||||

public override func callback(_ frame: Frame!, withArguments arguments: [String:Any]) -> Any {

|

||||

let buffer = frame.buffer

|

||||

let orientation = frame.orientation

|

||||

// code goes here

|

||||

@@ -107,11 +108,12 @@ public class QRCodeFrameProcessorPlugin: FrameProcessorPlugin {

|

||||

}

|

||||

```

|

||||

|

||||

5. In your `AppDelegate.m`, add the following imports (you can skip this if your AppDelegate is in Swift):

|

||||

5. In your `AppDelegate.m`, add the following imports:

|

||||

|

||||

```objc

|

||||

#import "YOUR_XCODE_PROJECT_NAME-Swift.h"

|

||||

#import <VisionCamera/FrameProcessorPlugin.h>

|

||||

#import <VisionCamera/FrameProcessorPluginRegistry.h>

|

||||

```

|

||||

|

||||

6. In your `AppDelegate.m`, add the following code to `application:didFinishLaunchingWithOptions:`:

|

||||

@@ -121,7 +123,10 @@ public class QRCodeFrameProcessorPlugin: FrameProcessorPlugin {

|

||||

{

|

||||

...

|

||||

|

||||

[FrameProcessorPlugin registerPlugin:[[QRCodeFrameProcessorPlugin alloc] init]];

|

||||

[FrameProcessorPluginRegistry addFrameProcessorPlugin:@"detectFaces"

|

||||

withInitializer:^FrameProcessorPlugin*(NSDictionary* options) {

|

||||

return [[FaceDetectorFrameProcessorPlugin alloc] initWithOptions:options];

|

||||

}];

|

||||

|

||||

return [super application:application didFinishLaunchingWithOptions:launchOptions];

|

||||

}

|

||||

|

||||

@@ -21,10 +21,10 @@ Before opening an issue, make sure you try the following:

|

||||

npm i # or "yarn"

|

||||

cd ios && pod repo update && pod update && pod install

|

||||

```

|

||||

2. Check your minimum iOS version. VisionCamera requires a minimum iOS version of **11.0**.

|

||||

2. Check your minimum iOS version. VisionCamera requires a minimum iOS version of **12.4**.

|

||||

1. Open your `Podfile`

|

||||

2. Make sure `platform :ios` is set to `11.0` or higher

|

||||

3. Make sure `iOS Deployment Target` is set to `11.0` or higher (`IPHONEOS_DEPLOYMENT_TARGET` in `project.pbxproj`)

|

||||

2. Make sure `platform :ios` is set to `12.4` or higher

|

||||

3. Make sure `iOS Deployment Target` is set to `12.4` or higher (`IPHONEOS_DEPLOYMENT_TARGET` in `project.pbxproj`)

|

||||

3. Check your Swift version. VisionCamera requires a minimum Swift version of **5.2**.

|

||||

1. Open `project.pbxproj` in a Text Editor

|

||||

2. If the `LIBRARY_SEARCH_PATH` value is set, make sure there is no explicit reference to Swift-5.0. If there is, remove it. See [this StackOverflow answer](https://stackoverflow.com/a/66281846/1123156).

|

||||

@@ -35,9 +35,12 @@ Before opening an issue, make sure you try the following:

|

||||

3. Select **Swift File** and press **Next**

|

||||

4. Choose whatever name you want, e.g. `File.swift` and press **Create**

|

||||

5. Press **Create Bridging Header** when prompted.

|

||||

5. If you're having runtime issues, check the logs in Xcode to find out more. In Xcode, go to **View** > **Debug Area** > **Activate Console** (<kbd>⇧</kbd>+<kbd>⌘</kbd>+<kbd>C</kbd>).

|

||||

5. If you're having build issues, try:

|

||||

1. Building without Skia. Set `$VCDisableSkia = true` in the top of your Podfile, and try rebuilding.

|

||||

2. Building without Frame Processors. Set `$VCDisableFrameProcessors = true` in the top of your Podfile, and try rebuilding.

|

||||

6. If you're having runtime issues, check the logs in Xcode to find out more. In Xcode, go to **View** > **Debug Area** > **Activate Console** (<kbd>⇧</kbd>+<kbd>⌘</kbd>+<kbd>C</kbd>).

|

||||

* For errors without messages, there's often an error code attached. Look up the error code on [osstatus.com](https://www.osstatus.com) to get more information about a specific error.

|

||||

6. If your Frame Processor is not running, make sure you check the native Xcode logs to find out why. Also make sure you are not using a remote JS debugger such as Google Chrome, since those don't work with JSI.

|

||||

7. If your Frame Processor is not running, make sure you check the native Xcode logs to find out why. Also make sure you are not using a remote JS debugger such as Google Chrome, since those don't work with JSI.

|

||||

|

||||

## Android

|

||||

|

||||

@@ -64,9 +67,12 @@ Before opening an issue, make sure you try the following:

|

||||

```

|

||||

distributionUrl=https\://services.gradle.org/distributions/gradle-7.5.1-all.zip

|

||||

```

|

||||

5. If you're having runtime issues, check the logs in Android Studio/Logcat to find out more. In Android Studio, go to **View** > **Tool Windows** > **Logcat** (<kbd>⌘</kbd>+<kbd>6</kbd>) or run `adb logcat` in Terminal.

|

||||

6. If a camera device is not being returned by [`Camera.getAvailableCameraDevices()`](/docs/api/classes/Camera#getavailablecameradevices), make sure it is a Camera2 compatible device. See [this section in the Android docs](https://developer.android.com/reference/android/hardware/camera2/CameraDevice#reprocessing) for more information.

|

||||

7. If your Frame Processor is not running, make sure you check the native Android Studio/Logcat logs to find out why. Also make sure you are not using a remote JS debugger such as Google Chrome, since those don't work with JSI.

|

||||

5. If you're having build issues, try:

|

||||

1. Building without Skia. Set `disableSkia = true` in your `gradle.properties`, and try rebuilding.

|

||||

2. Building without Frame Processors. Set `disableFrameProcessors = true` in your `gradle.properties`, and try rebuilding.

|

||||

6. If you're having runtime issues, check the logs in Android Studio/Logcat to find out more. In Android Studio, go to **View** > **Tool Windows** > **Logcat** (<kbd>⌘</kbd>+<kbd>6</kbd>) or run `adb logcat` in Terminal.

|

||||

7. If a camera device is not being returned by [`Camera.getAvailableCameraDevices()`](/docs/api/classes/Camera#getavailablecameradevices), make sure it is a Camera2 compatible device. See [this section in the Android docs](https://developer.android.com/reference/android/hardware/camera2/CameraDevice#reprocessing) for more information.

|

||||

8. If your Frame Processor is not running, make sure you check the native Android Studio/Logcat logs to find out why. Also make sure you are not using a remote JS debugger such as Google Chrome, since those don't work with JSI.

|

||||

|

||||

## Issues

|

||||

|

||||

|

||||

BIN

docs/static/img/slow-log-2.png

vendored


Binary file not shown.

|

Before Width: | Height: | Size: 20 KiB |

BIN

docs/static/img/slow-log.png

vendored


Binary file not shown.

|

Before Width: | Height: | Size: 29 KiB |
