feat: Expose unified VisionCameraProxy object, make FrameProcessorPlugins object-oriented (#1660)

* feat: Replace `FrameProcessorRuntimeManager` with `VisionCameraProxy` (iOS)
* Make `FrameProcessorPlugin` a constructable HostObject
* fix: Fix `name` override
* Simplify `useFrameProcessor`
* fix: Fix lint errors
* Remove FrameProcessorPlugin::name
* JSIUtils -> JSINSObjectConversion


@@ -10,14 +10,25 @@ while !Dir.exist?(File.join(nodeModules, "node_modules")) && tries < 10

|

||||

end

|

||||

nodeModules = File.join(nodeModules, "node_modules")

|

||||

|

||||

puts("[VisionCamera] node modules #{Dir.exist?(nodeModules) ? "found at #{nodeModules}" : "not found!"}")

|

||||

forceDisableFrameProcessors = false

|

||||

if defined?($VCDisableFrameProcessors)

|

||||

Pod::UI.puts "[VisionCamera] $VCDisableFrameProcessors is set to #{$VCDisableFrameProcessors}!"

|

||||

forceDisableFrameProcessors = $VCDisableFrameProcessors

|

||||

end

|

||||

forceDisableSkia = false

|

||||

if defined?($VCDisableSkia)

|

||||

Pod::UI.puts "[VisionCamera] $VCDisableSkia is set to #{$VCDisableSkia}!"

|

||||

forceDisableSkia = $VCDisableSkia

|

||||

end

|

||||

|

||||

Pod::UI.puts("[VisionCamera] node modules #{Dir.exist?(nodeModules) ? "found at #{nodeModules}" : "not found!"}")

|

||||

workletsPath = File.join(nodeModules, "react-native-worklets")

|

||||

hasWorklets = File.exist?(workletsPath)

|

||||

puts "[VisionCamera] react-native-worklets #{hasWorklets ? "found" : "not found"}, Frame Processors #{hasWorklets ? "enabled" : "disabled"}!"

|

||||

hasWorklets = File.exist?(workletsPath) && !forceDisableFrameProcessors

|

||||

Pod::UI.puts("[VisionCamera] react-native-worklets #{hasWorklets ? "found" : "not found"}, Frame Processors #{hasWorklets ? "enabled" : "disabled"}!")

|

||||

|

||||

skiaPath = File.join(nodeModules, "@shopify", "react-native-skia")

|

||||

hasSkia = hasWorklets && File.exist?(skiaPath)

|

||||

puts "[VisionCamera] react-native-skia #{hasSkia ? "found" : "not found"}, Skia Frame Processors #{hasSkia ? "enabled" : "disabled"}!"

|

||||

hasSkia = hasWorklets && File.exist?(skiaPath) && !forceDisableSkia

|

||||

Pod::UI.puts("[VisionCamera] react-native-skia #{hasSkia ? "found" : "not found"}, Skia Frame Processors #{hasSkia ? "enabled" : "disabled"}!")

|

||||

|

||||

Pod::Spec.new do |s|

|

||||

s.name = "VisionCamera"

|

||||

@@ -54,8 +65,9 @@ Pod::Spec.new do |s|

|

||||

hasWorklets ? "ios/Frame Processor/*.{m,mm,swift}" : "",

|

||||

hasWorklets ? "ios/Frame Processor/Frame.h" : "",

|

||||

hasWorklets ? "ios/Frame Processor/FrameProcessor.h" : "",

|

||||

hasWorklets ? "ios/Frame Processor/FrameProcessorRuntimeManager.h" : "",

|

||||

hasWorklets ? "ios/Frame Processor/FrameProcessorPlugin.h" : "",

|

||||

hasWorklets ? "ios/Frame Processor/FrameProcessorPluginRegistry.h" : "",

|

||||

hasWorklets ? "ios/Frame Processor/VisionCameraProxy.h" : "",

|

||||

hasWorklets ? "cpp/**/*.{cpp}" : "",

|

||||

|

||||

# Skia Frame Processors

|

||||

|

||||

@@ -73,10 +73,8 @@ class PropNameIDCache {

|

||||

|

||||

PropNameIDCache propNameIDCache;

|

||||

|

||||

InvalidateCacheOnDestroy::InvalidateCacheOnDestroy(jsi::Runtime &runtime) {

|

||||

key = reinterpret_cast<uintptr_t>(&runtime);

|

||||

}

|

||||

InvalidateCacheOnDestroy::~InvalidateCacheOnDestroy() {

|

||||

void invalidateArrayBufferCache(jsi::Runtime& runtime) {

|

||||

auto key = reinterpret_cast<uintptr_t>(&runtime);

|

||||

propNameIDCache.invalidate(key);

|

||||

}

|

||||

|

||||

|

||||

@@ -74,24 +74,7 @@ struct typedArrayTypeMap<TypedArrayKind::Float64Array> {

|

||||

typedef double type;

|

||||

};

|

||||

|

||||

// Instance of this class will invalidate PropNameIDCache when destructor is called.

|

||||

// Attach this object to global in specific jsi::Runtime to make sure lifecycle of

|

||||

// the cache object is connected to the lifecycle of the js runtime

|

||||

class InvalidateCacheOnDestroy : public jsi::HostObject {

|

||||

public:

|

||||

explicit InvalidateCacheOnDestroy(jsi::Runtime &runtime);

|

||||

virtual ~InvalidateCacheOnDestroy();

|

||||

virtual jsi::Value get(jsi::Runtime &, const jsi::PropNameID &name) {

|

||||

return jsi::Value::null();

|

||||

}

|

||||

virtual void set(jsi::Runtime &, const jsi::PropNameID &name, const jsi::Value &value) {}

|

||||

virtual std::vector<jsi::PropNameID> getPropertyNames(jsi::Runtime &rt) {

|

||||

return {};

|

||||

}

|

||||

|

||||

private:

|

||||

uintptr_t key;

|

||||

};

|

||||

void invalidateArrayBufferCache(jsi::Runtime& runtime);

|

||||

|

||||

class TypedArrayBase : public jsi::Object {

|

||||

public:

|

||||

|

||||

@@ -120,6 +120,36 @@ const frameProcessor = useFrameProcessor((frame) => {

|

||||

}, [onQRCodeDetected])

|

||||

```

|

||||

|

||||

### Running asynchronously

|

||||

|

||||

Since Frame Processors run synchronously with the Camera Pipeline, anything taking longer than one Frame interval might block the Camera from streaming new Frames. To avoid this, you can use `runAsync` to run code asynchronously on a different Thread:

|

||||

|

||||

```ts

|

||||

const frameProcessor = useFrameProcessor((frame) => {

|

||||

'worklet'

|

||||

console.log("I'm running synchronously at 60 FPS!")

|

||||

runAsync(() => {

|

||||

'worklet'

|

||||

console.log("I'm running asynchronously, possibly at a lower FPS rate!")

|

||||

})

|

||||

}, [])

|

||||

```
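A minimal sketch of one way such an asynchronous runner could behave, assuming that calls arriving while a previous task is still running are dropped rather than queued (which would explain the "possibly lower FPS rate" above). `createDroppingRunner` and its `schedule` parameter are hypothetical names for illustration, not part of VisionCamera's API:

```ts
type Task = () => void

// Hypothetical helper: runs at most one task at a time and drops calls
// that arrive while the previous task has not finished yet.
function createDroppingRunner(schedule: (job: () => void) => void) {
  let isBusy = false
  let dropped = 0

  return {
    // Returns true if the task was accepted, false if it was dropped.
    run(task: Task): boolean {
      if (isBusy) {
        dropped++
        return false
      }
      isBusy = true
      schedule(() => {
        try {
          task()
        } finally {
          // Accept new work again once the task has finished (or thrown).
          isBusy = false
        }
      })
      return true
    },
    droppedCount: (): number => dropped,
  }
}
```

Here `schedule` stands in for whatever hands the job to another Thread. The trade-off of dropping instead of queueing is that a slow task never builds up a backlog of stale Frames.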

|

||||

|

||||

### Running at a throttled FPS rate

|

||||

|

||||

Some Frame Processor Plugins don't need to run on every Frame. For example, a Frame Processor that detects the brightness in a Frame only needs to run twice per second:

|

||||

|

||||

```ts

|

||||

const frameProcessor = useFrameProcessor((frame) => {

|

||||

'worklet'

|

||||

console.log("I'm running synchronously at 60 FPS!")

|

||||

runAtTargetFps(2, () => {

|

||||

'worklet'

|

||||

console.log("I'm running synchronously at 2 FPS!")

|

||||

})

|

||||

}, [])

|

||||

```
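To illustrate the idea behind throttling, here is a small timestamp-based sketch. It is not VisionCamera's implementation of `runAtTargetFps`; `createFpsThrottle` is a hypothetical helper, and the caller passes the current time in milliseconds explicitly so the behavior is easy to follow:

```ts
// Hypothetical helper: returns a function that only invokes `fn` if at least
// 1000 / targetFps milliseconds have passed since the last accepted call.
function createFpsThrottle(
  targetFps: number
): (nowMs: number, fn: () => void) => boolean {
  const minIntervalMs = 1000 / targetFps
  let lastRunMs: number | undefined

  return (nowMs, fn) => {
    if (lastRunMs !== undefined && nowMs - lastRunMs < minIntervalMs) {
      // Too early: skip this Frame.
      return false
    }
    lastRunMs = nowMs
    fn()
    return true
  }
}

// With a target of 2 FPS, only calls that are 500 ms apart get through.
const throttle = createFpsThrottle(2)
throttle(0, () => {})   // runs
throttle(100, () => {}) // skipped
throttle(500, () => {}) // runs
```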

|

||||

|

||||

### Using Frame Processor Plugins

|

||||

|

||||

Frame Processor Plugins are distributed through npm. To install the [**vision-camera-image-labeler**](https://github.com/mrousavy/vision-camera-image-labeler) plugin, run:

|

||||

@@ -204,7 +234,7 @@ The Frame Processor API spawns a secondary JavaScript Runtime which consumes a s

|

||||

|

||||

Inside your `gradle.properties` file, add the `disableFrameProcessors` flag:

|

||||

|

||||

```

|

||||

```groovy

|

||||

disableFrameProcessors=true

|

||||

```

|

||||

|

||||

@@ -212,18 +242,12 @@ Then, clean and rebuild your project.

|

||||

|

||||

#### iOS

|

||||

|

||||

Inside your `project.pbxproj`, find the `GCC_PREPROCESSOR_DEFINITIONS` group and add the flag:

|

||||

Inside your `Podfile`, add the `VCDisableFrameProcessors` flag:

|

||||

|

||||

```txt {3}

|

||||

GCC_PREPROCESSOR_DEFINITIONS = (

|

||||

"DEBUG=1",

|

||||

"VISION_CAMERA_DISABLE_FRAME_PROCESSORS=1",

|

||||

"$(inherited)",

|

||||

);

|

||||

```ruby

|

||||

$VCDisableFrameProcessors = true

|

||||

```

|

||||

|

||||

Make sure to add this to your Debug-, as well as your Release-configuration.

|

||||

|

||||

</TabItem>

|

||||

|

||||

<TabItem value="expo">

|

||||

|

||||

@@ -12,14 +12,14 @@ import TabItem from '@theme/TabItem';

|

||||

|

||||

Frame Processor Plugins are **native functions** which can be directly called from a JS Frame Processor. (See ["Frame Processors"](frame-processors))

|

||||

|

||||

They **receive a frame from the Camera** as an input and can return any kind of output. For example, a `scanQRCodes` function returns an array of detected QR code strings in the frame:

|

||||

They **receive a frame from the Camera** as an input and can return any kind of output. For example, a `detectFaces` function returns an array of detected faces in the frame:

|

||||

|

||||

```tsx {4-5}

|

||||

function App() {

|

||||

const frameProcessor = useFrameProcessor((frame) => {

|

||||

'worklet'

|

||||

const qrCodes = scanQRCodes(frame)

|

||||

console.log(`QR Codes in Frame: ${qrCodes}`)

|

||||

const faces = detectFaces(frame)

|

||||

console.log(`Faces in Frame: ${faces}`)

|

||||

}, [])

|

||||

|

||||

return (

|

||||

@@ -28,7 +28,7 @@ function App() {

|

||||

}

|

||||

```

|

||||

|

||||

To achieve **maximum performance**, the `scanQRCodes` function is written in a native language (e.g. Objective-C), but it will be directly called from the VisionCamera Frame Processor JavaScript-Runtime.

|

||||

To achieve **maximum performance**, the `detectFaces` function is written in a native language (e.g. Objective-C), but it will be directly called from the VisionCamera Frame Processor JavaScript-Runtime.

|

||||

|

||||

### Types

|

||||

|

||||

@@ -43,7 +43,7 @@ Similar to a TurboModule, the Frame Processor Plugin Registry API automatically

|

||||

| `{}` | `NSDictionary*` | `ReadableNativeMap` |

|

||||

| `undefined` / `null` | `nil` | `null` |

|

||||

| `(any, any) => void` | [`RCTResponseSenderBlock`][4] | `(Object, Object) -> void` |

|

||||

| [`Frame`][1] | [`Frame*`][2] | [`ImageProxy`][3] |

|

||||

| [`Frame`][1] | [`Frame*`][2] | [`Frame`][3] |

|

||||

|

||||

### Return values

|

||||

|

||||

@@ -51,7 +51,7 @@ Return values will automatically be converted to JS values, assuming they are re

|

||||

|

||||

```java

|

||||

@Override

|

||||

public Object callback(ImageProxy image, Object[] params) {

|

||||

public Object callback(Frame frame, Object[] params) {

|

||||

return "cat";

|

||||

}

|

||||

```

|

||||

@@ -66,13 +66,13 @@ export function detectObject(frame: Frame): string {

|

||||

}

|

||||

```

|

||||

|

||||

You can also manipulate the buffer and return it (or a copy of it) by returning a [`Frame`][2]/[`ImageProxy`][3] instance:

|

||||

You can also manipulate the buffer and return it (or a copy of it) by returning a [`Frame`][2]/[`Frame`][3] instance:

|

||||

|

||||

```java

|

||||

@Override

|

||||

public Object callback(ImageProxy image, Object[] params) {

|

||||

ImageProxy resizedImage = new ImageProxy(/* ... */);

|

||||

return resizedImage;

|

||||

public Object callback(Frame frame, Object[] params) {

|

||||

Frame resizedFrame = new Frame(/* ... */);

|

||||

return resizedFrame;

|

||||

}

|

||||

```

|

||||

|

||||

@@ -97,16 +97,7 @@ Frame Processors can also accept parameters, following the same type convention

|

||||

```ts

|

||||

const frameProcessor = useFrameProcessor((frame) => {

|

||||

'worklet'

|

||||

const codes = scanCodes(frame, ['qr', 'barcode'])

|

||||

}, [])

|

||||

```

|

||||

|

||||

Or with multiple ("variadic") parameters:

|

||||

|

||||

```ts

|

||||

const frameProcessor = useFrameProcessor((frame) => {

|

||||

'worklet'

|

||||

const codes = scanCodes(frame, true, 'hello-world', 42)

|

||||

const codes = scanCodes(frame, { codes: ['qr', 'barcode'] })

|

||||

}, [])

|

||||

```

|

||||

|

||||

@@ -116,7 +107,7 @@ To let the user know that something went wrong you can use Exceptions:

|

||||

|

||||

```java

|

||||

@Override

|

||||

public Object callback(ImageProxy image, Object[] params) {

|

||||

public Object callback(Frame frame, Object[] params) {

|

||||

if (params[0] instanceof String) {

|

||||

// ...

|

||||

} else {

|

||||

@@ -152,13 +143,13 @@ For example, a realtime video chat application might use WebRTC to send the fram

|

||||

|

||||

```java

|

||||

@Override

|

||||

public Object callback(ImageProxy image, Object[] params) {

|

||||

public Object callback(Frame frame, Object[] params) {

|

||||

String serverURL = (String)params[0];

|

||||

ImageProxy imageCopy = new ImageProxy(/* ... */);

|

||||

Frame frameCopy = new Frame(/* ... */);

|

||||

|

||||

uploaderQueue.runAsync(() -> {

|

||||

WebRTC.uploadImage(imageCopy, serverURL);

|

||||

imageCopy.close();

|

||||

WebRTC.uploadImage(frameCopy, serverURL);

|

||||

frameCopy.close();

|

||||

});

|

||||

|

||||

return null;

|

||||

@@ -195,14 +186,7 @@ This way you can handle queueing up the frames yourself and asynchronously call

|

||||

|

||||

### Benchmarking Frame Processor Plugins

|

||||

|

||||

Your Frame Processor Plugins have to be fast. VisionCamera automatically detects slow Frame Processors and outputs relevant information in the native console (Xcode: **Debug Area**, Android Studio: **Logcat**):

|

||||

|

||||

<div align="center">

|

||||

<img src={useBaseUrl("img/slow-log.png")} width="80%" />

|

||||

</div>

|

||||

<div align="center">

|

||||

<img src={useBaseUrl("img/slow-log-2.png")} width="80%" />

|

||||

</div>

|

||||

Your Frame Processor Plugins have to be fast. Use the FPS Graph (`enableFpsGraph`) to see how fast your Camera is running. If it is not running at the target FPS, your Frame Processor is too slow.

|

||||

|

||||

<br />

|

||||

|

||||

|

||||

110

docs/docs/guides/FRAME_PROCESSORS_SKIA.mdx

Normal file


@@ -0,0 +1,110 @@

|

||||

---

|

||||

id: frame-processors-skia

|

||||

title: Skia Frame Processors

|

||||

sidebar_label: Skia Frame Processors

|

||||

---

|

||||

|

||||

import Tabs from '@theme/Tabs';

|

||||

import TabItem from '@theme/TabItem';

|

||||

import useBaseUrl from '@docusaurus/useBaseUrl';

|

||||

|

||||

<div>

|

||||

<svg xmlns="http://www.w3.org/2000/svg" width="283" height="535" style={{ float: 'right' }}>

|

||||

<image href={useBaseUrl("img/frame-processors.gif")} x="18" y="33" width="247" height="469" />

|

||||

<image href={useBaseUrl("img/frame.png")} width="283" height="535" />

|

||||

</svg>

|

||||

</div>

|

||||

|

||||

### What are Skia Frame Processors?

|

||||

|

||||

Skia Frame Processors are [Frame Processors](frame-processors) that allow you to draw onto the Frame using [react-native-skia](https://github.com/Shopify/react-native-skia).

|

||||

|

||||

For example, you might want to draw a rectangle around a user's face **without writing any native code**, while still **achieving native performance**:

|

||||

|

||||

```jsx

|

||||

function App() {

|

||||

const frameProcessor = useSkiaFrameProcessor((frame) => {

|

||||

'worklet'

|

||||

const faces = detectFaces(frame)

|

||||

faces.forEach((face) => {

|

||||

frame.drawRect(face.rectangle, redPaint)

|

||||

})

|

||||

}, [])

|

||||

|

||||

return (

|

||||

<Camera

|

||||

{...cameraProps}

|

||||

frameProcessor={frameProcessor}

|

||||

/>

|

||||

)

|

||||

}

|

||||

```

|

||||

|

||||

With Skia, you can also implement realtime filters, blurring, shaders, and much more. For example, this is how you invert the colors in a Frame:

|

||||

|

||||

```jsx

|

||||

const INVERTED_COLORS_SHADER = `

|

||||

uniform shader image;

|

||||

|

||||

half4 main(vec2 pos) {

|

||||

vec4 color = image.eval(pos);

|

||||

return vec4(1.0 - color.rgb, 1.0);

|

||||

}

|

||||

`;

|

||||

|

||||

function App() {

|

||||

const imageFilter = Skia.ImageFilter.MakeRuntimeShader(/* INVERTED_COLORS_SHADER */)

|

||||

const paint = Skia.Paint()

|

||||

paint.setImageFilter(imageFilter)

|

||||

|

||||

const frameProcessor = useSkiaFrameProcessor((frame) => {

|

||||

'worklet'

|

||||

frame.render(paint)

|

||||

}, [])

|

||||

|

||||

return (

|

||||

<Camera

|

||||

{...cameraProps}

|

||||

frameProcessor={frameProcessor}

|

||||

/>

|

||||

)

|

||||

}

|

||||

```
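For intuition, the per-pixel arithmetic of the shader above can be written out in plain TypeScript. This is only an illustration of the formula `vec4(1.0 - color.rgb, 1.0)` on normalized color values; the `Rgba` type and `invertColor` function are hypothetical names, and the real work happens on the GPU:

```ts
// Normalized color: each channel is in the range 0.0 to 1.0.
type Rgba = [number, number, number, number]

// Mirrors the shader: invert red, green and blue, and force alpha to 1.0.
function invertColor([r, g, b]: Rgba): Rgba {
  return [1 - r, 1 - g, 1 - b, 1]
}
```

Note that, like the shader, this discards the incoming alpha value and always returns a fully opaque color.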

|

||||

|

||||

### Rendered outputs

|

||||

|

||||

The output of a Skia Frame Processor is rendered to an offscreen context and is displayed in the Camera Preview, recorded to a video file (`startRecording()`), and captured in a photo (`takePhoto()`). In other words, you draw into the Frame, not just on top of it.

|

||||

|

||||

### Performance

|

||||

|

||||

VisionCamera sets up an additional Skia rendering context which requires a few resources.

|

||||

|

||||

On iOS, Metal is used for GPU Acceleration. On Android, OpenGL is used for GPU Acceleration.

|

||||

C++/JSI is used for highly efficient communication between JS and Skia.

|

||||

|

||||

### Disabling Skia Frame Processors

|

||||

|

||||

Skia Frame Processors ship with additional C++ files which might slightly increase the app's build time. If you're not using Skia Frame Processors at all, you can disable them:

|

||||

|

||||

#### Android

|

||||

|

||||

Inside your `gradle.properties` file, add the `disableSkia` flag:

|

||||

|

||||

```groovy

|

||||

disableSkia=true

|

||||

```

|

||||

|

||||

Then, clean and rebuild your project.

|

||||

|

||||

#### iOS

|

||||

|

||||

Inside your `Podfile`, add the `VCDisableSkia` flag:

|

||||

|

||||

```ruby

|

||||

$VCDisableSkia = true

|

||||

```

|

||||

|

||||

|

||||

<br />

|

||||

|

||||

#### 🚀 Next section: [Zooming](/docs/guides/zooming) (or [creating a Frame Processor Plugin](/docs/guides/frame-processors-plugins-overview))

|

||||

@@ -9,14 +9,16 @@ sidebar_label: Finish creating your Frame Processor Plugin

|

||||

To make the Frame Processor Plugin available to the Frame Processor Worklet Runtime, create the following wrapper function in JS/TS:

|

||||

|

||||

```ts

|

||||

import { FrameProcessorPlugins, Frame } from 'react-native-vision-camera'

|

||||

import { VisionCameraProxy, Frame } from 'react-native-vision-camera'

|

||||

|

||||

const plugin = VisionCameraProxy.getFrameProcessorPlugin('scanFaces')

|

||||

|

||||

/**

|

||||

* Scans QR codes.

|

||||

* Scans faces.

|

||||

*/

|

||||

export function scanQRCodes(frame: Frame): string[] {

|

||||

export function scanFaces(frame: Frame): object {

|

||||

'worklet'

|

||||

return FrameProcessorPlugins.scanQRCodes(frame)

|

||||

return plugin.call(frame)

|

||||

}

|

||||

```
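The wrapper can also guard against the plugin not being registered. The sketch below assumes that `getFrameProcessorPlugin` returns `undefined` for an unknown name, which the text above does not state. The `Frame`, `FrameProcessorPlugin` and `PluginProxy` interfaces are local stand-ins so the example compiles on its own, and `createScanFaces` is a hypothetical helper:

```ts
// Stand-in types; in an app these would come from 'react-native-vision-camera'.
interface Frame {
  width: number
  height: number
}
interface FrameProcessorPlugin {
  call(frame: Frame, options?: Record<string, unknown>): unknown
}
interface PluginProxy {
  // Assumption: an unknown plugin name yields undefined.
  getFrameProcessorPlugin(name: string): FrameProcessorPlugin | undefined
}

// Looks the plugin up once and fails early if it is missing, instead of
// failing later inside the Frame Processor on every Frame.
function createScanFaces(proxy: PluginProxy): (frame: Frame) => unknown {
  const plugin = proxy.getFrameProcessorPlugin('scanFaces')
  if (plugin == null) {
    throw new Error('Failed to load Frame Processor Plugin "scanFaces"!')
  }
  return (frame) => {
    'worklet'
    return plugin.call(frame)
  }
}
```

Resolving the plugin outside the Worklet keeps the lookup cost out of the per-Frame path.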

|

||||

|

||||

@@ -28,8 +30,8 @@ Simply call the wrapper Worklet in your Frame Processor:

|

||||

function App() {

|

||||

const frameProcessor = useFrameProcessor((frame) => {

|

||||

'worklet'

|

||||

const qrCodes = scanQRCodes(frame)

|

||||

console.log(`QR Codes in Frame: ${qrCodes}`)

|

||||

const faces = scanFaces(frame)

|

||||

console.log(`Faces in Frame: ${faces}`)

|

||||

}, [])

|

||||

|

||||

return (

|

||||

|

||||

@@ -10,7 +10,7 @@ import TabItem from '@theme/TabItem';

|

||||

## Creating a Frame Processor Plugin for Android

|

||||

|

||||

The Frame Processor Plugin API is built to be as extensible as possible, which allows you to create custom Frame Processor Plugins.

|

||||

In this guide we will create a custom QR Code Scanner Plugin which can be used from JS.

|

||||

In this guide we will create a custom Face Detector Plugin which can be used from JS.

|

||||

|

||||

Android Frame Processor Plugins can be written in either **Java**, **Kotlin** or **C++ (JNI)**.

|

||||

|

||||

@@ -23,7 +23,7 @@ npx vision-camera-plugin-builder android

|

||||

```

|

||||

|

||||

:::info

|

||||

The CLI will ask you for the path to project's Android Manifest file, name of the plugin (e.g. `QRCodeFrameProcessor`), name of the exposed method (e.g. `scanQRCodes`) and language you want to use for plugin development (Java or Kotlin).

|

||||

The CLI will ask you for the path to project's Android Manifest file, name of the plugin (e.g. `FaceDetectorFrameProcessorPlugin`), name of the exposed method (e.g. `detectFaces`) and language you want to use for plugin development (Java or Kotlin).

|

||||

For reference see the [CLI's docs](https://github.com/mateusz1913/vision-camera-plugin-builder#%EF%B8%8F-options).

|

||||

:::

|

||||

|

||||

@@ -35,7 +35,7 @@ For reference see the [CLI's docs](https://github.com/mateusz1913/vision-camera-

|

||||

@SuppressWarnings("UnnecessaryLocalVariable")

|

||||

List<ReactPackage> packages = new PackageList(this).getPackages();

|

||||

...

|

||||

packages.add(new QRCodeFrameProcessorPluginPackage()); // <- add

|

||||

packages.add(new FaceDetectorFrameProcessorPluginPackage()); // <- add

|

||||

return packages;

|

||||

}

|

||||

```

|

||||

@@ -51,33 +51,34 @@ For reference see the [CLI's docs](https://github.com/mateusz1913/vision-camera-

|

||||

<TabItem value="java">

|

||||

|

||||

1. Open your Project in Android Studio

|

||||

2. Create a Java source file, for the QR Code Plugin this will be called `QRCodeFrameProcessorPlugin.java`.

|

||||

2. Create a Java source file, for the Face Detector Plugin this will be called `FaceDetectorFrameProcessorPlugin.java`.

|

||||

3. Add the following code:

|

||||

|

||||

```java {8}

|

||||

import androidx.camera.core.ImageProxy;

|

||||

import com.mrousavy.camera.frameprocessor.FrameProcessorPlugin;

|

||||

|

||||

public class QRCodeFrameProcessorPlugin extends FrameProcessorPlugin {

|

||||

public class FaceDetectorFrameProcessorPlugin extends FrameProcessorPlugin {

|

||||

|

||||

@Override

|

||||

public Object callback(ImageProxy image, Object[] params) {

|

||||

public Object callback(ImageProxy image, ReadableNativeMap arguments) {

|

||||

// code goes here

|

||||

return null;

|

||||

}

|

||||

|

||||

QRCodeFrameProcessorPlugin() {

|

||||

super("scanQRCodes");

|

||||

@Override

|

||||

public String getName() {

|

||||

return "detectFaces";

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

:::note

|

||||

The Frame Processor Plugin will be exposed to JS through the `FrameProcessorPlugins` object using the name you pass to the `super(...)` call. In this case, it would be `FrameProcessorPlugins.scanQRCodes(...)`.

|

||||

The Frame Processor Plugin will be exposed to JS through the `VisionCameraProxy` object. In this case, it would be `VisionCameraProxy.getFrameProcessorPlugin("detectFaces")`.

|

||||

:::

|

||||

|

||||

4. **Implement your Frame Processing.** See the [Example Plugin (Java)](https://github.com/mrousavy/react-native-vision-camera/blob/main/example/android/app/src/main/java/com/mrousavy/camera/example/ExampleFrameProcessorPlugin.java) for reference.

|

||||

5. Create a new Java file which registers the Frame Processor Plugin in a React Package, for the QR Code Scanner plugin this file will be called `QRCodeFrameProcessorPluginPackage.java`:

|

||||

5. Create a new Java file which registers the Frame Processor Plugin in a React Package, for the Face Detector plugin this file will be called `FaceDetectorFrameProcessorPluginPackage.java`:

|

||||

|

||||

```java {12}

|

||||

import com.facebook.react.ReactPackage;

|

||||

@@ -87,11 +88,11 @@ import com.facebook.react.uimanager.ViewManager;

|

||||

import com.mrousavy.camera.frameprocessor.FrameProcessorPlugin;

|

||||

import javax.annotation.Nonnull;

|

||||

|

||||

public class QRCodeFrameProcessorPluginPackage implements ReactPackage {

|

||||

public class FaceDetectorFrameProcessorPluginPackage implements ReactPackage {

|

||||

@NonNull

|

||||

@Override

|

||||

public List<NativeModule> createNativeModules(@NonNull ReactApplicationContext reactContext) {

|

||||

FrameProcessorPlugin.register(new QRCodeFrameProcessorPlugin());

|

||||

FrameProcessorPlugin.register(new FaceDetectorFrameProcessorPlugin());

|

||||

return Collections.emptyList();

|

||||

}

|

||||

|

||||

@@ -111,7 +112,7 @@ public class QRCodeFrameProcessorPluginPackage implements ReactPackage {

|

||||

@SuppressWarnings("UnnecessaryLocalVariable")

|

||||

List<ReactPackage> packages = new PackageList(this).getPackages();

|

||||

...

|

||||

packages.add(new QRCodeFrameProcessorPluginPackage()); // <- add

|

||||

packages.add(new FaceDetectorFrameProcessorPluginPackage()); // <- add

|

||||

return packages;

|

||||

}

|

||||

```

|

||||

@@ -120,28 +121,32 @@ public class QRCodeFrameProcessorPluginPackage implements ReactPackage {

|

||||

<TabItem value="kotlin">

|

||||

|

||||

1. Open your Project in Android Studio

|

||||

2. Create a Kotlin source file, for the QR Code Plugin this will be called `QRCodeFrameProcessorPlugin.kt`.

|

||||

2. Create a Kotlin source file, for the Face Detector Plugin this will be called `FaceDetectorFrameProcessorPlugin.kt`.

|

||||

3. Add the following code:

|

||||

|

||||

```kotlin {7}

|

||||

import androidx.camera.core.ImageProxy

|

||||

import com.mrousavy.camera.frameprocessor.FrameProcessorPlugin

|

||||

|

||||

class ExampleFrameProcessorPluginKotlin: FrameProcessorPlugin("scanQRCodes") {

|

||||

class FaceDetectorFrameProcessorPlugin: FrameProcessorPlugin() {

|

||||

|

||||

override fun callback(image: ImageProxy, params: Array<Any>): Any? {

|

||||

override fun callback(image: ImageProxy, arguments: ReadableNativeMap): Any? {

|

||||

// code goes here

|

||||

return null

|

||||

}

|

||||

|

||||

override fun getName(): String {

|

||||

return "detectFaces"

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

:::note

|

||||

The Frame Processor Plugin will be exposed to JS through the `FrameProcessorPlugins` object using the name you pass to the `FrameProcessorPlugin(...)` call. In this case, it would be `FrameProcessorPlugins.scanQRCodes(...)`.

|

||||

The Frame Processor Plugin will be exposed to JS through the `VisionCameraProxy` object. In this case, it would be `VisionCameraProxy.getFrameProcessorPlugin("detectFaces")`.

|

||||

:::

|

||||

|

||||

4. **Implement your Frame Processing.** See the [Example Plugin (Java)](https://github.com/mrousavy/react-native-vision-camera/blob/main/example/android/app/src/main/java/com/mrousavy/camera/example/ExampleFrameProcessorPlugin.java) for reference.

|

||||

5. Create a new Kotlin file which registers the Frame Processor Plugin in a React Package, for the QR Code Scanner plugin this file will be called `QRCodeFrameProcessorPluginPackage.kt`:

|

||||

5. Create a new Kotlin file which registers the Frame Processor Plugin in a React Package, for the Face Detector plugin this file will be called `FaceDetectorFrameProcessorPluginPackage.kt`:

|

||||

|

||||

```kotlin {9}

|

||||

import com.facebook.react.ReactPackage

|

||||

@@ -150,9 +155,9 @@ import com.facebook.react.bridge.ReactApplicationContext

|

||||

import com.facebook.react.uimanager.ViewManager

|

||||

import com.mrousavy.camera.frameprocessor.FrameProcessorPlugin

|

||||

|

||||

class QRCodeFrameProcessorPluginPackage : ReactPackage {

|

||||

class FaceDetectorFrameProcessorPluginPackage : ReactPackage {

|

||||

override fun createNativeModules(reactContext: ReactApplicationContext): List<NativeModule> {

|

||||

FrameProcessorPlugin.register(ExampleFrameProcessorPluginKotlin())

|

||||

FrameProcessorPlugin.register(FaceDetectorFrameProcessorPlugin())

|

||||

return emptyList()

|

||||

}

|

||||

|

||||

@@ -170,7 +175,7 @@ class QRCodeFrameProcessorPluginPackage : ReactPackage {

|

||||

@SuppressWarnings("UnnecessaryLocalVariable")

|

||||

List<ReactPackage> packages = new PackageList(this).getPackages();

|

||||

...

|

||||

packages.add(new QRCodeFrameProcessorPluginPackage()); // <- add

|

||||

packages.add(new FaceDetectorFrameProcessorPluginPackage()); // <- add

|

||||

return packages;

|

||||

}

|

||||

```

|

||||

|

||||

@@ -10,7 +10,7 @@ import TabItem from '@theme/TabItem';

|

||||

## Creating a Frame Processor Plugin for iOS

|

||||

|

||||

The Frame Processor Plugin API is built to be as extensible as possible, which allows you to create custom Frame Processor Plugins.

|

||||

In this guide we will create a custom QR Code Scanner Plugin which can be used from JS.

|

||||

In this guide we will create a custom Face Detector Plugin which can be used from JS.

|

||||

|

||||

iOS Frame Processor Plugins can be written in either **Objective-C** or **Swift**.

|

||||

|

||||

@@ -23,7 +23,7 @@ npx vision-camera-plugin-builder ios

|

||||

```

|

||||

|

||||

:::info

|

||||

The CLI will ask you for the path to project's .xcodeproj file, name of the plugin (e.g. `QRCodeFrameProcessor`), name of the exposed method (e.g. `scanQRCodes`) and language you want to use for plugin development (Objective-C, Objective-C++ or Swift).

|

||||

The CLI will ask you for the path to project's .xcodeproj file, name of the plugin (e.g. `FaceDetectorFrameProcessorPlugin`), name of the exposed method (e.g. `detectFaces`) and language you want to use for plugin development (Objective-C, Objective-C++ or Swift).

|

||||

For reference see the [CLI's docs](https://github.com/mateusz1913/vision-camera-plugin-builder#%EF%B8%8F-options).

|

||||

:::

|

||||

|

||||

@@ -38,23 +38,25 @@ For reference see the [CLI's docs](https://github.com/mateusz1913/vision-camera-

|

||||

<TabItem value="objc">

|

||||

|

||||

1. Open your Project in Xcode

|

||||

2. Create an Objective-C source file, for the QR Code Plugin this will be called `QRCodeFrameProcessorPlugin.m`.

|

||||

2. Create an Objective-C source file, for the Face Detector Plugin this will be called `FaceDetectorFrameProcessorPlugin.m`.

|

||||

3. Add the following code:

|

||||

|

||||

```objc

|

||||

#import <VisionCamera/FrameProcessorPlugin.h>

|

||||

#import <VisionCamera/FrameProcessorPluginRegistry.h>

|

||||

#import <VisionCamera/Frame.h>

|

||||

|

||||

@interface QRCodeFrameProcessorPlugin : FrameProcessorPlugin

|

||||

@interface FaceDetectorFrameProcessorPlugin : FrameProcessorPlugin

|

||||

@end

|

||||

|

||||

@implementation QRCodeFrameProcessorPlugin

|

||||

@implementation FaceDetectorFrameProcessorPlugin

|

||||

|

||||

- (NSString *)name {

|

||||

return @"scanQRCodes";

|

||||

- (instancetype) initWithOptions:(NSDictionary*)options; {

|

||||

self = [super init];

|

||||

return self;

|

||||

}

|

||||

|

||||

- (id)callback:(Frame *)frame withArguments:(NSArray<id> *)arguments {

|

||||

- (id)callback:(Frame*)frame withArguments:(NSDictionary*)arguments {

|

||||

CMSampleBufferRef buffer = frame.buffer;

|

||||

UIImageOrientation orientation = frame.orientation;

|

||||

// code goes here

|

||||

@@ -62,14 +64,17 @@ For reference see the [CLI's docs](https://github.com/mateusz1913/vision-camera-

|

||||

}

|

||||

|

||||

+ (void) load {

|

||||

[self registerPlugin:[[ExampleFrameProcessorPlugin alloc] init]];

|

||||

[FrameProcessorPluginRegistry addFrameProcessorPlugin:@"detectFaces"

|

||||

withInitializer:^FrameProcessorPlugin*(NSDictionary* options) {

|

||||

return [[FaceDetectorFrameProcessorPlugin alloc] initWithOptions:options];

|

||||

}];

|

||||

}

|

||||

|

||||

@end

|

||||

```

|

||||

|

||||

:::note

|

||||

The Frame Processor Plugin will be exposed to JS through the `FrameProcessorPlugins` object using the name returned from the `name` getter. In this case, it would be `FrameProcessorPlugins.scanQRCodes(...)`.

|

||||

The Frame Processor Plugin will be exposed to JS through the `VisionCameraProxy` object. In this case, it would be `VisionCameraProxy.getFrameProcessorPlugin("detectFaces")`.

|

||||

:::
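Conceptually, the `+load` registration above maps a plugin name to an initializer that builds a plugin instance from an options dictionary. The following TypeScript sketch models that idea only. `PluginRegistry` and the stand-in types are hypothetical, and rejecting duplicate names is an assumption made for illustration, not documented behavior of `FrameProcessorPluginRegistry`:

```ts
// Stand-in types so the sketch is self-contained.
interface Frame {
  width: number
  height: number
}
type Options = Record<string, unknown>
interface FrameProcessorPlugin {
  call(frame: Frame, args?: Options): unknown
}
type PluginInitializer = (options: Options) => FrameProcessorPlugin

class PluginRegistry {
  private readonly initializers = new Map<string, PluginInitializer>()

  // Registers an initializer under a name (like addFrameProcessorPlugin:withInitializer:).
  addFrameProcessorPlugin(name: string, initializer: PluginInitializer): void {
    if (this.initializers.has(name)) {
      // Assumption: registering the same name twice is treated as an error.
      throw new Error(`Frame Processor Plugin "${name}" is already registered!`)
    }
    this.initializers.set(name, initializer)
  }

  // Creates a new plugin instance, or returns undefined if the name is unknown.
  getFrameProcessorPlugin(
    name: string,
    options: Options = {}
  ): FrameProcessorPlugin | undefined {
    const initializer = this.initializers.get(name)
    return initializer?.(options)
  }
}
```

Because the registry stores initializers rather than instances, every lookup can construct its own plugin object with its own options, which is what makes plugins "constructable" in the sense of the commit title.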

|

||||

|

||||

4. **Implement your Frame Processing.** See the [Example Plugin (Objective-C)](https://github.com/mrousavy/react-native-vision-camera/blob/main/example/ios/Frame%20Processor%20Plugins/Example%20Plugin%20%28Objective%2DC%29) for reference.

|

||||

@@ -78,7 +83,7 @@ The Frame Processor Plugin will be exposed to JS through the `FrameProcessorPlug

|

||||

<TabItem value="swift">

|

||||

|

||||

1. Open your Project in Xcode

|

||||

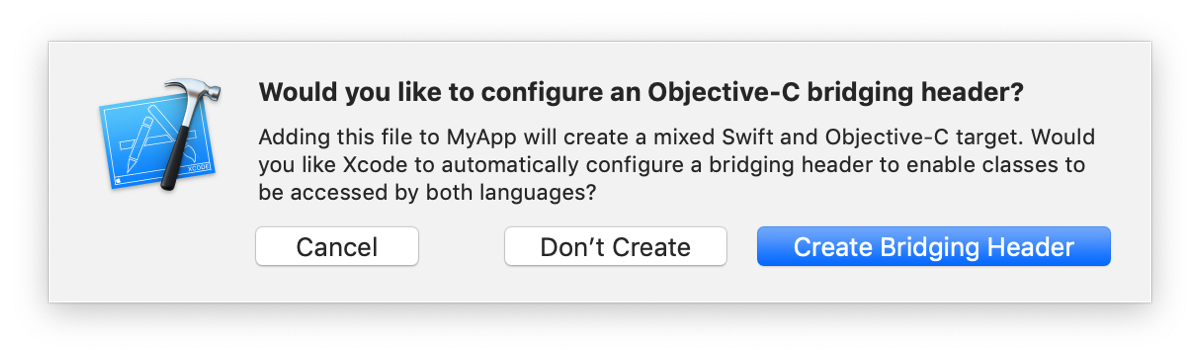

2. Create a Swift file, for the QR Code Plugin this will be `QRCodeFrameProcessorPlugin.swift`. If Xcode asks you to create a Bridging Header, press **create**.

|

||||

2. Create a Swift file, for the Face Detector Plugin this will be `FaceDetectorFrameProcessorPlugin.swift`. If Xcode asks you to create a Bridging Header, press **create**.

|

||||

|

||||

|

||||

|

||||

@@ -92,13 +97,9 @@ The Frame Processor Plugin will be exposed to JS through the `FrameProcessorPlug

|

||||

4. In the Swift file, add the following code:

|

||||

|

||||

```swift

|

||||

@objc(QRCodeFrameProcessorPlugin)

|

||||

public class QRCodeFrameProcessorPlugin: FrameProcessorPlugin {

|

||||

override public func name() -> String! {

|

||||

return "scanQRCodes"

|

||||

}

|

||||

|

||||

public override func callback(_ frame: Frame!, withArguments arguments: [Any]!) -> Any! {

|

||||

@objc(FaceDetectorFrameProcessorPlugin)

|

||||

public class FaceDetectorFrameProcessorPlugin: FrameProcessorPlugin {

|

||||

public override func callback(_ frame: Frame!, withArguments arguments: [String:Any]) -> Any {

|

||||

let buffer = frame.buffer

|

||||

let orientation = frame.orientation

|

||||

// code goes here

|

||||

@@ -107,11 +108,12 @@ public class QRCodeFrameProcessorPlugin: FrameProcessorPlugin {

|

||||

}

|

||||

```

|

||||

|

||||

5. In your `AppDelegate.m`, add the following imports (you can skip this if your AppDelegate is in Swift):

|

||||

5. In your `AppDelegate.m`, add the following imports:

|

||||

|

||||

```objc

|

||||

#import "YOUR_XCODE_PROJECT_NAME-Swift.h"

|

||||

#import <VisionCamera/FrameProcessorPlugin.h>

|

||||

#import <VisionCamera/FrameProcessorPluginRegistry.h>

|

||||

```

|

||||

|

||||

6. In your `AppDelegate.m`, add the following code to `application:didFinishLaunchingWithOptions:`:

|

||||

@@ -121,7 +123,10 @@ public class QRCodeFrameProcessorPlugin: FrameProcessorPlugin {

|

||||

{

|

||||

...

|

||||

|

||||

[FrameProcessorPlugin registerPlugin:[[QRCodeFrameProcessorPlugin alloc] init]];

|

||||

[FrameProcessorPluginRegistry addFrameProcessorPlugin:@"detectFaces"

|

||||

withInitializer:^FrameProcessorPlugin*(NSDictionary* options) {

|

||||

return [[FaceDetectorFrameProcessorPlugin alloc] initWithOptions:options];

|

||||

}];

|

||||

|

||||

return [super application:application didFinishLaunchingWithOptions:launchOptions];

|

||||

}

|

||||

|

||||

@@ -21,10 +21,10 @@ Before opening an issue, make sure you try the following:

|

||||

npm i # or "yarn"

|

||||

cd ios && pod repo update && pod update && pod install

|

||||

```

|

||||

2. Check your minimum iOS version. VisionCamera requires a minimum iOS version of **11.0**.

|

||||

2. Check your minimum iOS version. VisionCamera requires a minimum iOS version of **12.4**.

|

||||

1. Open your `Podfile`

|

||||

2. Make sure `platform :ios` is set to `11.0` or higher

|

||||

3. Make sure `iOS Deployment Target` is set to `11.0` or higher (`IPHONEOS_DEPLOYMENT_TARGET` in `project.pbxproj`)

|

||||

2. Make sure `platform :ios` is set to `12.4` or higher

|

||||

3. Make sure `iOS Deployment Target` is set to `12.4` or higher (`IPHONEOS_DEPLOYMENT_TARGET` in `project.pbxproj`)

|

||||

3. Check your Swift version. VisionCamera requires a minimum Swift version of **5.2**.

|

||||

1. Open `project.pbxproj` in a Text Editor

|

||||

2. If the `LIBRARY_SEARCH_PATHS` value is set, make sure there is no explicit reference to Swift-5.0. If there is, remove it. See [this StackOverflow answer](https://stackoverflow.com/a/66281846/1123156).

|

||||

@@ -35,9 +35,12 @@ Before opening an issue, make sure you try the following:

|

||||

3. Select **Swift File** and press **Next**

|

||||

4. Choose whatever name you want, e.g. `File.swift` and press **Create**

|

||||

5. Press **Create Bridging Header** when prompted.

|

||||

5. If you're having runtime issues, check the logs in Xcode to find out more. In Xcode, go to **View** > **Debug Area** > **Activate Console** (<kbd>⇧</kbd>+<kbd>⌘</kbd>+<kbd>C</kbd>).

|

||||

5. If you're having build issues, try:

|

||||

1. Building without Skia. Set `$VCDisableSkia = true` at the top of your Podfile, and try rebuilding.

|

||||

2. Building without Frame Processors. Set `$VCDisableFrameProcessors = true` at the top of your Podfile, and try rebuilding.

|

||||

6. If you're having runtime issues, check the logs in Xcode to find out more. In Xcode, go to **View** > **Debug Area** > **Activate Console** (<kbd>⇧</kbd>+<kbd>⌘</kbd>+<kbd>C</kbd>).

|

||||

* For errors without messages, there's often an error code attached. Look up the error code on [osstatus.com](https://www.osstatus.com) to get more information about a specific error.

|

||||

6. If your Frame Processor is not running, make sure you check the native Xcode logs to find out why. Also make sure you are not using a remote JS debugger such as Google Chrome, since those don't work with JSI.

|

||||

7. If your Frame Processor is not running, make sure you check the native Xcode logs to find out why. Also make sure you are not using a remote JS debugger such as Google Chrome, since those don't work with JSI.

|

||||

|

||||

## Android

|

||||

|

||||

@@ -64,9 +67,12 @@ Before opening an issue, make sure you try the following:

|

||||

```

|

||||

distributionUrl=https\://services.gradle.org/distributions/gradle-7.5.1-all.zip

|

||||

```

|

||||

5. If you're having runtime issues, check the logs in Android Studio/Logcat to find out more. In Android Studio, go to **View** > **Tool Windows** > **Logcat** (<kbd>⌘</kbd>+<kbd>6</kbd>) or run `adb logcat` in Terminal.

|

||||

6. If a camera device is not being returned by [`Camera.getAvailableCameraDevices()`](/docs/api/classes/Camera#getavailablecameradevices), make sure it is a Camera2 compatible device. See [this section in the Android docs](https://developer.android.com/reference/android/hardware/camera2/CameraDevice#reprocessing) for more information.

|

||||

7. If your Frame Processor is not running, make sure you check the native Android Studio/Logcat logs to find out why. Also make sure you are not using a remote JS debugger such as Google Chrome, since those don't work with JSI.

|

||||

5. If you're having build issues, try:

|

||||

1. Building without Skia. Set `disableSkia = true` in your `gradle.properties`, and try rebuilding.

|

||||

2. Building without Frame Processors. Set `disableFrameProcessors = true` in your `gradle.properties`, and try rebuilding.

|

||||

6. If you're having runtime issues, check the logs in Android Studio/Logcat to find out more. In Android Studio, go to **View** > **Tool Windows** > **Logcat** (<kbd>⌘</kbd>+<kbd>6</kbd>) or run `adb logcat` in Terminal.

|

||||

7. If a camera device is not being returned by [`Camera.getAvailableCameraDevices()`](/docs/api/classes/Camera#getavailablecameradevices), make sure it is a Camera2 compatible device. See [this section in the Android docs](https://developer.android.com/reference/android/hardware/camera2/CameraDevice#reprocessing) for more information.

|

||||

8. If your Frame Processor is not running, make sure you check the native Android Studio/Logcat logs to find out why. Also make sure you are not using a remote JS debugger such as Google Chrome, since those don't work with JSI.

|

||||

|

||||

## Issues

|

||||

|

||||

|

||||

BIN

docs/static/img/slow-log-2.png

vendored

Binary file not shown.

|

Before Width: | Height: | Size: 20 KiB |

BIN

docs/static/img/slow-log.png

vendored

Binary file not shown.

|

Before Width: | Height: | Size: 29 KiB |

@@ -1,48 +0,0 @@

|

||||

//

|

||||

// ExamplePluginSwift.swift

|

||||

// VisionCamera

|

||||

//

|

||||

// Created by Marc Rousavy on 30.04.21.

|

||||

// Copyright © 2021 mrousavy. All rights reserved.

|

||||

//

|

||||

|

||||

import AVKit

|

||||

import Vision

|

||||

|

||||

#if VISION_CAMERA_ENABLE_FRAME_PROCESSORS

|

||||

@objc

|

||||

public class ExamplePluginSwift : FrameProcessorPlugin {

|

||||

|

||||

override public func name() -> String! {

|

||||

return "example_plugin_swift"

|

||||

}

|

||||

|

||||

public override func callback(_ frame: Frame!, withArguments arguments: [Any]!) -> Any! {

|

||||

guard let imageBuffer = CMSampleBufferGetImageBuffer(frame.buffer) else {

|

||||

return nil

|

||||

}

|

||||

NSLog("ExamplePlugin: \(CVPixelBufferGetWidth(imageBuffer)) x \(CVPixelBufferGetHeight(imageBuffer)) Image. Logging \(arguments.count) parameters:")

|

||||

|

||||

arguments.forEach { arg in

|

||||

var string = "\(arg)"

|

||||

if let array = arg as? NSArray {

|

||||

string = (array as Array).description

|

||||

} else if let map = arg as? NSDictionary {

|

||||

string = (map as Dictionary).description

|

||||

}

|

||||

NSLog("ExamplePlugin: -> \(string) (\(type(of: arg)))")

|

||||

}

|

||||

|

||||

return [

|

||||

"example_str": "Test",

|

||||

"example_bool": true,

|

||||

"example_double": 5.3,

|

||||

"example_array": [

|

||||

"Hello",

|

||||

true,

|

||||

17.38,

|

||||

],

|

||||

]

|

||||

}

|

||||

}

|

||||

#endif

|

||||

@@ -8,6 +8,7 @@

|

||||

#if __has_include(<VisionCamera/FrameProcessorPlugin.h>)

|

||||

#import <Foundation/Foundation.h>

|

||||

#import <VisionCamera/FrameProcessorPlugin.h>

|

||||

#import <VisionCamera/FrameProcessorPluginRegistry.h>

|

||||

#import <VisionCamera/Frame.h>

|

||||

|

||||

// Example for an Objective-C Frame Processor plugin

|

||||

@@ -17,18 +18,14 @@

|

||||

|

||||

@implementation ExampleFrameProcessorPlugin

|

||||

|

||||

- (NSString *)name {

|

||||

return @"example_plugin";

|

||||

}

|

||||

|

||||

- (id)callback:(Frame *)frame withArguments:(NSArray<id> *)arguments {

|

||||

CVPixelBufferRef imageBuffer = CMSampleBufferGetImageBuffer(frame.buffer);

|

||||

NSLog(@"ExamplePlugin: %zu x %zu Image. Logging %lu parameters:", CVPixelBufferGetWidth(imageBuffer), CVPixelBufferGetHeight(imageBuffer), (unsigned long)arguments.count);

|

||||

|

||||

|

||||

for (id param in arguments) {

|

||||

NSLog(@"ExamplePlugin: -> %@ (%@)", param == nil ? @"(nil)" : [param description], NSStringFromClass([param classForCoder]));

|

||||

}

|

||||

|

||||

|

||||

return @{

|

||||

@"example_str": @"Test",

|

||||

@"example_bool": @true,

|

||||

@@ -42,7 +39,10 @@

|

||||

}

|

||||

|

||||

+ (void) load {

|

||||

[self registerPlugin:[[ExampleFrameProcessorPlugin alloc] init]];

|

||||

[FrameProcessorPluginRegistry addFrameProcessorPlugin:@"example_plugin"

|

||||

withInitializer:^FrameProcessorPlugin*(NSDictionary* options) {

|

||||

return [[ExampleFrameProcessorPlugin alloc] initWithOptions:options];

|

||||

}];

|

||||

}

|

||||

|

||||

@end

|

||||

@@ -713,7 +713,7 @@ SPEC CHECKSUMS:

|

||||

RNStaticSafeAreaInsets: 055ddbf5e476321720457cdaeec0ff2ba40ec1b8

|

||||

RNVectorIcons: fcc2f6cb32f5735b586e66d14103a74ce6ad61f8

|

||||

SocketRocket: f32cd54efbe0f095c4d7594881e52619cfe80b17

|

||||

VisionCamera: b4e91836f577249470ae42707782f4b44d875cd9

|

||||

VisionCamera: 29727c3ed48328b246e3a7448f7c14cc12d2fd11

|

||||

Yoga: 65286bb6a07edce5e4fe8c90774da977ae8fc009

|

||||

|

||||

PODFILE CHECKSUM: ab9c06b18c63e741c04349c0fd630c6d3145081c

|

||||

|

||||

@@ -13,7 +13,6 @@

|

||||

81AB9BB82411601600AC10FF /* LaunchScreen.storyboard in Resources */ = {isa = PBXBuildFile; fileRef = 81AB9BB72411601600AC10FF /* LaunchScreen.storyboard */; };

|

||||

B8DB3BD5263DE8B7004C18D7 /* BuildFile in Sources */ = {isa = PBXBuildFile; };

|

||||

B8DB3BDC263DEA31004C18D7 /* ExampleFrameProcessorPlugin.m in Sources */ = {isa = PBXBuildFile; fileRef = B8DB3BD8263DEA31004C18D7 /* ExampleFrameProcessorPlugin.m */; };

|

||||

B8DB3BDD263DEA31004C18D7 /* ExamplePluginSwift.swift in Sources */ = {isa = PBXBuildFile; fileRef = B8DB3BDA263DEA31004C18D7 /* ExamplePluginSwift.swift */; };

|

||||

B8F0E10825E0199F00586F16 /* File.swift in Sources */ = {isa = PBXBuildFile; fileRef = B8F0E10725E0199F00586F16 /* File.swift */; };

|

||||

C0B129659921D2EA967280B2 /* libPods-VisionCameraExample.a in Frameworks */ = {isa = PBXBuildFile; fileRef = 3CDCFE89C25C89320B98945E /* libPods-VisionCameraExample.a */; };

|

||||

/* End PBXBuildFile section */

|

||||

@@ -29,7 +28,6 @@

|

||||

3CDCFE89C25C89320B98945E /* libPods-VisionCameraExample.a */ = {isa = PBXFileReference; explicitFileType = archive.ar; includeInIndex = 0; path = "libPods-VisionCameraExample.a"; sourceTree = BUILT_PRODUCTS_DIR; };

|

||||

81AB9BB72411601600AC10FF /* LaunchScreen.storyboard */ = {isa = PBXFileReference; fileEncoding = 4; lastKnownFileType = file.storyboard; name = LaunchScreen.storyboard; path = VisionCameraExample/LaunchScreen.storyboard; sourceTree = "<group>"; };

|

||||

B8DB3BD8263DEA31004C18D7 /* ExampleFrameProcessorPlugin.m */ = {isa = PBXFileReference; fileEncoding = 4; lastKnownFileType = sourcecode.c.objc; path = ExampleFrameProcessorPlugin.m; sourceTree = "<group>"; };

|

||||

B8DB3BDA263DEA31004C18D7 /* ExamplePluginSwift.swift */ = {isa = PBXFileReference; fileEncoding = 4; lastKnownFileType = sourcecode.swift; path = ExamplePluginSwift.swift; sourceTree = "<group>"; };

|

||||

B8F0E10625E0199F00586F16 /* VisionCameraExample-Bridging-Header.h */ = {isa = PBXFileReference; lastKnownFileType = sourcecode.c.h; path = "VisionCameraExample-Bridging-Header.h"; sourceTree = "<group>"; };

|

||||

B8F0E10725E0199F00586F16 /* File.swift */ = {isa = PBXFileReference; lastKnownFileType = sourcecode.swift; path = File.swift; sourceTree = "<group>"; };

|

||||

C1D342AD8210E7627A632602 /* Pods-VisionCameraExample.debug.xcconfig */ = {isa = PBXFileReference; includeInIndex = 1; lastKnownFileType = text.xcconfig; name = "Pods-VisionCameraExample.debug.xcconfig"; path = "Target Support Files/Pods-VisionCameraExample/Pods-VisionCameraExample.debug.xcconfig"; sourceTree = "<group>"; };

|

||||

@@ -118,26 +116,17 @@

|

||||

B8DB3BD6263DEA31004C18D7 /* Frame Processor Plugins */ = {

|

||||

isa = PBXGroup;

|

||||

children = (

|

||||

B8DB3BD7263DEA31004C18D7 /* Example Plugin (Objective-C) */,

|

||||

B8DB3BD9263DEA31004C18D7 /* Example Plugin (Swift) */,

|

||||

B8DB3BD7263DEA31004C18D7 /* Example Plugin */,

|

||||

);

|

||||

path = "Frame Processor Plugins";

|

||||

sourceTree = "<group>";

|

||||

};

|

||||

B8DB3BD7263DEA31004C18D7 /* Example Plugin (Objective-C) */ = {

|

||||

B8DB3BD7263DEA31004C18D7 /* Example Plugin */ = {

|

||||

isa = PBXGroup;

|

||||

children = (

|

||||

B8DB3BD8263DEA31004C18D7 /* ExampleFrameProcessorPlugin.m */,

|

||||

);

|

||||

path = "Example Plugin (Objective-C)";

|

||||

sourceTree = "<group>";

|

||||

};

|

||||

B8DB3BD9263DEA31004C18D7 /* Example Plugin (Swift) */ = {

|

||||

isa = PBXGroup;

|

||||

children = (

|

||||

B8DB3BDA263DEA31004C18D7 /* ExamplePluginSwift.swift */,

|

||||

);

|

||||

path = "Example Plugin (Swift)";

|

||||

path = "Example Plugin";

|

||||

sourceTree = "<group>";

|

||||

};

|

||||

/* End PBXGroup section */

|

||||

@@ -381,7 +370,6 @@

|

||||

13B07FBC1A68108700A75B9A /* AppDelegate.mm in Sources */,

|

||||

B8DB3BDC263DEA31004C18D7 /* ExampleFrameProcessorPlugin.m in Sources */,

|

||||

B8DB3BD5263DE8B7004C18D7 /* BuildFile in Sources */,

|

||||

B8DB3BDD263DEA31004C18D7 /* ExamplePluginSwift.swift in Sources */,

|

||||

B8F0E10825E0199F00586F16 /* File.swift in Sources */,

|

||||

13B07FC11A68108700A75B9A /* main.m in Sources */,

|

||||

);

|

||||

|

||||

@@ -1,16 +1,17 @@

|

||||

import { FrameProcessorPlugins, Frame } from 'react-native-vision-camera';

|

||||

import { VisionCameraProxy, Frame } from 'react-native-vision-camera';

|

||||

|

||||

export function examplePluginSwift(frame: Frame): string[] {

|

||||

'worklet';

|

||||

// @ts-expect-error because this function is dynamically injected by VisionCamera

|

||||

return FrameProcessorPlugins.example_plugin_swift(frame, 'hello!', 'parameter2', true, 42, { test: 0, second: 'test' }, [

|

||||

'another test',

|

||||

5,

|

||||

]);

|

||||

}

|

||||

const plugin = VisionCameraProxy.getFrameProcessorPlugin('example_plugin');

|

||||

|

||||

export function examplePlugin(frame: Frame): string[] {

|

||||

'worklet';

|

||||

// @ts-expect-error because this function is dynamically injected by VisionCamera

|

||||

return FrameProcessorPlugins.example_plugin(frame, 'hello!', 'parameter2', true, 42, { test: 0, second: 'test' }, ['another test', 5]);

|

||||

|

||||

if (plugin == null) throw new Error('Failed to load Frame Processor Plugin "example_plugin"!');

|

||||

|

||||

return plugin.call(frame, {

|

||||

someString: 'hello!',

|

||||

someBoolean: true,

|

||||

someNumber: 42,

|

||||

someObject: { test: 0, second: 'test' },

|

||||

someArray: ['another test', 5],

|

||||

}) as string[];

|

||||

}
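
The wrapper above resolves the plugin once via `VisionCameraProxy.getFrameProcessorPlugin(...)` and then forwards a single options object through `plugin.call(frame, options)`. The sketch below restates that pattern in a form that runs outside of an app. It is only an illustration: `Frame`, `FrameProcessorPlugin`, `PluginProxy`, `createPluginCaller` and `fakeProxy` are hypothetical stand-ins declared locally, not the types exported by `react-native-vision-camera`, and the `'worklet'` directive is omitted because nothing here runs on a worklet runtime.

```typescript
// Stand-in types for this sketch only; the real ones come from the library.
interface Frame {
  width: number;
  height: number;
}

interface FrameProcessorPlugin {
  call(frame: Frame, options?: Record<string, unknown>): unknown;
}

interface PluginProxy {
  getFrameProcessorPlugin(name: string): FrameProcessorPlugin | undefined;
}

// Hypothetical helper: look the plugin up once and fail fast if it is missing,
// then return a typed function that forwards the frame and the options object.
function createPluginCaller<T>(proxy: PluginProxy, name: string) {
  const plugin = proxy.getFrameProcessorPlugin(name);
  if (plugin == null) {
    throw new Error(`Failed to load Frame Processor Plugin "${name}"!`);
  }
  return (frame: Frame, options?: Record<string, unknown>): T =>
    plugin.call(frame, options) as T;
}

// A fake proxy so the sketch can run without native code.
const fakeProxy: PluginProxy = {
  getFrameProcessorPlugin: (name) =>
    name === "example_plugin"
      ? {
          call: (frame, options) => [
            `${frame.width}x${frame.height}`,
            String(options?.someString),
          ],
        }
      : undefined,
};

const examplePlugin = createPluginCaller<string[]>(fakeProxy, "example_plugin");
```

Resolving the plugin outside the per-frame function keeps the lookup off the hot path, which matches how the wrapper in this file is structured.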

|

||||

|

||||

@@ -17,6 +17,6 @@

|

||||

|

||||

#if VISION_CAMERA_ENABLE_FRAME_PROCESSORS

|

||||

#import "FrameProcessor.h"

|

||||

#import "FrameProcessorRuntimeManager.h"

|

||||

#import "Frame.h"

|

||||

#import "VisionCameraProxy.h"

|

||||

#endif

|

||||

|

||||

@@ -13,10 +13,6 @@ import Foundation

|

||||

final class CameraViewManager: RCTViewManager {

|

||||

// pragma MARK: Properties

|

||||

|

||||

#if VISION_CAMERA_ENABLE_FRAME_PROCESSORS

|

||||

private var runtimeManager: FrameProcessorRuntimeManager?

|

||||

#endif

|

||||

|

||||

override var methodQueue: DispatchQueue! {

|

||||

return DispatchQueue.main

|

||||

}

|

||||

@@ -34,10 +30,9 @@ final class CameraViewManager: RCTViewManager {

|

||||

@objc

|

||||

final func installFrameProcessorBindings() -> NSNumber {

|

||||

#if VISION_CAMERA_ENABLE_FRAME_PROCESSORS

|

||||

// Runs on JS Thread

|

||||

runtimeManager = FrameProcessorRuntimeManager()

|

||||

runtimeManager!.installFrameProcessorBindings()

|

||||

return true as NSNumber

|

||||

// Called on JS Thread (blocking sync method)

|

||||

let result = VisionCameraInstaller.install(to: bridge)

|

||||

return NSNumber(value: result)

|

||||

#else

|

||||

return false as NSNumber

|

||||

#endif

|

||||

|

||||

@@ -22,8 +22,8 @@

|

||||

@interface FrameProcessor : NSObject

|

||||

|

||||

#ifdef __cplusplus

|

||||

- (instancetype _Nonnull)initWithWorklet:(std::shared_ptr<RNWorklet::JsiWorkletContext>)context

|

||||

worklet:(std::shared_ptr<RNWorklet::JsiWorklet>)worklet;

|

||||

- (instancetype _Nonnull)initWithWorklet:(std::shared_ptr<RNWorklet::JsiWorklet>)worklet

|

||||

context:(std::shared_ptr<RNWorklet::JsiWorkletContext>)context;

|

||||

|

||||

- (void)callWithFrameHostObject:(std::shared_ptr<FrameHostObject>)frameHostObject;

|

||||

#endif

|

||||

|

||||

@@ -21,11 +21,11 @@ using namespace facebook;

|

||||

std::shared_ptr<RNWorklet::WorkletInvoker> _workletInvoker;

|

||||

}

|

||||

|

||||

- (instancetype)initWithWorklet:(std::shared_ptr<RNWorklet::JsiWorkletContext>)context

|

||||

worklet:(std::shared_ptr<RNWorklet::JsiWorklet>)worklet {

|

||||

- (instancetype)initWithWorklet:(std::shared_ptr<RNWorklet::JsiWorklet>)worklet

|

||||

context:(std::shared_ptr<RNWorklet::JsiWorkletContext>)context {

|

||||

if (self = [super init]) {

|

||||

_workletContext = context;

|

||||

_workletInvoker = std::make_shared<RNWorklet::WorkletInvoker>(worklet);

|

||||

_workletContext = context;

|

||||

}

|

||||

return self;

|

||||

}

|

||||

|

||||

@@ -15,18 +15,24 @@

|

||||

///

|

||||

/// Subclass this class in a Swift or Objective-C class and override the `callback:withArguments:` method, and

|

||||

/// implement your Frame Processing there.

|

||||

/// Then, in your App's startup (AppDelegate.m), call `FrameProcessorPluginBase.registerPlugin(YourNewPlugin())`

|

||||

///

|

||||

/// Use `[FrameProcessorPluginRegistry addFrameProcessorPlugin:]` to register the Plugin to the VisionCamera Runtime.

|

||||

@interface FrameProcessorPlugin : NSObject

|

||||

|

||||

/// Get the name of the Frame Processor Plugin.

|

||||

/// This will be exposed to JS under the `FrameProcessorPlugins` Proxy object.

|

||||

- (NSString * _Nonnull)name;

|

||||

|

||||

/// The actual callback when calling this plugin. Any Frame Processing should be handled there.

|

||||

/// Make sure your code is optimized, as this is a hot path.

|

||||

- (id _Nullable) callback:(Frame* _Nonnull)frame withArguments:(NSArray<id>* _Nullable)arguments;

|

||||

|

||||

/// Register the given plugin in the Plugin Registry. This should be called on App Startup.

|

||||

+ (void) registerPlugin:(FrameProcessorPlugin* _Nonnull)plugin;

|

||||

- (id _Nullable) callback:(Frame* _Nonnull)frame withArguments:(NSDictionary* _Nullable)arguments;

|

||||

|

||||

@end

|

||||

|

||||

|

||||

// Base implementation (empty)

|

||||

@implementation FrameProcessorPlugin

|

||||

|

||||

- (id _Nullable)callback:(Frame* _Nonnull)frame withArguments:(NSDictionary* _Nullable)arguments {

|

||||

[NSException raise:NSInternalInconsistencyException

|

||||

format:@"Frame Processor Plugin does not override the `callback(frame:withArguments:)` method!"];

|

||||

return nil;

|

||||

}

|

||||

|

||||

@end

|

||||

|

||||

@@ -1,31 +0,0 @@

|

||||

//

|

||||

// FrameProcessorPlugin.m

|

||||

// VisionCamera

|

||||

//

|

||||

// Created by Marc Rousavy on 24.02.23.

|

||||

// Copyright © 2023 mrousavy. All rights reserved.

|

||||

//

|

||||

|

||||

#import <Foundation/Foundation.h>

|

||||

#import "FrameProcessorPlugin.h"

|

||||

#import "FrameProcessorPluginRegistry.h"

|

||||

|

||||

@implementation FrameProcessorPlugin

|

||||

|

||||

- (NSString *)name {

|

||||

[NSException raise:NSInternalInconsistencyException

|

||||

format:@"Frame Processor Plugin \"%@\" does not override the `name` getter!", [self name]];

|

||||

return nil;

|

||||

}

|

||||

|

||||

- (id _Nullable)callback:(Frame* _Nonnull)frame withArguments:(NSArray<id>* _Nullable)arguments {

|

||||

[NSException raise:NSInternalInconsistencyException

|

||||

format:@"Frame Processor Plugin \"%@\" does not override the `callback(frame:withArguments:)` method!", [self name]];

|

||||

return nil;

|

||||

}

|

||||

|

||||

+ (void)registerPlugin:(FrameProcessorPlugin* _Nonnull)plugin {

|

||||

[FrameProcessorPluginRegistry addFrameProcessorPlugin:plugin];

|

||||

}

|

||||

|

||||

@end

|

||||

32

ios/Frame Processor/FrameProcessorPluginHostObject.h

Normal file

@@ -0,0 +1,32 @@

|

||||

//

|

||||

// FrameProcessorPluginHostObject.h

|

||||

// VisionCamera

|

||||

//

|

||||

// Created by Marc Rousavy on 21.07.23.

|

||||

// Copyright © 2023 mrousavy. All rights reserved.

|

||||

//

|

||||

|

||||

#pragma once

|

||||

|

||||

#import <jsi/jsi.h>

|

||||

#import "FrameProcessorPlugin.h"

|

||||

#import <memory>

|

||||

#import <ReactCommon/CallInvoker.h>

|

||||

|

||||

using namespace facebook;

|

||||

|

||||

class FrameProcessorPluginHostObject: public jsi::HostObject {

|

||||

public:

|

||||

explicit FrameProcessorPluginHostObject(FrameProcessorPlugin* plugin,

|

||||

std::shared_ptr<react::CallInvoker> callInvoker):

|

||||

_plugin(plugin), _callInvoker(callInvoker) { }

|

||||

~FrameProcessorPluginHostObject() { }

|

||||

|

||||

public:

|

||||

std::vector<jsi::PropNameID> getPropertyNames(jsi::Runtime& runtime) override;

|

||||

jsi::Value get(jsi::Runtime& runtime, const jsi::PropNameID& name) override;

|

||||

|

||||

private:

|

||||

FrameProcessorPlugin* _plugin;

|

||||

std::shared_ptr<react::CallInvoker> _callInvoker;

|

||||

};

|

||||

52

ios/Frame Processor/FrameProcessorPluginHostObject.mm

Normal file

@@ -0,0 +1,52 @@

|

||||

//

|

||||

// FrameProcessorPluginHostObject.mm

|

||||

// VisionCamera

|

||||

//

|

||||

// Created by Marc Rousavy on 21.07.23.

|

||||

// Copyright © 2023 mrousavy. All rights reserved.

|

||||

//

|

||||

|

||||

#import "FrameProcessorPluginHostObject.h"

|

||||

#import <Foundation/Foundation.h>

|

||||

#import <vector>

|

||||

#import "FrameHostObject.h"

|

||||

#import "JSINSObjectConversion.h"

|

||||

|

||||

std::vector<jsi::PropNameID> FrameProcessorPluginHostObject::getPropertyNames(jsi::Runtime& runtime) {

|

||||

std::vector<jsi::PropNameID> result;

|

||||

result.push_back(jsi::PropNameID::forUtf8(runtime, std::string("call")));

|

||||

return result;

|

||||

}

|

||||

|

||||

jsi::Value FrameProcessorPluginHostObject::get(jsi::Runtime& runtime, const jsi::PropNameID& propName) {

|

||||

auto name = propName.utf8(runtime);

|

||||

|

||||

if (name == "call") {

|

||||

return jsi::Function::createFromHostFunction(runtime,

|

||||

jsi::PropNameID::forUtf8(runtime, "call"),

|

||||

2,

|

||||

[=](jsi::Runtime& runtime,

|

||||

const jsi::Value& thisValue,

|

||||

const jsi::Value* arguments,

|

||||

size_t count) -> jsi::Value {

|

||||

// Frame is first argument

|

||||

auto frameHostObject = arguments[0].asObject(runtime).asHostObject<FrameHostObject>(runtime);

|

||||

Frame* frame = frameHostObject->frame;

|

||||

|

||||

// Options are second argument (possibly undefined)

|

||||

NSDictionary* options = nil;

|

||||

if (count > 1) {

|

||||

auto optionsObject = arguments[1].asObject(runtime);

|

||||

options = JSINSObjectConversion::convertJSIObjectToNSDictionary(runtime, optionsObject, _callInvoker);

|

||||

}

|

||||

|

||||

// Call actual Frame Processor Plugin

|

||||

id result = [_plugin callback:frame withArguments:options];

|

||||

|

||||

// Convert result value to jsi::Value (possibly undefined)

|

||||

return JSINSObjectConversion::convertObjCObjectToJSIValue(runtime, result);

|

||||

});

|

||||

}

|

||||

|

||||

return jsi::Value::undefined();

|

||||

}

|

||||

@@ -14,7 +14,12 @@

|

||||

|

||||

@interface FrameProcessorPluginRegistry : NSObject

|

||||

|

||||

+ (NSMutableDictionary<NSString*, FrameProcessorPlugin*>*)frameProcessorPlugins;

|

||||

+ (void) addFrameProcessorPlugin:(FrameProcessorPlugin* _Nonnull)plugin;

|

||||

typedef FrameProcessorPlugin* _Nonnull (^PluginInitializerFunction)(NSDictionary* _Nullable options);

|

||||

|

||||

+ (void)addFrameProcessorPlugin:(NSString* _Nonnull)name

|

||||

withInitializer:(PluginInitializerFunction _Nonnull)pluginInitializer;

|

||||

|

||||

+ (FrameProcessorPlugin* _Nullable)getPlugin:(NSString* _Nonnull)name

|

||||

withOptions:(NSDictionary* _Nullable)options;

|

||||

|

||||

@end
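
The registry interface above no longer stores plugin instances. It maps a name to an initializer block, and `getPlugin:withOptions:` asks for a plugin by name together with its options. A minimal TypeScript model of that idea is sketched below. Everything in it (`PluginRegistry`, `Plugin`, `PluginInitializer`, the `mode` option) is hypothetical and exists only for illustration. Returning `undefined` for an unknown name is an assumption based on the `_Nullable` return type, and the duplicate check throws where the Objective-C code uses `NSAssert`.

```typescript
// Hypothetical model of a name -> initializer registry; not the library's API.
type PluginOptions = Record<string, unknown> | undefined;

interface Plugin {
  callback(frame: unknown, args?: Record<string, unknown>): unknown;
}

type PluginInitializer = (options: PluginOptions) => Plugin;

class PluginRegistry {
  private readonly initializers = new Map<string, PluginInitializer>();

  // Models addFrameProcessorPlugin:withInitializer: and rejects duplicate names.
  add(name: string, initializer: PluginInitializer): void {
    if (this.initializers.has(name)) {
      throw new Error(`A plugin named "${name}" is already registered`);
    }
    this.initializers.set(name, initializer);
  }

  // Models getPlugin:withOptions: by running the stored initializer.
  // Assumption: unknown names yield undefined instead of an error.
  get(name: string, options?: PluginOptions): Plugin | undefined {
    const initializer = this.initializers.get(name);
    return initializer?.(options);
  }
}

const registry = new PluginRegistry();
registry.add("detectFaces", (options) => ({
  // Each instance remembers the options it was constructed with.
  callback: (_frame, args) => ({ configuredWith: options, calledWith: args }),
}));
```

Because the registry holds initializers rather than instances, each lookup in this model produces a fresh plugin configured with its own options.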

|

||||

|

||||

@@ -1,5 +1,5 @@

|

||||

//

|

||||

// FrameProcessorPluginRegistry.mm

|

||||

// FrameProcessorPluginRegistry.m

|

||||

// VisionCamera

|

||||

//

|

||||

// Created by Marc Rousavy on 24.03.21.

|

||||

@@ -11,19 +11,28 @@

|

||||

|

||||

@implementation FrameProcessorPluginRegistry

|

||||

|

||||

+ (NSMutableDictionary<NSString*, FrameProcessorPlugin*>*)frameProcessorPlugins {

|

||||

static NSMutableDictionary<NSString*, FrameProcessorPlugin*>* plugins = nil;

|

||||

+ (NSMutableDictionary<NSString*, PluginInitializerFunction>*)frameProcessorPlugins {

|

||||

static NSMutableDictionary<NSString*, PluginInitializerFunction>* plugins = nil;

|

||||

if (plugins == nil) {

|

||||

plugins = [[NSMutableDictionary alloc] init];

|

||||

}

|

||||

return plugins;

|

||||

}

|

||||

|

||||

+ (void) addFrameProcessorPlugin:(FrameProcessorPlugin*)plugin {

|

||||

BOOL alreadyExists = [[FrameProcessorPluginRegistry frameProcessorPlugins] valueForKey:plugin.name] != nil;

|

||||

NSAssert(!alreadyExists, @"Tried to add a Frame Processor Plugin with a name that already exists! Either choose unique names, or remove the unused plugin. Name: %@", plugin.name);

|

||||

+ (void) addFrameProcessorPlugin:(NSString *)name withInitializer:(PluginInitializerFunction)pluginInitializer {

|

||||

BOOL alreadyExists = [[FrameProcessorPluginRegistry frameProcessorPlugins] valueForKey:name] != nil;

|

||||

NSAssert(!alreadyExists, @"Tried to add a Frame Processor Plugin with a name that already exists! Either choose unique names, or remove the unused plugin. Name: %@", name);

|

||||