---
id: frame-processors-plugins-ios
title: Creating Frame Processor Plugins
sidebar_label: Creating Frame Processor Plugins (iOS)
---

import Tabs from '@theme/Tabs'
import TabItem from '@theme/TabItem'

## Creating a Frame Processor Plugin for iOS

The Frame Processor Plugin API is built to be as extensible as possible, which allows you to create custom Frame Processor Plugins.
In this guide we will create a custom Face Detector Plugin which can be used from JS.

iOS Frame Processor Plugins can be written in either **Objective-C** or **Swift**.

### Automatic setup

Run the [Vision Camera Plugin Builder CLI](https://github.com/mateusz1913/vision-camera-plugin-builder):

```sh
npx vision-camera-plugin-builder ios
```

:::info
The CLI will ask you for the path to your project's `.xcodeproj` file, the name of the plugin (e.g. `FaceDetectorFrameProcessorPlugin`), the name of the exposed method (e.g. `detectFaces`), and the language you want to use for plugin development (Objective-C, Objective-C++, or Swift).
For reference, see the [CLI's docs](https://github.com/mateusz1913/vision-camera-plugin-builder#%EF%B8%8F-options).
:::

### Manual setup

<Tabs
  defaultValue="objc"
  values={[
    {label: 'Objective-C', value: 'objc'},
    {label: 'Swift', value: 'swift'}
  ]}>
<TabItem value="objc">

1. Open your project in Xcode.
2. Create an Objective-C source file. For the Face Detector Plugin, this will be called `FaceDetectorFrameProcessorPlugin.m`.
3. Add the following code:

```objc
#import <VisionCamera/FrameProcessorPlugin.h>
#import <VisionCamera/FrameProcessorPluginRegistry.h>
#import <VisionCamera/Frame.h>

@interface FaceDetectorFrameProcessorPlugin : FrameProcessorPlugin
@end

@implementation FaceDetectorFrameProcessorPlugin

- (instancetype)initWithOptions:(NSDictionary*)options {
  self = [super init];
  if (self) {
    // One-time setup (e.g. creating a detector) can go here.
  }
  return self;
}

// Called each time the plugin is invoked from a Frame Processor in JS.
- (id)callback:(Frame*)frame withArguments:(NSDictionary*)arguments {
  // The frame's pixel data and orientation
  CMSampleBufferRef buffer = frame.buffer;
  UIImageOrientation orientation = frame.orientation;
  // code goes here
  return @[];
}

// +load runs when the class is loaded, registering the plugin under the name "detectFaces".
+ (void)load {
  // highlight-start
  [FrameProcessorPluginRegistry addFrameProcessorPlugin:@"detectFaces"
                                        withInitializer:^FrameProcessorPlugin*(NSDictionary* options) {
    return [[FaceDetectorFrameProcessorPlugin alloc] initWithOptions:options];
  }];
  // highlight-end
}

@end
```

:::note
The Frame Processor Plugin will be exposed to JS through the `VisionCameraProxy` object. In this case, you would retrieve it with `VisionCameraProxy.getFrameProcessorPlugin("detectFaces")`.
:::

4. **Implement your frame processing.** See the [Example Plugin (Objective-C)](https://github.com/mrousavy/react-native-vision-camera/blob/main/package/example/ios/Frame%20Processor%20Plugins/Example%20Plugin%20%28Objective%2DC%29) for reference.

</TabItem>
<TabItem value="swift">

1. Open your project in Xcode.

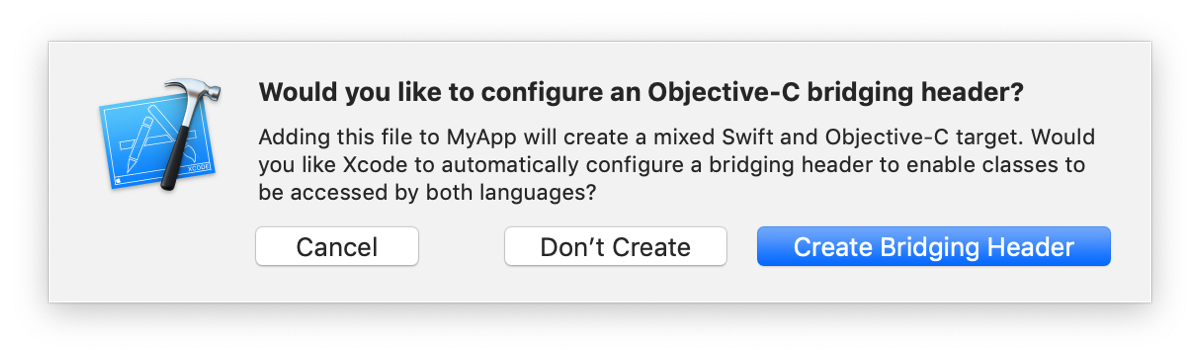

2. Create a Swift file. For the Face Detector Plugin, this will be `FaceDetectorFrameProcessorPlugin.swift`. If Xcode asks you to create a Bridging Header, press **Create Bridging Header**.
3. Inside the newly created Bridging Header, add the following code:

```objc
#import <VisionCamera/FrameProcessorPlugin.h>
#import <VisionCamera/Frame.h>
```

4. In the Swift file, add the following code:

```swift
@objc(FaceDetectorFrameProcessorPlugin)
public class FaceDetectorFrameProcessorPlugin: FrameProcessorPlugin {

  // Called each time the plugin is invoked from a Frame Processor in JS.
  public override func callback(_ frame: Frame!,
                                withArguments arguments: [String:Any]) -> Any {
    // The frame's pixel data and orientation
    let buffer = frame.buffer
    let orientation = frame.orientation
    // code goes here
    return [Any]()
  }
}
```

5. In your `AppDelegate.m`, add the following imports:

```objc
#import "YOUR_XCODE_PROJECT_NAME-Swift.h"
#import <VisionCamera/FrameProcessorPlugin.h>
#import <VisionCamera/FrameProcessorPluginRegistry.h>
```

6. In your `AppDelegate.m`, add the following code to `application:didFinishLaunchingWithOptions:`:

```objc
- (BOOL)application:(UIApplication *)application didFinishLaunchingWithOptions:(NSDictionary *)launchOptions
{
  // ...

  // highlight-start
  [FrameProcessorPluginRegistry addFrameProcessorPlugin:@"detectFaces"
                                        withInitializer:^FrameProcessorPlugin*(NSDictionary* options) {
    return [[FaceDetectorFrameProcessorPlugin alloc] initWithOptions:options];
  }];
  // highlight-end

  return [super application:application didFinishLaunchingWithOptions:launchOptions];
}
```

7. **Implement your frame processing.** See [Example Plugin (Swift)](https://github.com/mrousavy/react-native-vision-camera/blob/main/package/example/ios/Frame%20Processor%20Plugins/Example%20Plugin%20%28Swift%29) for reference.
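
The `// code goes here` placeholder is where the actual face detection happens. One possible approach, which is our assumption and not something VisionCamera itself provides, is to run Apple's Vision framework (for example a `VNDetectFaceRectanglesRequest`) on `frame.buffer`. Vision reports each face's `boundingBox` normalized to the range 0 to 1 with the origin in the lower-left corner, so a small helper can flip the y axis and pack the boxes into plain numbers and dictionaries before they are returned from `callback`. The sketch below covers only that conversion step; `faceBoundsToJS` and `facesToJS` are hypothetical names:

```swift
import Foundation

// Hypothetical helpers (not part of VisionCamera). They assume the input
// rectangles follow the Vision framework convention: normalized to 0...1,
// with the origin in the lower-left corner of the image.

/// Converts one normalized, lower-left-origin box into a top-left-origin
/// dictionary of plain numbers that can be returned to JS.
func faceBoundsToJS(_ box: CGRect) -> [String: Double] {
  let x = Double(box.origin.x)
  let y = Double(box.origin.y)
  let width = Double(box.size.width)
  let height = Double(box.size.height)
  return [
    "x": x,
    // Flip the y axis: the box's top edge, measured from the top of the image.
    "y": 1.0 - y - height,
    "width": width,
    "height": height,
  ]
}

/// Converts all detected face boxes at once, e.g. the `boundingBox`
/// values of the `VNFaceObservation`s produced by a Vision request.
func facesToJS(_ boxes: [CGRect]) -> [[String: Double]] {
  return boxes.map(faceBoundsToJS)
}
```

Inside `callback`, you could then return something like `facesToJS(observations.map { $0.boundingBox })`, where `observations` is the array of `VNFaceObservation` results from your Vision request. Limiting the return value to numbers, arrays, and dictionaries keeps it in simple shapes that can be handed back to JS.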

</TabItem>
</Tabs>

<br />

#### 🚀 Next section: [Finish creating your Frame Processor Plugin](frame-processors-plugins-final) (or [add Android support to your Frame Processor Plugin](frame-processors-plugins-android))