chore: Improve native Frame Processor Plugin documentation (#1877)

4

.github/workflows/build-ios.yml

vendored

@@ -56,7 +56,7 @@ jobs:
 uses: actions/cache@v3
 with:
 path: |
-example/ios/Pods
+package/example/ios/Pods
 ~/Library/Caches/CocoaPods
 ~/.cocoapods
 key: ${{ runner.os }}-pods-${{ hashFiles('**/Podfile.lock') }}

@@ -117,7 +117,7 @@ jobs:
 uses: actions/cache@v3
 with:
 path: |
-example/ios/Pods
+package/example/ios/Pods
 ~/Library/Caches/CocoaPods
 ~/.cocoapods
 key: ${{ runner.os }}-pods-${{ hashFiles('**/Podfile.lock') }}

@@ -51,8 +51,9 @@ Similar to a TurboModule, the Frame Processor Plugin Registry API automatically
 Return values will automatically be converted to JS values, assuming they are representable in the ["Types" table](#types). So the following Java Frame Processor Plugin:
 
 ```java
+@Nullable
 @Override
-public Object callback(Frame frame, Object[] params) {
+public Object callback(@NonNull Frame frame, @Nullable Map<String, Object> arguments) {
 return "cat";
 }
 ```

@@ -70,8 +71,9 @@ export function detectObject(frame: Frame): string {
 You can also manipulate the buffer and return it (or a copy of it) by returning a [`Frame`][2]/[`Frame`][3] instance:
 
 ```java
+@Nullable
 @Override
-public Object callback(Frame frame, Object[] params) {
+public Object callback(@NonNull Frame frame, @Nullable Map<String, Object> arguments) {
 Frame resizedFrame = new Frame(/* ... */);
 return resizedFrame;
 }

@@ -107,12 +109,13 @@ const frameProcessor = useFrameProcessor((frame) => {
 To let the user know that something went wrong you can use Exceptions:
 
 ```java
+@Nullable
 @Override
-public Object callback(Frame frame, Object[] params) {
-if (params[0] instanceof String) {
+public Object callback(@NonNull Frame frame, @Nullable Map<String, Object> arguments) {
+if (arguments != null && arguments.get("codeType") instanceof String) {
 // ...
 } else {
-throw new Exception("First argument has to be a string!");
+throw new Exception("codeType property has to be a string!");
 }
 }
 ```

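The hunk above swaps positional `params[0]` checks for a lookup in a nullable arguments map. A minimal, standalone sketch of that validation follows. It assumes nothing about VisionCamera beyond the `codeType` key and the error message shown in the diff. The class `CodeTypeArgument` and its `read` method are hypothetical names, and an unchecked `IllegalArgumentException` is swapped in for the docs' plain `Exception` so the sketch compiles without a `throws` clause.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical helper (not part of VisionCamera): reads the named "codeType"
// argument from a nullable map, mirroring the check in the hunk above.
final class CodeTypeArgument {
    private CodeTypeArgument() {}

    static String read(Map<String, Object> arguments) {
        // The whole map may be null when the JS caller passes no arguments.
        Object value = (arguments == null) ? null : arguments.get("codeType");
        if (value instanceof String) {
            return (String) value;
        }
        // Assumption: unchecked exception instead of the docs' `new Exception(...)`.
        throw new IllegalArgumentException("codeType property has to be a string!");
    }

    public static void main(String[] args) {
        Map<String, Object> valid = new HashMap<>();
        valid.put("codeType", "qr");
        System.out.println(read(valid));

        Map<String, Object> invalid = new HashMap<>();
        invalid.put("codeType", 1234); // same bad value as the JS example in the next hunk
        try {
            read(invalid);
        } catch (IllegalArgumentException e) {
            System.out.println("Error: " + e.getMessage());
        }
    }
}
```

Keeping the null check and the type check in one place means a missing map and a wrongly typed value fail with the same message.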
@@ -123,7 +126,7 @@ Which will throw a JS-error:
 const frameProcessor = useFrameProcessor((frame) => {
 'worklet'
 try {
-const codes = scanCodes(frame, true)
+const codes = scanCodes(frame, { codeType: 1234 })
 } catch (e) {
 console.log(`Error: ${e.message}`)
 }

@@ -143,9 +146,14 @@ If your Frame Processor takes longer than a single frame interval to execute, or
 For example, a realtime video chat application might use WebRTC to send the frames to the server. I/O operations (networking) are asynchronous, and we don't _need_ to wait for the upload to succeed before pushing the next frame, so we copy the frame and perform the upload on another Thread.
 
 ```java
+@Nullable
 @Override
-public Object callback(Frame frame, Object[] params) {
-String serverURL = (String)params[0];
+public Object callback(@NonNull Frame frame, @Nullable Map<String, Object> arguments) {
+if (arguments == null) {
+return null;
+}
+
+String serverURL = (String)arguments.get("serverURL");
 Frame frameCopy = new Frame(/* ... */);
 
 uploaderQueue.runAsync(() -> {

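The copy-then-upload pattern described in the hunk above can be sketched without any camera types. This is an illustration under stated assumptions only: a `byte[]` stands in for the frame, a single-threaded `ExecutorService` stands in for the docs' unspecified `uploaderQueue`, and `upload` is a placeholder that sums the bytes instead of doing network I/O. `AsyncFrameUpload`, `submitCopy` and the URL are hypothetical.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Hypothetical sketch: copy the frame data first, then do the slow work on
// another thread so the caller can return before the "upload" finishes.
final class AsyncFrameUpload {
    // Assumption: a daemon single-thread executor stands in for `uploaderQueue`.
    private static final ExecutorService UPLOADER = Executors.newSingleThreadExecutor(r -> {
        Thread thread = new Thread(r, "uploader");
        thread.setDaemon(true);
        return thread;
    });

    private AsyncFrameUpload() {}

    static Future<Integer> submitCopy(byte[] frameBytes, String serverURL) {
        // Copy synchronously: the caller's buffer may be reused once we return.
        byte[] copy = frameBytes.clone();
        Callable<Integer> task = () -> upload(copy, serverURL);
        return UPLOADER.submit(task);
    }

    // Placeholder for real network I/O: returns the sum of the bytes it was given.
    private static int upload(byte[] data, String serverURL) {
        int sum = 0;
        for (byte b : data) {
            sum += b;
        }
        return sum;
    }

    public static void main(String[] args) throws Exception {
        byte[] frame = {1, 2, 3, 4};
        Future<Integer> pending = submitCopy(frame, "https://example.invalid/upload");
        frame[0] = 100; // simulate the camera reusing the buffer
        System.out.println(pending.get()); // sum of the copied bytes, not the mutated buffer
    }
}
```

Because the copy is taken before the task is queued, later writes to the original buffer cannot affect the pending upload.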
@@ -18,6 +18,7 @@ const plugin = VisionCameraProxy.getFrameProcessorPlugin('scanFaces')
 */
 export function scanFaces(frame: Frame): object {
 'worklet'
+if (plugin == null) throw new Error('Failed to load Frame Processor Plugin "scanFaces"!')
 return plugin.call(frame)
 }
 ```

@@ -34,7 +34,7 @@ For reference see the [CLI's docs](https://github.com/mateusz1913/vision-camera-
 protected List<ReactPackage> getPackages() {
 @SuppressWarnings("UnnecessaryLocalVariable")
 List<ReactPackage> packages = new PackageList(this).getPackages();
-...
+// ...
 // highlight-next-line
 packages.add(new FaceDetectorFrameProcessorPluginPackage()); // <- add
 return packages;

@@ -56,13 +56,16 @@ For reference see the [CLI's docs](https://github.com/mateusz1913/vision-camera-
 3. Add the following code:
 
 ```java
+import androidx.annotation.NonNull;
+import androidx.annotation.Nullable;
 import com.mrousavy.camera.frameprocessor.Frame;
 import com.mrousavy.camera.frameprocessor.FrameProcessorPlugin;
 
 public class FaceDetectorFrameProcessorPlugin extends FrameProcessorPlugin {
-
+@Nullable
 @Override
-public Object callback(Frame frame, Map<String, Object> arguments) {
+public Object callback(@NonNull Frame frame, @Nullable Map<String, Object> arguments) {
+// highlight-next-line
 // code goes here
 return null;
 }

@@ -73,20 +76,20 @@ public class FaceDetectorFrameProcessorPlugin extends FrameProcessorPlugin {
 5. Create a new Java file which registers the Frame Processor Plugin in a React Package, for the Face Detector plugin this file will be called `FaceDetectorFrameProcessorPluginPackage.java`:
 
 ```java
+import androidx.annotation.NonNull;
 import com.facebook.react.ReactPackage;
 import com.facebook.react.bridge.NativeModule;
 import com.facebook.react.bridge.ReactApplicationContext;
 import com.facebook.react.uimanager.ViewManager;
 import com.mrousavy.camera.frameprocessor.FrameProcessorPlugin;
 import com.mrousavy.camera.frameprocessor.FrameProcessorPluginRegistry;
-import javax.annotation.Nonnull;
 
 public class FaceDetectorFrameProcessorPluginPackage implements ReactPackage {
-FaceDetectorFrameProcessorPluginPackage() {
 // highlight-start
+FaceDetectorFrameProcessorPluginPackage() {
 FrameProcessorPluginRegistry.addFrameProcessorPlugin("detectFaces", options -> new FaceDetectorFrameProcessorPlugin());
-// highlight-end
 }
+// highlight-end
 
 @NonNull
 @Override

@@ -94,9 +97,9 @@ public class FaceDetectorFrameProcessorPluginPackage implements ReactPackage {
 return Collections.emptyList();
 }
 
-@Nonnull
+@NonNull
 @Override
-public List<ViewManager> createViewManagers(@Nonnull ReactApplicationContext reactContext) {
+public List<ViewManager> createViewManagers(@NonNull ReactApplicationContext reactContext) {
 return Collections.emptyList();
 }
 }

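The package class above registers a name together with an initializer lambda (`options -> new FaceDetectorFrameProcessorPlugin()`), and the JS side later looks the plugin up by that name and checks for `null`. The sketch below shows one way such a name-to-initializer registry could behave. It is a hypothetical stand-in and not VisionCamera's implementation: `PluginRegistrySketch`, its `Plugin` interface, `addPlugin` and `getPlugin` are all illustrative names.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

// Hypothetical stand-in for a name-to-initializer plugin registry.
// NOT VisionCamera's implementation; every name here is illustrative.
final class PluginRegistrySketch {
    /** Minimal plugin shape: receives per-call arguments, returns a result. */
    interface Plugin {
        Object callback(Map<String, Object> arguments);
    }

    private static final Map<String, Function<Map<String, Object>, Plugin>> INITIALIZERS =
            new HashMap<>();

    private PluginRegistrySketch() {}

    /** Stores the initializer under the given name; no plugin is constructed yet. */
    static void addPlugin(String name, Function<Map<String, Object>, Plugin> initializer) {
        INITIALIZERS.put(name, initializer);
    }

    /** Runs the initializer for the name, or returns null if nothing was registered. */
    static Plugin getPlugin(String name, Map<String, Object> options) {
        Function<Map<String, Object>, Plugin> initializer = INITIALIZERS.get(name);
        return initializer == null ? null : initializer.apply(options);
    }

    public static void main(String[] args) {
        // Register once, e.g. from a package constructor as in the hunk above.
        addPlugin("detectFaces", options -> arguments -> "face");

        Plugin plugin = getPlugin("detectFaces", null);
        System.out.println(plugin.callback(null));

        // An unregistered name yields null, which callers are expected to check.
        System.out.println(getPlugin("scanCodes", null));
    }
}
```

Deferring construction to the initializer lets per-instance options reach the plugin at lookup time rather than at registration time.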
@@ -113,7 +116,7 @@ The Frame Processor Plugin will be exposed to JS through the `VisionCameraProxy`
 protected List<ReactPackage> getPackages() {
 @SuppressWarnings("UnnecessaryLocalVariable")
 List<ReactPackage> packages = new PackageList(this).getPackages();
-...
+// ...
 // highlight-next-line
 packages.add(new FaceDetectorFrameProcessorPluginPackage()); // <- add
 return packages;

@@ -133,7 +136,8 @@ import com.mrousavy.camera.frameprocessor.FrameProcessorPlugin
 
 class FaceDetectorFrameProcessorPlugin: FrameProcessorPlugin() {
 
-override fun callback(frame: Frame, arguments: Map<String, Object>): Any? {
+override fun callback(frame: Frame, arguments: Map<String, Object>?): Any? {
+// highlight-next-line
 // code goes here
 return null
 }

@@ -151,13 +155,13 @@ import com.facebook.react.uimanager.ViewManager
 import com.mrousavy.camera.frameprocessor.FrameProcessorPlugin
 
 class FaceDetectorFrameProcessorPluginPackage : ReactPackage {
-init {
 // highlight-start
+init {
 FrameProcessorPluginRegistry.addFrameProcessorPlugin("detectFaces") { options ->
 FaceDetectorFrameProcessorPlugin()
 }
-// highlight-end
 }
+// highlight-end
 
 override fun createNativeModules(reactContext: ReactApplicationContext): List<NativeModule> {
 return emptyList()

@@ -180,7 +184,7 @@ The Frame Processor Plugin will be exposed to JS through the `VisionCameraProxy`
 protected List<ReactPackage> getPackages() {
 @SuppressWarnings("UnnecessaryLocalVariable")
 List<ReactPackage> packages = new PackageList(this).getPackages();
-...
+// ...
 // highlight-next-line
 packages.add(new FaceDetectorFrameProcessorPluginPackage()); // <- add
 return packages;

@@ -60,7 +60,7 @@ For reference see the [CLI's docs](https://github.com/mateusz1913/vision-camera-
 CMSampleBufferRef buffer = frame.buffer;
 UIImageOrientation orientation = frame.orientation;
 // code goes here
-return @[];
+return nil;
 }
 
 + (void) load {

@@ -89,56 +89,49 @@ The Frame Processor Plugin will be exposed to JS through the `VisionCameraProxy`

|

||||

|

||||

|

||||

|

||||

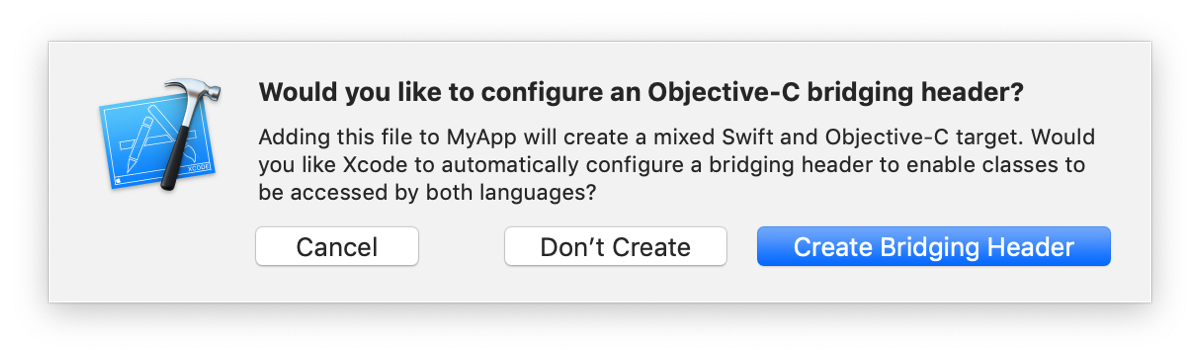

3. Inside the newly created Bridging Header, add the following code:

|

||||

|

||||

```objc

|

||||

#import <VisionCamera/FrameProcessorPlugin.h>

|

||||

#import <VisionCamera/Frame.h>

|

||||

```

|

||||

|

||||

4. In the Swift file, add the following code:

|

||||

3. In the Swift file, add the following code:

|

||||

|

||||

```swift

|

||||

import VisionCamera

|

||||

|

||||

@objc(FaceDetectorFrameProcessorPlugin)

|

||||

public class FaceDetectorFrameProcessorPlugin: FrameProcessorPlugin {

|

||||

|

||||

public override func callback(_ frame: Frame!,

|

||||

withArguments arguments: [String:Any]) -> Any {

|

||||

public override func callback(_ frame: Frame, withArguments arguments: [AnyHashable : Any]?) -> Any {

|

||||

let buffer = frame.buffer

|

||||

let orientation = frame.orientation

|

||||

// code goes here

|

||||

return []

|

||||

return nil

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

5. In your `AppDelegate.m`, add the following imports:

|

||||

4. Create an Objective-C source file that will be used to automatically register your plugin

|

||||

|

||||

```objc

|

||||

#import "YOUR_XCODE_PROJECT_NAME-Swift.h"

|

||||

#import <VisionCamera/FrameProcessorPlugin.h>

|

||||

#import <VisionCamera/FrameProcessorPluginRegistry.h>

|

||||

```

|

||||

|

||||

6. In your `AppDelegate.m`, add the following code to `application:didFinishLaunchingWithOptions:`:

|

||||

#import "YOUR_XCODE_PROJECT_NAME-Swift.h" // <--- replace "YOUR_XCODE_PROJECT_NAME" with the actual value of your xcode project name

|

||||

|

||||

```objc

|

||||

- (BOOL)application:(UIApplication *)application didFinishLaunchingWithOptions:(NSDictionary *)launchOptions

|

||||

@interface FaceDetectorFrameProcessorPlugin (FrameProcessorPluginLoader)

|

||||

@end

|

||||

|

||||

@implementation FaceDetectorFrameProcessorPlugin (FrameProcessorPluginLoader)

|

||||

|

||||

+ (void)load

|

||||

{

|

||||

// ...

|

||||

|

||||

// highlight-start

|

||||

[FrameProcessorPluginRegistry addFrameProcessorPlugin:@"detectFaces"

|

||||

withInitializer:^FrameProcessorPlugin*(NSDictionary* options) {

|

||||

withInitializer:^FrameProcessorPlugin* (NSDictionary* options) {

|

||||

return [[FaceDetectorFrameProcessorPlugin alloc] initWithOptions:options];

|

||||

}];

|

||||

// highlight-end

|

||||

|

||||

return [super application:application didFinishLaunchingWithOptions:launchOptions];

|

||||

}

|

||||

|

||||

@end

|

||||

```

|

||||

|

||||

7. **Implement your frame processing.** See [Example Plugin (Swift)](https://github.com/mrousavy/react-native-vision-camera/blob/main/package/example/ios/Frame%20Processor%20Plugins/Example%20Plugin%20%28Swift%29) for reference.

|

||||

5. **Implement your frame processing.** See [Example Plugin (Swift)](https://github.com/mrousavy/react-native-vision-camera/blob/main/package/example/ios/Frame%20Processor%20Plugins/Example%20Plugin%20%28Swift%29) for reference.

|

||||

|

||||

|

||||

</TabItem>

|

||||

|

||||
