feature: Frame Processors (iOS) (#2)

* Clean up Frame Processor
* Create FrameProcessorHolder
* Create FrameProcessorDelegate in ObjC++
* Move frame processor to FrameProcessorDelegate
* Decorate runtime, check for null
* Update FrameProcessorDelegate.mm
* Cleanup FrameProcessorBindings.mm
* Fix RuntimeDecorator.h import
* Update FrameProcessorDelegate.mm
* "React" -> "React Helper" to avoid confusion
* Rename folders again
* Fix podspec flattening a lot of headers, causing REA nameclash
* Fix header imports to avoid REA naming collision
* Lazily initialize jsi::Runtime on DispatchQueue
* Install frame processor bindings from Swift
* First try to call jsi::Function (frame processor) 👀
* Call viewForReactTag on RCT main thread
* Fix bridge accessing
* Add more logs
* Update CameraViewManager.swift
* Add more TODOs
* Re-indent .cpp files
* Fix RCTTurboModule import podspec
* Remove unnecessary include check for swift umbrella header
* Merge branch 'main' into frame-processors
* Docs: use static width for images (283)
* Create validate-cpp.yml
* Update a lot of packages to latest
* Set SWIFT_VERSION to 5.2 in podspec
* Create clean.sh
* Delete unused C++ files
* podspec: Remove CLANG_CXX_LANGUAGE_STANDARD and OTHER_CFLAGS
* Update pod lockfiles
* Regenerate lockfiles
* Remove IOSLogger
* Use NSLog
* Create FrameProcessorManager (inherits from REA RuntimeManager)
* Create reanimated::RuntimeManager shared_ptr
* Re-integrate pods
* Add react-native-reanimated >=2 peerDependency
* Add metro-config
* blacklist -> exclusionList
* Try to call worklet
* Fix jsi::Value* initializer
* Call ShareableValue::adapt (makeShareable) with React/JS Runtime
* Add null-checks
* Lift runtime manager creation out of delegate, into bindings
* Remove debug statement
* Make RuntimeManager unique_ptr
* Set _FRAME_PROCESSOR
* Extract convertJSIFunctionToFrameProcessorCallback
* Print frame
* Merge branch 'main' into frame-processors
* Reformat Swift code
* Install reanimated from npm again
* Re-integrate Pods
* Dependabot: Also scan example/ and docs/
* Update validate-cpp.yml
* Create FrameProcessorUtils
* Create Frame.h
* Abstract HostObject creation away
* Fix types
* Fix frame processor call
* Add todo
* Update lockfiles
* Add C++ contributing instructions
* Update CONTRIBUTING.md
* Add android/src/main/cpp to cpplint
* Update cpplint.sh
* Fix a few cpplint errors
* Fix globals
* Fix a few more cpplint errors
* Update App.tsx
* Update AndroidLogger.cpp
* Format
* Fix cpplint script (check-cpp)
* Try to simplify frame processor
* y
* Update FrameProcessorUtils.mm
* Update FrameProcessorBindings.mm
* Update CameraView.swift
* Update CameraViewManager.m
* Restructure everything
* fix
* Fix `@objc` export (make public)
* Refactor installFrameProcessorBindings into FrameProcessorRuntimeManager
* Add swift RCTBridge.runOnJS helper
* Fix run(onJS)
* Add pragma once
* Add `&self` to lambda
* Update FrameProcessorRuntimeManager.mm
* reorder imports
* Fix imports
* forward declare
* Rename extension
* Destroy buffer after execution
* Add FrameProcessorPluginRegistry base
* Merge branch 'main' into frame-processors
* Add frameProcessor to types
* Update Camera.tsx
* Fix rebase merge
* Remove movieOutput
* Use `useFrameProcessor`
* Fix bad merge
* Add additional ESLint rules
* Update lockfiles
* Update CameraViewManager.m
* Add support for V8 runtime
* Add frame processor plugins API
* Print plugin invoke
* Fix React Utils in podspec
* Fix runOnJS swift name
* Remove invalid redecl of `captureSession`
* Use REA 2.1.0 which includes all my big PRs 🎉
* Update validate-cpp.yml
* Update Podfile.lock
* Remove Flipper
* Fix dereferencing
* Capture `self` by value. Fucking hell, what a dumb mistake.
* Override a few HostObject functions
* Expose isReady, width, height, bytesPerRow and planesCount
* use hook again
* Expose property names
* FrameProcessor -> Frame
* Update CameraView+RecordVideo.swift
* Add Swift support for Frame Processors Plugins
* Add macros for plugin installation
* Add ObjC frame processor plugin
* Correctly install frame processor plugins
* Don't require custom name for macro
* Check if plugin already exists
* Implement QR Code Frame Processor Plugin in Swift
* Adjust ObjC style frame processor macro
* optimize
* Add `frameProcessorFrameDropRate`
* Fix types
* Only log once
* Log if it executes slowly
* Implement `frameProcessorFps`
* Implement manual encoded video recordings
* Use recommended video settings
* Add fileType types
* Ignore if input is not ready for media data
* Add completion handler
* Add audio buffer sampling
* Init only for video frame
* use AVAssetWriterInputPixelBufferAdaptor
* Remove AVAssetWriterInputPixelBufferAdaptor
* Rotate VideoWriter
* Always assume portrait orientation
* Update RecordingSession.swift
* Use a separate Queue for Audio
* Format Swift
* Update CameraView+RecordVideo.swift
* Use `videoQueue` instead of `cameraQueue`
* Move example plugins to example app
* Fix hardcoded name in plugin macro
* QRFrame... -> QRCodeFrame...
* Update FrameProcessorPlugin.h
* Add example frame processors to JS base
* Update QRCodeFrameProcessorPluginSwift.m
* Add docs to create FP Plugins
* Update FRAME_PROCESSORS_CREATE.mdx
* Update FRAME_PROCESSORS_CREATE.mdx
* Use `AVAssetWriterInputPixelBufferAdaptor` for efficient pixel buffer recycling
* Add customizable `pixelFormat`
* Use native format if available
* Update project.pbxproj
* Set video width and height as source-pixel-buffer attributes
* Catch
* Update App.tsx
* Don't explicitly set video dimensions, let CVPixelBufferPool handle it
* Add a few logs
* Cleanup
* Update CameraView+RecordVideo.swift
* Eagerly initialize asset writer to fix stutter at first frame
* Use `cameraQueue` DispatchQueue to not block CaptureDataOutputDelegate
* Fix duration calculation
* cleanup
* Cleanup
* Swiftformat
* Return available video codecs
* Only show frame drop notification for video output
* Remove photo and video codec functionality
  It was too much complexity and probably never used anyways.
* Revert all android related changes for now
* Cleanup
* Remove unused header
* Update AVAssetWriter.Status+descriptor.swift
* Only call Frame Processor for Video Frames
* Fix `if`
* Add support for Frame Processor plugin parameters/arguments
* Fix arg support
* Move to JSIUtils.mm
* Update JSIUtils.h
* Update FRAME_PROCESSORS_CREATE.mdx
* Update FRAME_PROCESSORS_CREATE.mdx
* Upgrade packages for docs/
* fix docs
* Rename
* highlight lines
* docs
* community plugins
* Update FRAME_PROCESSOR_CREATE_FINAL.mdx
* Update FRAME_PROCESSOR_PLUGIN_LIST.mdx
* Update FRAME_PROCESSOR_PLUGIN_LIST.mdx
* Update dependencies (1/2)
* Update dependencies (2/2)
* Update Gemfile.lock
* add FP docs
* Update README.md
* Make `lastFrameProcessor` private
* add `frameProcessor` docs
* fix docs
* adjust docs
* Update DEVICES.mdx
* fix
* s
* Add logs demo
* add metro restart note
* Update FRAME_PROCESSOR_CREATE_PLUGIN_IOS.mdx
* Mirror video device
* Update AVCaptureVideoDataOutput+mirror.swift
* Create .swift-version
* Enable whole module optimization
* Fix recording mirrored video
* Swift format
* Clean dictionary on `markInvalid`
* Fix cleanup
* Add docs for disabling frame processors
* Update project.pbxproj
* Revert "Update project.pbxproj"
  This reverts commit e67861e51b88b4888a6940e2d20388f3044211d0.
* Log frame drop reason
* Format
* add more samples
* Add clang-format
* also check .mm
* Revert "also check .mm"
  This reverts commit 8b9d5e2c29866b05909530d104f6633d6c49eadd.
* Revert "Add clang-format"
  This reverts commit 7643ac808e0fc34567ea1f814e73d84955381636.
* Use `kCVPixelFormatType_420YpCbCr8BiPlanarVideoRange` as default
* Read matching video attributes from videoSettings
* Add TODO
* Swiftformat
* Conditionally disable frame processors
* Assert if trying to use frame processors when disabled
* Add frame-processors demo gif
* Allow disabling frame processors via `VISION_CAMERA_DISABLE_FRAME_PROCESSORS`
* Update FrameProcessorRuntimeManager.mm
* Update FRAME_PROCESSORS.mdx
* Update project.pbxproj
* Update FRAME_PROCESSORS_CREATE_OVERVIEW.mdx


@@ -17,14 +17,16 @@ import useBaseUrl from '@docusaurus/useBaseUrl';

Often you'd want to animate specific props in the Camera. For example, if you want to create a custom zoom gesture, you can smoothly animate the Camera's `zoom` property.

The `<Camera>` component already provides a natively implemented zoom gesture which you can enable with the [`enableZoomGesture`](/docs/api/interfaces/cameraprops.cameraprops-1#enablezoomgesture) prop. This does not require any additional work, but if you want to set up a custom gesture, such as the one in Snapchat or Instagram where you move your finger up while recording, continue reading.

### Animation libraries

While you can use any animation library to animate the `zoom` property (or use no animation library at all), it is recommended to use [react-native-reanimated](https://github.com/software-mansion/react-native-reanimated) (v2) to achieve the best performance. Head over to their [Installation guide](https://docs.swmansion.com/react-native-reanimated/docs/installation) to install Reanimated if you haven't already.

### Implementation

The following example implements a button which smoothly zooms to a random value using [react-native-reanimated](https://github.com/software-mansion/react-native-reanimated):

```tsx
import Reanimated, {
  useAnimatedProps,

@@ -72,13 +74,16 @@ export function App() {

### Explanation

1. The `Camera` was made animatable using `Reanimated.createAnimatedComponent`
2. The `zoom` property is added to the whitelisted native props to make it animatable.
   > Note that this might not be needed in the future, see: [reanimated#1409](https://github.com/software-mansion/react-native-reanimated/pull/1409)
3. Using [`useSharedValue`](https://docs.swmansion.com/react-native-reanimated/docs/api/useSharedValue), we're creating a shared value that holds the value for the `zoom` property.
4. Using the [`useAnimatedProps`](https://docs.swmansion.com/react-native-reanimated/docs/api/useAnimatedProps) hook, we apply the shared value to Camera's `zoom` property.
5. We apply the animated props to the `ReanimatedCamera` component's `animatedProps` property.

### Logarithmic scale

A Camera's `zoom` property is represented in a **logarithmic scale**. That means increasing from `0` to `0.1` will appear to be a much larger offset than increasing from `0.9` to `1`. If you want to implement a zoom gesture (`<PinchGestureHandler>`, `<PanGestureHandler>`), try to flatten the `zoom` property to a **linear scale** by raising it to a power, e.g. by squaring it (`zoom.value ** 2`).
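As a rough illustration of the squaring approach above, the following sketch maps a linear gesture progress value onto the Camera's `zoom` range. It assumes the gesture has already been normalized to `0..1` (for example from a pinch scale or a pan distance); the helper name `zoomFromGestureProgress` is hypothetical and not part of VisionCamera, and inside a Reanimated gesture handler it would additionally need the `'worklet'` directive.

```typescript
/**
 * Hypothetical helper: converts a linear gesture progress (0..1) into a `zoom`
 * value by squaring it, so that small movements near the start change the zoom
 * less, compensating for the logarithmic feel of the `zoom` property.
 */
function zoomFromGestureProgress(progress: number): number {
  // Clamp the input so out-of-range gesture values can't produce invalid zoom values
  const clamped = Math.min(Math.max(progress, 0), 1);
  return clamped ** 2;
}

// Usage: halfway through the gesture results in a zoom of 0.25 instead of 0.5
const zoom = zoomFromGestureProgress(0.5);
console.log(zoom); // 0.25
```

Squaring is just one possible curve; a higher exponent flattens the start of the gesture even more.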

<br />

@@ -126,7 +126,7 @@ function App() {

```

:::info
If you don't care about fast resume times you can also fully unmount the `<Camera>` view instead, which will use less memory (RAM).
:::

<br />

@@ -98,3 +98,8 @@ function App() {

  return <Camera ref={camera} {...cameraProps} />
}
```

<br />

#### 🚀 Next section: [Troubleshooting](troubleshooting)

@@ -6,14 +6,10 @@ sidebar_label: Frame Processors

import useBaseUrl from '@docusaurus/useBaseUrl';

<div>
  <svg xmlns="http://www.w3.org/2000/svg" width="283" height="535" style={{ float: 'right' }}>
    <image href={useBaseUrl("img/frame-processors.gif")} x="18" y="33" width="247" height="469" />
    <image href={useBaseUrl("img/frame.png")} width="283" height="535" />
  </svg>
</div>

@@ -21,48 +17,147 @@ FRAME PROCESSORS ARE STILL WORK IN PROGRESS - SEE [#2](https://github.com/cuvent

### What are frame processors?

Frame processors are functions that are written in JavaScript (or TypeScript) which can be used to **process frames the camera "sees"**.
Inside those functions you can call **Frame Processor Plugins**, which are high-performance native functions specifically designed for certain use cases.

For example, you might want to create a QR code scanner **without ever writing native code**, while still **achieving almost-native performance**:

```jsx
function App() {
  const frameProcessor = useFrameProcessor((frame) => {
    'worklet'
    const qrCodes = scanQRCodes(frame)
    console.log(`Detected QR Codes: ${qrCodes}`)
  }, [])

  return (
    <Camera
      {...cameraProps}
      frameProcessor={frameProcessor}
    />
  )
}
```

Frame processors are by no means limited to QR code detection; other examples include:

* **AI** for **facial recognition**
* **AI** for **object detection**
* Using **TensorFlow**, **MLKit Vision** or other libraries
* Creating **real-time video chats** using **WebRTC** to directly send the camera frames over the network
* Creating **Snapchat-like filters**, e.g. draw a dog-mask filter over the user's face
* Creating **color filters** with depth-detection
* Using **custom C++ processors** exposed to JS for maximum performance

Because of the Frame Processor API's extensibility, you can even create your own **custom code-scanner plugins**. For example, you might want to support a custom code design such as **Snapchat's SnapCodes** or **Apple's App Clips**:

<div align="center">
  <img src={useBaseUrl("img/snap-code.png")} height={150} />
  <img src={useBaseUrl("img/appclip.png")} height={150} style={{ marginLeft: 50 }} />
</div>

### Interacting with Frame Processors

Since Frame Processors run in Worklets, you can also easily read from and assign to [**Shared Values**](https://docs.swmansion.com/react-native-reanimated/docs/shared-values):

```tsx {6}
// represents position of the cat on the screen 🐈
const catBounds = useSharedValue({ top: 0, left: 0, right: 0, bottom: 0 })

const frameProcessor = useFrameProcessor((frame) => {
  'worklet'
  catBounds.value = scanFrameForCat(frame)
}, [])

const boxOverlayStyle = useAnimatedStyle(() => ({
  position: 'absolute',
  borderWidth: 1,
  borderColor: 'red',
  ...catBounds.value
}), [catBounds])

return (
  <View>
    <Camera {...cameraProps} frameProcessor={frameProcessor} />
    {/* draws a red rectangle on the screen which surrounds the cat */}
    <Reanimated.View style={boxOverlayStyle} />
  </View>
)
```

You can also call back into the React JS thread using [`runOnJS`](https://docs.swmansion.com/react-native-reanimated/docs/api/runOnJS):

```tsx {9}
const onQRCodeDetected = useCallback((qrCode: string) => {
  navigation.push("ProductPage", { productId: qrCode })
}, [navigation])

const frameProcessor = useFrameProcessor((frame) => {
  'worklet'
  const qrCodes = scanQRCodes(frame)
  if (qrCodes.length > 0) {
    runOnJS(onQRCodeDetected)(qrCodes[0])
  }
}, [onQRCodeDetected])
```

### Technical

**Frame Processors** are JS functions that will be **workletized** using [react-native-reanimated](https://github.com/software-mansion/react-native-reanimated). They are created on a **custom camera thread** using a separate JavaScript Runtime (_"VisionCamera JS-Runtime"_) and are **invoked synchronously** (using JSI) without ever going over the bridge.

**Frame Processor Plugins** are native functions (written in Objective-C, Swift, C++, Java or Kotlin) that are injected into the VisionCamera JS-Runtime. They can be **synchronously called** from your JS Frame Processors (using JSI) without ever going over the bridge.

> Learn how to [**create Frame Processor Plugins**](frame-processors-plugins-overview)

### Using Frame Processor Plugins

Frame Processor Plugins are distributed through npm. To install the [**vision-camera-qrcode-scanner**](https://github.com/mrousavy/vision-camera-qrcode-scanner) plugin, run:

```terminal
npm i vision-camera-qrcode-scanner
cd ios && pod install
```

Then add it to your `babel.config.js`. For the QR Code Scanner, this will be `__scanQRCodes`:

```js {6}
module.exports = {
  plugins: [
    [
      'react-native-reanimated/plugin',
      {
        globals: ['__scanQRCodes'],
      },
    ],
  ],
};
```

:::note
You have to restart the Metro bundler for changes in the `babel.config.js` file to take effect.
:::

That's it! 🎉 Now you can use it:

```ts
const frameProcessor = useFrameProcessor((frame: Frame) => {
  'worklet'
  const codes = scanQRCodes(frame)
  // ...
}, [])
```

Check out [**Frame Processor community plugins**](frame-processor-plugin-list) to discover plugins!

### Disabling Frame Processors

The Frame Processor API spawns a secondary JavaScript Runtime which consumes a small amount of extra CPU and RAM. If you're not using Frame Processors at all, you can disable them by setting the `VISION_CAMERA_DISABLE_FRAME_PROCESSORS` flag. Inside your `project.pbxproj`, find the `GCC_PREPROCESSOR_DEFINITIONS` parameter and add the flag:

```txt {3}
GCC_PREPROCESSOR_DEFINITIONS = (
  "DEBUG=1",
  "VISION_CAMERA_DISABLE_FRAME_PROCESSORS=1",
  "$(inherited)",
);
```

Make sure to add the flag to both your Debug and your Release configurations (only the Debug configuration should contain `"DEBUG=1"`).

<br />

#### 🚀 Next section: [Zooming with Reanimated](animated) (or [creating a Frame Processor Plugin](frame-processors-plugins-overview))

docs/docs/guides/FRAME_PROCESSORS_CREATE_OVERVIEW.mdx

@@ -0,0 +1,149 @@

---
id: frame-processors-plugins-overview
title: Creating Frame Processor Plugins
sidebar_label: Overview
---

import useBaseUrl from '@docusaurus/useBaseUrl';

## Overview

Frame Processor Plugins are **native functions** which can be directly called from a JS Frame Processor. (See [Frame Processors](frame-processors))

They **receive a frame from the Camera** as an input and can return any kind of output. For example, a `scanQRCodes` function returns an array of detected QR code strings in the frame:

```tsx {4-5}
function App() {
  const frameProcessor = useFrameProcessor((frame) => {
    'worklet'
    const qrCodes = scanQRCodes(frame)
    _log(`QR Codes in Frame: ${qrCodes}`)
  }, [])

  return (
    <Camera frameProcessor={frameProcessor} {...cameraProps} />
  )
}
```

To achieve **maximum performance**, the `scanQRCodes` function is written in a native language (e.g. Objective-C), but it will be directly called from the VisionCamera Frame Processor JavaScript-Runtime.

### Execution

Frame Processors will be **synchronously** called for each frame the Camera sees and have to finish executing before the next frame arrives, otherwise the next frame(s) will be dropped. For a frame rate of **30 FPS**, you have about **33ms** to finish processing frames. Use [`frameProcessorFps`](../api/interfaces/cameraprops.cameraprops-1#frameprocessorfps) to throttle the frame processor's FPS. For a QR Code Scanner, **5 FPS** might suffice.
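To make the timing numbers above concrete, here is a small sketch of the underlying arithmetic: the per-frame budget is `1000 / fps` milliseconds, and throttling a 30 FPS camera to a lower processor rate can be modeled as running the processor on every `n`-th frame. This is plain TypeScript for illustration only; the helper names are hypothetical, and it is an assumption that `frameProcessorFps` behaves like this simple frame-skipping model.

```typescript
/** Time budget (in milliseconds) available per frame at a given frame rate. */
function frameBudgetMs(fps: number): number {
  return 1000 / fps;
}

/**
 * Hypothetical frame-skipping model: how many camera frames pass between two
 * frame processor calls when throttling `cameraFps` down to `processorFps`.
 */
function framesBetweenCalls(cameraFps: number, processorFps: number): number {
  // Never call more often than once per camera frame
  return Math.max(1, Math.round(cameraFps / processorFps));
}

/** Decides whether the frame with the given index should be processed. */
function shouldProcessFrame(frameIndex: number, cameraFps: number, processorFps: number): boolean {
  return frameIndex % framesBetweenCalls(cameraFps, processorFps) === 0;
}

console.log(frameBudgetMs(30).toFixed(1)); // 33.3
console.log(framesBetweenCalls(30, 5)); // 6
```

With a 5 FPS QR code scanner on a 30 FPS camera, this model processes frames 0, 6, 12, … and skips the ones in between.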

### Return Types

Frame Processor Plugins can return any value that is representable in JS. For Objective-C, that maps to:

| Objective-C Type         | JS Type              |
|--------------------------|----------------------|
| `NSNumber`               | `number`             |
| `NSNumber` (boolean)     | `boolean`            |
| `NSString`               | `string`             |
| `NSArray`                | `[]`                 |
| `NSDictionary`           | `{}`                 |
| `nil` / `NSNull`         | `undefined`          |
| `RCTResponseSenderBlock` | `(any, any) => void` |

The values will automatically be converted to JS values, so the following Objective-C Frame Processor Plugin:

```objc
static inline id detectObject(CMSampleBufferRef buffer, NSArray* args) {
  return @"cat";
}
```

Returns a `string` in JS:

```ts
export function detectObject(frame: Frame): string {
  'worklet';
  const result = __detectObject(frame);
  _log(result) // <-- "cat"
  return result;
}
```
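If you want to type plugin results on the JS side, the conversion table above can be mirrored as a recursive TypeScript type. The sketch below is an assumption-based illustration, not a type exported by VisionCamera: the names `PluginValue` and `isPluginValue` are hypothetical, and `RCTResponseSenderBlock` callbacks are modeled loosely as plain functions.

```typescript
/** Hypothetical JS-side mirror of the Objective-C -> JS conversion table. */
type PluginValue =
  | number // NSNumber
  | boolean // NSNumber (boolean)
  | string // NSString
  | undefined // nil / NSNull
  | PluginValue[] // NSArray
  | { [key: string]: PluginValue } // NSDictionary
  | ((...args: unknown[]) => void); // RCTResponseSenderBlock (loosely typed)

/** Runtime check that a value only consists of the types listed in the table. */
function isPluginValue(value: unknown): value is PluginValue {
  const type = typeof value;
  if (type === 'number' || type === 'boolean' || type === 'string' || type === 'undefined' || type === 'function') {
    return true;
  }
  if (typeof value !== 'object' || value === null) {
    // null, bigint and symbol have no entry in the table (NSNull arrives as `undefined`)
    return false;
  }
  if (Array.isArray(value)) {
    return value.every(isPluginValue);
  }
  const record = value as Record<string, unknown>;
  return Object.keys(record).every((key) => isPluginValue(record[key]));
}

console.log(isPluginValue({ label: 'cat', confidence: 0.9, boxes: [[0, 0, 10, 10]] })); // true
console.log(isPluginValue([1, null])); // false
```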

### Parameters

Frame Processor Plugins can also accept parameters, following the same type convention as [return values](#return-types):

```ts
const frameProcessor = useFrameProcessor((frame) => {
  'worklet'
  const codes = scanCodes(frame, ['qr', 'barcode'])
}, [])
```

Or with multiple parameters:

```ts
const frameProcessor = useFrameProcessor((frame) => {
  'worklet'
  const codes = scanCodes(frame, true, 'hello-world', 42)
}, [])
```

### Long-running Frame Processors

If your Frame Processor Plugin takes longer than a single frame interval to execute, or runs asynchronously, you can create a **copy of the frame** and dispatch the actual frame processing to a **separate thread**.

For example, a real-time video chat application might use WebRTC to send the frames to the server. I/O operations (networking) are asynchronous, and we don't _need_ to wait for the upload to succeed before pushing the next frame, so we copy the frame and perform the upload on another thread.

```objc
static inline id sendFrameToWebRTC(CMSampleBufferRef buffer, NSArray* args) {
  // CMSampleBufferCreateCopy creates a shallow copy: the underlying image data is retained, not duplicated
  CMSampleBufferRef bufferCopy = NULL;
  OSStatus status = CMSampleBufferCreateCopy(kCFAllocatorDefault, buffer, &bufferCopy);
  if (status != noErr) {
    return nil;
  }

  dispatch_queue_t queue = dispatch_get_global_queue(DISPATCH_QUEUE_PRIORITY_DEFAULT, 0ul);
  dispatch_async(queue, ^{
    NSString* serverURL = (NSString*)args[0];
    // `WebRTC` is a placeholder for your own upload logic
    [WebRTC uploadFrame:bufferCopy toServer:serverURL];
    // we own the copy, so release it once we're done with it
    CFRelease(bufferCopy);
  });

  return nil;
}
```

### Async Frame Processors with Event Emitters

You might also run some very complex AI algorithms which are not fast enough to smoothly run at **30 FPS** (**33ms**). To avoid dropping frames, you can create a custom "frame queue" which processes the copied frames and calls back into JS via a React event emitter. For this you'll have to create a Native Module that handles the asynchronous native -> JS communication; see ["Sending events to JavaScript" (Android)](https://reactnative.dev/docs/native-modules-android#sending-events-to-javascript) and ["Sending events to JavaScript" (iOS)](https://reactnative.dev/docs/native-modules-ios#sending-events-to-javascript).

From the user's perspective, this might look like this:

```tsx
function App() {
  const frameProcessor = useFrameProcessor((frame) => {
    'worklet'
    SomeAI.process(frame) // does not block frame processor, runs async
  }, [])

  useEffect(() => {
    SomeAI.addListener((results) => {
      console.log(`AI results: ${results}`)
    })
  }, [])

  return (
    <Camera frameProcessor={frameProcessor} {...cameraProps} />
  )
}
```

This way you can handle queueing up the frames yourself and asynchronously call back into JS at some later point in time using event emitters.
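The queueing logic itself can be reasoned about independently of the native code. The following TypeScript sketch models such a "frame queue": the frame processor side only enqueues (so it returns immediately), a worker drains the queue at its own pace, and results are delivered to listeners, standing in for the event emitter. Everything here (`FrameQueue`, `drainOne`, the optional capacity) is a hypothetical illustration; in a real plugin the queue and worker would live in native code. With the default capacity (`Infinity`) no frame is dropped, at the cost of unbounded memory growth if the worker can't keep up; a finite capacity drops the oldest pending frames instead.

```typescript
type Listener<R> = (result: R) => void;

/** Hypothetical frame queue that decouples frame arrival from frame processing. */
class FrameQueue<F, R> {
  private pending: F[] = [];
  private listeners: Listener<R>[] = [];
  private readonly process: (frame: F) => R;
  private readonly capacity: number;

  constructor(process: (frame: F) => R, capacity: number = Infinity) {
    this.process = process;
    this.capacity = capacity;
  }

  /** Called from the frame processor: never waits for processing to happen. */
  push(frame: F): void {
    if (this.pending.length >= this.capacity) {
      this.pending.shift(); // queue is full: drop the oldest pending frame
    }
    this.pending.push(frame);
  }

  addListener(listener: Listener<R>): void {
    this.listeners.push(listener);
  }

  /** Called by the worker: processes one pending frame, returns false if idle. */
  drainOne(): boolean {
    if (this.pending.length === 0) return false;
    const frame = this.pending.shift() as F;
    const result = this.process(frame);
    this.listeners.forEach((listener) => listener(result));
    return true;
  }
}

// Usage: five frames arrive before the worker runs; with capacity 3 only the newest 3 are kept
const queue = new FrameQueue<number, string>((frame) => `processed frame ${frame}`, 3);
const results: string[] = [];
queue.addListener((result) => results.push(result));
[1, 2, 3, 4, 5].forEach((frame) => queue.push(frame));
while (queue.drainOne()) {
  // keep draining until the queue is empty
}
console.log(results.join(', ')); // processed frame 3, processed frame 4, processed frame 5
```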

### Benchmarking Frame Processor Plugins

Your Frame Processor Plugins have to be fast. VisionCamera automatically detects slow Frame Processors and outputs relevant information in the native console (Xcode: **Debug Area**, Android Studio: **Logcat**):

<div align="center">
  <img src={useBaseUrl("img/slow-log.png")} width="80%" />
</div>
<div align="center">
  <img src={useBaseUrl("img/slow-log-2.png")} width="80%" />
</div>
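While iterating on a plugin, it can also help to time a piece of platform-independent logic against the frame budget yourself, for example in a unit test. The helper below is a hypothetical sketch; it is not how VisionCamera detects slow Frame Processors internally, and it relies on the global `performance.now()` timer available in modern Node.js and browsers.

```typescript
interface Measurement<T> {
  result: T;
  durationMs: number;
  exceedsBudget: boolean;
}

/**
 * Hypothetical profiling helper: runs `fn` once and reports whether it
 * finished within the time budget of a single frame at `fps`.
 */
function measureAgainstFrameBudget<T>(fn: () => T, fps: number): Measurement<T> {
  const start = performance.now();
  const result = fn();
  const durationMs = performance.now() - start;
  return { result, durationMs, exceedsBudget: durationMs > 1000 / fps };
}

// Usage: check a (trivial) computation against the ~33 ms budget of 30 FPS
const measurement = measureAgainstFrameBudget(() => [3, 1, 2].sort((a, b) => a - b), 30);
console.log(measurement.result, `${measurement.durationMs.toFixed(3)} ms`, measurement.exceedsBudget);
```

A single run is noisy; averaging over many runs gives a more reliable picture.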

<br />

#### 🚀 Create your first Frame Processor Plugin for [iOS](frame-processors-plugins-ios) or [Android](frame-processors-plugins-android)!

docs/docs/guides/FRAME_PROCESSOR_CREATE_FINAL.mdx

@@ -0,0 +1,74 @@

---
id: frame-processors-plugins-final
title: Finish creating your Frame Processor Plugin
sidebar_label: Finish creating your Frame Processor Plugin
---

## Make your Frame Processor Plugin available to JS

To make the Frame Processor Plugin available to the Frame Processor Worklet Runtime, create the following wrapper function in JS/TS:

```ts
import type { Frame } from 'react-native-vision-camera';

// Global function injected by the native plugin; declared here so TypeScript knows about it
declare function __scanQRCodes(frame: Frame): string[];

/**
 * Scans QR codes.
 */
export function scanQRCodes(frame: Frame): string[] {
  'worklet';
  return __scanQRCodes(frame);
}
```

Users will then have to add the Frame Processor Plugin's name to their `babel.config.js`.

For the QR Code Scanner, this will be `__scanQRCodes`:

```js {6}
module.exports = {
  plugins: [
    [
      'react-native-reanimated/plugin',
      {
        globals: ['__scanQRCodes'],
      },
    ],
  ],
};
```

:::note
You have to restart the Metro bundler for changes in the `babel.config.js` file to take effect.
:::

### Test it!

Simply call the wrapper Worklet in your Frame Processor:

```tsx {4}
function App() {
  const frameProcessor = useFrameProcessor((frame) => {
    'worklet'
    const qrCodes = scanQRCodes(frame)
    _log(`QR Codes in Frame: ${qrCodes}`)
  }, [])

  return (
    <Camera frameProcessor={frameProcessor} {...cameraProps} />
  )
}
```

### Next Steps

If you want to distribute your Frame Processor Plugin, simply use npm.

1. Create a blank Native Module using [bob](https://github.com/callstack/react-native-builder-bob) or [create-react-native-module](https://github.com/brodybits/create-react-native-module)
2. Name it `vision-camera-plugin-xxxxx` where `xxxxx` is the name of your plugin
3. Remove all the source files for the Example Native Module
4. Implement the Frame Processor Plugin in the iOS, Android and JS/TS codebases using the guides above
5. Add installation instructions to let users know they have to add your Frame Processor Plugin to their `babel.config.js` configuration.
6. Publish the plugin to npm. Users will only have to install the plugin using `npm i vision-camera-plugin-xxxxx` and add it to their `babel.config.js` file.
7. [Add the plugin to the **official VisionCamera plugin list**](https://github.com/cuvent/react-native-vision-camera/edit/main/docs/docs/guides/FRAME_PROCESSOR_PLUGIN_LIST.mdx) for more visibility

<br />

#### 🚀 Next section: [Browse Community Plugins](frame-processor-plugin-list)

docs/docs/guides/FRAME_PROCESSOR_CREATE_PLUGIN_ANDROID.mdx

@@ -0,0 +1,17 @@

---
id: frame-processors-plugins-android
title: Creating Frame Processor Plugins for Android
sidebar_label: Creating Frame Processor Plugins (Android)
---

import useBaseUrl from '@docusaurus/useBaseUrl';
import Tabs from '@theme/Tabs';
import TabItem from '@theme/TabItem';

:::warning
Frame Processors are not yet available for Android. See #?? for progress updates.
:::

<br />

#### 🚀 Next section: [Finish creating your Frame Processor Plugin](frame-processors-plugins-final) (or [add iOS support to your Frame Processor Plugin](frame-processors-plugins-ios))

docs/docs/guides/FRAME_PROCESSOR_CREATE_PLUGIN_IOS.mdx

@@ -0,0 +1,102 @@

---
id: frame-processors-plugins-ios
title: Creating Frame Processor Plugins for iOS
sidebar_label: Creating Frame Processor Plugins (iOS)
---

import Tabs from '@theme/Tabs';
import TabItem from '@theme/TabItem';

## Creating a Frame Processor

The Frame Processor Plugin API is built to be as extensible as possible, which allows you to create custom Frame Processor Plugins.
In this guide we will create a custom QR Code Scanner Plugin which can be used from JS.

iOS Frame Processor Plugins can be written in either **Objective-C** or **Swift**.

<Tabs
  defaultValue="objc"
  values={[
    {label: 'Objective-C', value: 'objc'},
    {label: 'Swift', value: 'swift'}
  ]}>
<TabItem value="objc">

1. Open your Project in Xcode
2. Create an Objective-C source file; for the QR Code Plugin this will be called `QRCodeFrameProcessorPlugin.m`.
3. Add the following code:

```objc {9}
#import <VisionCamera/FrameProcessorPlugin.h>

@interface QRCodeFrameProcessorPlugin : NSObject
@end

@implementation QRCodeFrameProcessorPlugin

static inline id scanQRCodes(CMSampleBufferRef buffer, NSArray* args) {
  // code goes here
  return @[];
}

VISION_EXPORT_FRAME_PROCESSOR(scanQRCodes)

@end
```

4. **Implement your Frame Processing.** See the [QR Code Plugin (Objective-C)](https://github.com/cuvent/react-native-vision-camera/blob/main/example/ios/Frame%20Processor%20Plugins/QR%20Code%20Plugin%20%28Objective%2DC%29) for reference.

|

||||

|

||||

:::note

|

||||

The JS function name will be equal to the Objective-C function name you choose (with a `__` prefix). Make sure it is unique across other Frame Processor Plugins, and doesn't shadow a JS runtime variable.

|

||||

:::

|

||||

|

||||

</TabItem>

|

||||

<TabItem value="swift">

|

||||

|

||||

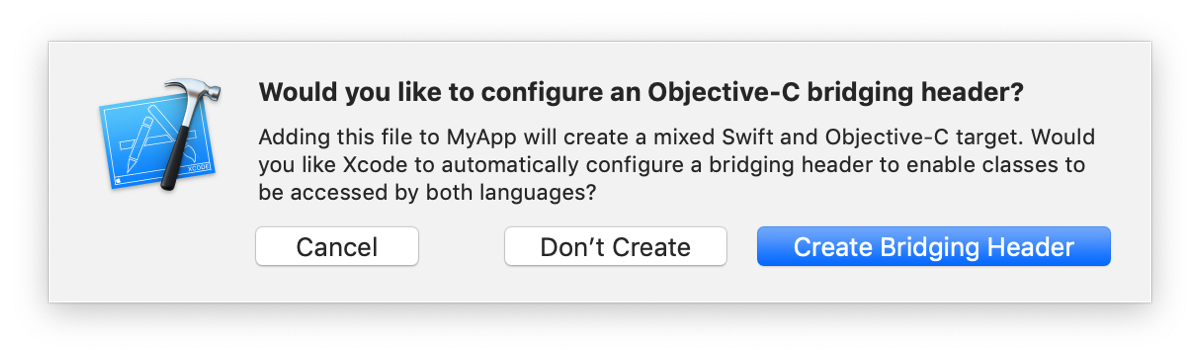

1. Create a Swift file, for the QR Code Plugin this will be `QRCodeFrameProcessorPlugin.swift`. If Xcode asks you to create a Bridging Header, press **create**.

|

||||

|

||||

|

||||

|

||||

2. Inside the newly created Bridging Header, add the following code:

|

||||

|

||||

```objc

|

||||

#import <VisionCamera/FrameProcessorPlugin.h>

|

||||

```

|

||||

|

||||

3. Create an Objective-C source file with the same name as the Swift file, for the QR Code Plugin this will be `QRCodeFrameProcessorPlugin.m`. Add the following code:

|

||||

|

||||

```objc

|

||||

#import <VisionCamera/FrameProcessorPlugin.h>

|

||||

|

||||

@interface VISION_EXPORT_SWIFT_FRAME_PROCESSOR(scanQRCodes, QRCodeFrameProcessorPlugin)

|

||||

@end

|

||||

```

|

||||

|

||||

:::note

|

||||

The first parameter in the Macro specifies the JS function name. Make sure it is unique across other Frame Processors, and doesn't shadow a JS runtime variable (such as `Map`, `Number`, ...)

|

||||

:::

|

||||

|

||||

4. In the Swift file, add the following code:

|

||||

|

||||

```swift {6}

|

||||

@objc(QRCodeFrameProcessorPlugin)

|

||||

public class QRCodeFrameProcessorPlugin: NSObject, FrameProcessorPluginBase {

|

||||

|

||||

@objc

|

||||

public static func callback(_: CMSampleBuffer!, withArgs _: [Any]!) -> Any! {

|

||||

// code goes here

|

||||

return []

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

5. **Implement your frame processing.** See [QR Code Plugin (Swift)](https://github.com/cuvent/react-native-vision-camera/blob/main/example/ios/Frame%20Processor%20Plugins/QR%20Code%20Plugin%20%28Swift%29) for reference.

|

||||

|

||||

|

||||

</TabItem>

|

||||

</Tabs>

|

||||
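Both notes above describe the same constraint on the JS function name. The TypeScript sketch below is a hypothetical pre-publish check, not part of VisionCamera: `pluginJsName` and `findNamingProblems` are illustrative names, and it assumes the JS name is always the native name with a `__` prefix, as the Objective-C note states.

```typescript
// Hypothetical helpers, not part of VisionCamera.
// Assumption: the JS name is the native function name with a "__" prefix.
function pluginJsName(nativeName: string): string {
  return `__${nativeName}`
}

// Reports JS names used by more than one plugin, and JS names that
// already exist as globals in the given environment.
function findNamingProblems(
  nativeNames: readonly string[],
  existingGlobals: readonly string[],
): string[] {
  const globals = new Set(existingGlobals)
  const seen = new Set<string>()
  const problems: string[] = []
  for (const nativeName of nativeNames) {
    const jsName = pluginJsName(nativeName)
    if (seen.has(jsName)) problems.push(`duplicate: ${jsName}`)
    if (globals.has(jsName)) problems.push(`shadows global: ${jsName}`)
    seen.add(jsName)
  }
  return problems
}

// Usage: check two plugin names against the globals of the current environment.
const problems = findNamingProblems(
  ['scanQRCodes', 'labelImage'],
  Object.getOwnPropertyNames(globalThis),
)
console.log(problems)
```

Passing `Object.getOwnPropertyNames(globalThis)` only inspects the environment the script runs in (for example Node.js), not the Frame Processor runtime on the device, so treat an empty result as a sanity check rather than a guarantee.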

<br />

#### 🚀 Next section: [Finish creating your Frame Processor Plugin](frame-processors-plugins-final) (or [add Android support to your Frame Processor Plugin](frame-processors-plugins-android))

docs/docs/guides/FRAME_PROCESSOR_PLUGIN_LIST.mdx

@@ -0,0 +1,51 @@

---
id: frame-processor-plugin-list
title: Community Plugins
sidebar_label: Community Plugins
---

These are VisionCamera Frame Processor Plugins created by the community.

## Installing a Plugin

1. Install using npm:

```sh
npm i vision-camera-xxxxx
```

2. Add the plugin's native function name to the `globals` of the Reanimated plugin in your `babel.config.js` (see the plugin's README for instructions).

:::note
You have to restart the Metro bundler for changes in the `babel.config.js` file to take effect.
:::

## Plugin List

* [mrousavy/**vision-camera-qrcode-scanner**](https://github.com/mrousavy/vision-camera-qrcode-scanner): A fast QR code scanner using the Apple Vision API.

<!-- Add your Frame Processor Plugin here! -->

<br />
<br />
<br />
<p align="center">
<b>
<a href="https://github.com/cuvent/react-native-vision-camera/edit/main/docs/docs/guides/FRAME_PROCESSOR_PLUGIN_LIST.mdx">Click here</a> to add your Frame Processor Plugin to this list!
</b>
</p>
<br />
<br />
<br />

#### 🚀 Next section: [Zooming with Reanimated](animated)

@@ -13,13 +13,15 @@ import useBaseUrl from '@docusaurus/useBaseUrl';

## Installing the library

Install react-native-vision-camera through npm:
Install [**react-native-vision-camera**](https://www.npmjs.com/package/react-native-vision-camera) through npm:

```sh
```terminal
npm i react-native-vision-camera
npx pod-install
```

VisionCamera requires **iOS 11 or higher**, and **Android-SDK version 21 or higher**. See [Troubleshooting](/docs/guides/troubleshooting) if you're having installation issues.

## Updating manifests

To use a Camera or Microphone you must first specify that your app requires camera and microphone permissions.

@@ -35,24 +37,6 @@ Open your project's `Info.plist` and add the following lines inside the outermos
<string>$(PRODUCT_NAME) needs access to your Microphone to record videos with audio.</string>
```

#### Compatibility

VisionCamera is written in Swift. If your project is written in Objective-C, you have to create a Bridging Header first:

1. Open your project (`.xcworkspace`) in Xcode
2. Press **File** > **New** > **File** (<kbd>⌘</kbd>+<kbd>N</kbd>)
3. Select **Swift File** and press **Next**
4. Choose whatever name you want, e.g. `File.swift`, and press **Create**
5. Press **Create Bridging Header** when prompted.

Also, make sure you're using Swift 5.2 or above:

1. Open `project.pbxproj` in a text editor
2. If the `LIBRARY_SEARCH_PATHS` value is set, make sure there is no explicit reference to Swift-5.0. If there is, remove it. See [this StackOverflow answer](https://stackoverflow.com/a/66281846/1123156).
3. If the `SWIFT_VERSION` value is set, make sure it is set to `5.2` or higher.

> See [Troubleshooting](/docs/guides/troubleshooting) if you're having problems

### Android

Open your project's `AndroidManifest.xml` and add the following lines inside the `<manifest>` tag:

@@ -62,22 +46,7 @@ Open your project's `AndroidManifest.xml` and add the following lines inside the
<uses-permission android:name="android.permission.RECORD_AUDIO" />
```

#### Compatibility

VisionCamera requires a minimum Android SDK version of **21 or higher**, and a target SDK version of **30 or higher**. See [the example app's `build.gradle`](https://github.com/cuvent/react-native-vision-camera/blob/main/example/android/build.gradle#L6-L9) for reference.

Open your project's `build.gradle`, and set the following values:

```groovy
buildToolsVersion = "30.0.0"
minSdkVersion = 21
compileSdkVersion = 30
targetSdkVersion = 30
```

> See [Troubleshooting](/docs/guides/troubleshooting) if you're having problems

## Permissions
## Getting/Requesting Permissions

VisionCamera also provides functions to easily get and request Microphone and Camera permissions.

@@ -92,7 +61,7 @@ const microphonePermission = await Camera.getMicrophonePermissionStatus()

A permission status can have the following values:

* `authorized`: Your app is authorized to use said permission. Continue with mounting the `<Camera>` view.
* `authorized`: Your app is authorized to use said permission. Continue with [**using the `<Camera>` view**](#use-the-camera-view).
* `not-determined`: Your app has not yet requested permission from the user. [Continue by calling the **request** functions.](#requesting-permissions)
* `denied`: Your app has already requested permission from the user, but the user explicitly denied it. You cannot use the **request** functions again, but you can use the [`Linking` API](https://reactnative.dev/docs/linking#opensettings) to redirect the user to the Settings App where they can manually grant the permission.
* `restricted`: (iOS only) Your app cannot use the Camera or Microphone because that functionality has been restricted, possibly due to active restrictions such as parental controls being in place.
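Each status in the list above leads to exactly one next step. The TypeScript sketch below encodes that mapping as an exhaustive `switch`; the `PermissionStatus` union is copied from the list rather than imported from VisionCamera, and `NextStep` and `nextStepFor` are hypothetical names for illustration.

```typescript
// The four status strings listed above (local copy to keep the sketch self-contained).
type PermissionStatus = 'authorized' | 'not-determined' | 'denied' | 'restricted'

// Illustrative names for what the app should do next.
type NextStep = 'mount-camera' | 'call-request-function' | 'open-settings' | 'feature-unavailable'

// Exhaustive switch: adding a status to the union without handling it
// here makes the function fail to type-check.
function nextStepFor(status: PermissionStatus): NextStep {
  switch (status) {
    case 'authorized':
      return 'mount-camera'
    case 'not-determined':
      return 'call-request-function'
    case 'denied':
      return 'open-settings'
    case 'restricted':
      return 'feature-unavailable'
  }
}
```

In an app, the `open-settings` branch would typically call React Native's `Linking.openSettings()`, as the `denied` entry suggests.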

@@ -101,8 +70,8 @@ A permission status can have the following values:

Use the **request** functions to prompt the user to give your app permission to use the Camera or Microphone.

:::caution
Note: You can only use **request** functions if the current permission status is `not-determined`.
:::note
The **request** functions only have effect if the current permission status is `not-determined`.
:::

```ts

@@ -112,8 +81,29 @@ const newMicrophonePermission = await Camera.requestMicrophonePermission()

The permission request status can have the following values:

* `authorized`: Your app is authorized to use said permission. Continue with mounting the `<Camera>` view.
* `authorized`: Your app is authorized to use said permission. Continue with [**using the `<Camera>` view**](#use-the-camera-view).
* `denied`: The user explicitly denied the permission request alert. You cannot use the **request** functions again, but you can use the [`Linking` API](https://reactnative.dev/docs/linking#opensettings) to redirect the user to the Settings App where they can manually grant the permission.
* `restricted`: (iOS only) Your app cannot use the Camera or Microphone because that functionality has been restricted, possibly due to active restrictions such as parental controls being in place.

## Use the `<Camera>` view

If your app has permission to use the Camera and Microphone, simply use the [`useCameraDevices(...)`](/docs/api/modules/hooks_usecameradevices) hook to get a Camera device (see [Camera Devices](/docs/guides/devices)) and mount the `<Camera>` view:

```tsx
import { StyleSheet } from 'react-native'
import { Camera, useCameraDevices } from 'react-native-vision-camera'

function App() {
  const devices = useCameraDevices()
  const device = devices.back

  // `LoadingView` stands for any placeholder component of your own
  if (device == null) return <LoadingView />
  return (
    <Camera
      style={StyleSheet.absoluteFill}
      device={device}
      isActive={true}
    />
  )
}
```

<br />

@@ -59,7 +59,7 @@ Before opening an issue, make sure you try the following:
distributionUrl=https\://services.gradle.org/distributions/gradle-6.5-all.zip
```
5. If you're having runtime issues, check the logs in Android Studio/Logcat to find out more. In Android Studio, go to **View** > **Tool Windows** > **Logcat** (<kbd>⌘</kbd>+<kbd>6</kbd>) or run `adb logcat` in Terminal.
6. If a camera device is not being returned by [`Camera.getAvailableCameraDevices()`](/docs/api/classes/camera.camera-1#getavailablecameradevices), make sure it meets the minimum requirements - that is minimum supported hardware level of `LIMITED` and above. See [this section in the Android docs](https://developer.android.com/reference/android/hardware/camera2/CameraDevice#reprocessing) for more information.
6. If a camera device is not being returned by [`Camera.getAvailableCameraDevices()`](/docs/api/classes/camera.camera-1#getavailablecameradevices), make sure it meets the minimum requirements - that is a minimum supported hardware level of `LIMITED` and above. See [this section in the Android docs](https://developer.android.com/reference/android/hardware/camera2/CameraDevice#reprocessing) for more information.
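"`LIMITED` and above" relies on a capability ordering of camera2 hardware levels (`LEGACY` < `LIMITED` < `FULL` < `LEVEL_3`) that is not the numeric order of Android's `INFO_SUPPORTED_HARDWARE_LEVEL_*` constants: `LIMITED` is 0 while `LEGACY` is 2. The TypeScript sketch below makes that ordering explicit. The lowercase level names are an assumption for illustration and may not match how VisionCamera reports the level; `EXTERNAL` is left out because it does not fit neatly into this ordering.

```typescript
// Camera2 hardware levels from least to most capable.
// Lowercase names are illustrative; EXTERNAL is omitted on purpose.
const LEVELS_IN_ORDER = ['legacy', 'limited', 'full', 'level-3'] as const
type HardwareLevel = (typeof LEVELS_IN_ORDER)[number]

// True if `level` is at least `minimum` (defaults to the LIMITED requirement above).
function meetsMinimumLevel(level: HardwareLevel, minimum: HardwareLevel = 'limited'): boolean {
  return LEVELS_IN_ORDER.indexOf(level) >= LEVELS_IN_ORDER.indexOf(minimum)
}
```

Comparing positions in an explicit list, rather than the platform's integer constants, avoids the trap of treating `LEGACY` (2) as more capable than `FULL` (1).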

## Issues

@@ -13,6 +13,9 @@ module.exports = {
apiKey: 'ab7f44570bb62d0e07c0f7d92312ed1a',
indexName: 'react-native-vision-camera',
},
prism: {
additionalLanguages: ['swift'],
},
navbar: {
title: 'VisionCamera',
logo: {

@@ -12,8 +12,8 @@
"clear": "docusaurus clear"
},
"dependencies": {
"@docusaurus/core": "2.0.0-alpha.72",
"@docusaurus/preset-classic": "2.0.0-alpha.72",
"@docusaurus/core": "2.0.0-alpha.75",
"@docusaurus/preset-classic": "2.0.0-alpha.75",
"@mdx-js/react": "^1.6.21",
"clsx": "^1.1.1",
"react": "^17.0.1",
@@ -32,9 +32,9 @@
]
},
"devDependencies": {
"docusaurus-plugin-typedoc": "^0.12.0",
"typedoc": "^0.20.34",
"typedoc-plugin-markdown": "^3.6.0",
"docusaurus-plugin-typedoc": "^0.13.0",
"typedoc": "^0.20.36",
"typedoc-plugin-markdown": "^3.7.2",
"typescript": "^4.2.3"
}
}

@@ -1,6 +1,26 @@
module.exports = {
visionSidebar: {
Guides: ['guides/setup', 'guides/devices', 'guides/formats', 'guides/capturing', 'guides/frame-processors', 'guides/animated', 'guides/errors', 'guides/troubleshooting'],
Guides: [
'guides/setup',
'guides/devices',
'guides/formats',
'guides/capturing',
'guides/frame-processors',
{
type: 'category',
label: 'Creating Frame Processor Plugins',
items: [
'guides/frame-processors-plugins-overview',
'guides/frame-processors-plugins-ios',
'guides/frame-processors-plugins-android',
'guides/frame-processors-plugins-final',
'guides/frame-processor-plugin-list'
]
},
'guides/animated',
'guides/errors',
'guides/troubleshooting',
],
API: require('./typedoc-sidebar.js'),
},
};

docs/static/img/appclip.png

Binary file not shown (30 KiB).

docs/static/img/frame-processors.gif

Binary file not shown (8.6 MiB).

docs/static/img/slow-log-2.png

Binary file not shown (20 KiB).

docs/static/img/slow-log.png

Binary file not shown (29 KiB).

docs/yarn.lock

File diff suppressed because it is too large