feat: Frame Processors for Android (#196)

* Create android gradle build setup * Fix `prefab` config * Add `pickFirst **/*.so` to example build.gradle * fix REA path * cache gradle builds * Update validate-android.yml * Create Native Proxy * Copy REA header * implement ctor * Rename CameraViewModule -> FrameProcessorRuntimeManager * init FrameProcessorRuntimeManager * fix name * Update FrameProcessorRuntimeManager.h * format * Create AndroidErrorHandler.h * Initialize runtime and install JSI funcs * Update FrameProcessorRuntimeManager.cpp * Update CameraViewModule.kt * Make CameraView hybrid C++ class to find view & set frame processor * Update FrameProcessorRuntimeManager.cpp * pass function by rvalue * pass by const && * extract hermes and JSC REA * pass `FOR_HERMES` * correctly prepare JSC and Hermes * Update CMakeLists.txt * add missing hermes include * clean up imports * Create JImageProxy.h * pass ImageProxy to JNI as `jobject` * try use `JImageProxy` C++ wrapper type * Use `local_ref<JImageProxy>` * Create `JImageProxyHostObject` for JSI interop * debug call to frame processor * Unset frame processor * Fix CameraView native part not being registered * close image * use `jobject` instead of `JImageProxy` for now :( * fix hermes build error * Set enable FP callback * fix JNI call * Update CameraView.cpp * Get Format * Create plugin abstract * Make `FrameProcessorPlugin` a hybrid object * Register plugin CXX * Call `registerPlugin` * Catch * remove JSI * Create sample QR code plugin * register plugins * Fix missing JNI binding * Add `mHybridData` * prefix name with two underscores (`__`) * Update CameraPage.tsx * wrap `ImageProxy` in host object * Use `jobject` for HO box * Update JImageProxy.h * reinterpret jobject * Try using `JImageProxy` instead of `jobject` * Update JImageProxy.h * get bytes per row and plane count * Update CameraView.cpp * Return base * add some docs and JNI JSI conversion * indent * Convert JSI value to JNI jobject * using namespace facebook * Try using class * Use plain old 
Object[] * Try convert JNI -> JSI * fix decl * fix bool init * Correctly link folly * Update CMakeLists.txt * Convert Map to Object * Use folly for Map and Array * Return `alias_ref<jobject>` instead of raw `jobject` * fix JNI <-> JSI conversion * Update JSIJNIConversion.cpp * Log parameters * fix params index offset * add more test cases * Update FRAME_PROCESSORS_CREATE_OVERVIEW.mdx * fix types * Rename to example plugin * remove support for hashmap * Try use HashMap iterable fbjni binding * try using JReadableArray/JReadableMap * Fix list return values * Update JSIJNIConversion.cpp * Update JSIJNIConversion.cpp * (iOS) Rename ObjC QR Code Plugin to Example Plugin * Rename Swift plugin QR -> Example * Update ExamplePluginSwift.swift * Fix Map/Dictionary logging format * Update ExampleFrameProcessorPlugin.m * Reconfigure session if frame processor changed * Handle use-cases via `maxUseCasesCount` * Don't crash app on `configureSession` error * Document "use-cases" * Update DEVICES.mdx * fix merge * Make `const &` * iOS: Automatically enable `video` if a `frameProcessor` is set * Update CameraView.cpp * fix docs * Automatically fallback to snapshot capture if `supportsParallelVideoProcessing` is false. 
* Fix lookup * Update CameraView.kt * Implement `frameProcessorFps` * Finalize Frame Processor Plugin Hybrid * Update CameraViewModule.kt * Support `flash` on `takeSnapshot()` * Update docs * Add docs * Update CameraPage.tsx * Attribute NonNull * remove unused imports * Add Android docs for Frame Processors * Make JNI HashMap <-> JSI Object conversion faster directly access `toHashMap` instead of going through java * add todo * Always run `prepareJSC` and `prepareHermes` * switch jsc and hermes * Specify ndkVersion `21.4.7075529` * Update gradle.properties * Update gradle.properties * Create .aar * Correctly prepare android package * Update package.json * Update package.json * remove `prefab` build feature * split * Add docs for registering the FP plugin * Add step for dep * Update CaptureButton.tsx * Move to `reanimated-headers/` * Exclude reanimated-headers from cpplint * disable `build/include_order` rule * cpplint fixes * perf: Make `JSIJNIConversion` a `namespace` instead of `class` * Ignore runtime/references for `convert` funcs * Build Android .aar in CI * Run android build script only on `prepack` * Update package.json * Update package.json * Update build-android-npm-package.sh * Move to `yarn build` * Also install node_modules in example step * Update validate-android.yml * sort imports * fix torch * Run ImageAnalysis on `FrameProcessorThread` * Update Errors.kt * Add clean android script * Upgrade reanimated to 2.3.0-alpha.1 * Revert "Upgrade reanimated to 2.3.0-alpha.1" This reverts commit c1d3bed5e03728d0b5e335a359524ff4f56f5035. * ⚠️ TEMP FIX: hotfix reanimated build.gradle * Update CameraView+TakeSnapshot.kt * ⚠️ TEMP FIX: Disable ktlint action for now * Update clean.sh * Set max heap size to 4g * rebuild lockfiles * Update Podfile.lock * rename * Build lib .aar before example/


@@ -73,7 +73,7 @@ While taking snapshots is faster than taking photos, the resulting image has way

:::

:::note
The `takeSnapshot` function also works with `photo={false}`. For this reason, devices that do not support photo and video capture at the same time (see ["The `supportsPhotoAndVideoCapture` prop"](/docs/guides/devices/#the-supportsphotoandvideocapture-prop)) can use `video={true}` and fall back to snapshot capture for photos. (See ["Taking Snapshots"](/docs/guides/capturing#taking-snapshots))
The `takeSnapshot` function also works with `photo={false}`. For this reason, VisionCamera will automatically fall back to snapshot capture if you try to use more use-cases than the Camera natively supports. (See ["The `supportsParallelVideoProcessing` prop"](/docs/guides/devices#the-supportsparallelvideoprocessing-prop))
:::

## Recording Videos

@@ -98,13 +98,25 @@ function App() {

}
```

### The `supportsPhotoAndVideoCapture` prop
### The `supportsParallelVideoProcessing` prop

Camera devices provide the [`supportsPhotoAndVideoCapture` property](/docs/api/interfaces/cameradevice.cameradevice-1#supportsphotoandvideocapture) which determines whether the device supports enabling photo- and video-capture at the same time.
While every iOS device supports this feature, there are some older Android devices which only allow enabling one of the two: either photo capture or video capture. (Those are `LEGACY` devices, see [this table](https://developer.android.com/reference/android/hardware/camera2/CameraDevice#regular-capture).)
Camera devices provide the [`supportsParallelVideoProcessing` property](/docs/api/interfaces/cameradevice.cameradevice-1#supportsparallelvideoprocessing) which determines whether the device supports using Video Recordings (`video={true}`) and Frame Processors (`frameProcessor={...}`) at the same time.

If this property is `false`, you can only enable `video` or add a `frameProcessor`, but not both.

* On iOS this value is always `true`.
* On newer Android devices this value is always `true`.
* On older Android devices this value is `false` if the Camera's hardware level is `LEGACY` or `LIMITED`, `true` otherwise. (See [`INFO_SUPPORTED_HARDWARE_LEVEL`](https://developer.android.com/reference/android/hardware/camera2/CameraCharacteristics#INFO_SUPPORTED_HARDWARE_LEVEL) or [the tables at "Regular capture"](https://developer.android.com/reference/android/hardware/camera2/CameraDevice#regular-capture))
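
The hardware-level rule in the list above can be sketched in a few lines of plain Java. This is only an illustration under stated assumptions: `HardwareLevel` is a hypothetical stand-in for the Camera2 hardware levels (it is not the Android constant set), and `canEnable` is an invented helper, not part of VisionCamera.

```java
import java.util.EnumSet;
import java.util.Set;

final class ParallelVideoProcessing {
    // Hardware levels for which the rule above reports `false`.
    private static final Set<HardwareLevel> UNSUPPORTED =
        EnumSet.of(HardwareLevel.LEGACY, HardwareLevel.LIMITED);

    /** Mirrors the rule: `false` for LEGACY or LIMITED, `true` otherwise. */
    static boolean supportsParallelVideoProcessing(HardwareLevel level) {
        return !UNSUPPORTED.contains(level);
    }

    /** Hypothetical helper: can `video` and `frameProcessor` be combined as requested? */
    static boolean canEnable(HardwareLevel level, boolean video, boolean frameProcessor) {
        boolean wantsBoth = video && frameProcessor;
        return !wantsBoth || supportsParallelVideoProcessing(level);
    }

    public static void main(String[] args) {
        for (HardwareLevel level : HardwareLevel.values()) {
            System.out.println(level + " -> " + supportsParallelVideoProcessing(level));
        }
    }
}

/** Hypothetical stand-in for the Camera2 hardware levels named in the text. */
enum HardwareLevel { LEGACY, LIMITED, FULL, LEVEL_3 }
```

A single video feature is always allowed in this sketch; only the combination of `video` and `frameProcessor` depends on the hardware level.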

#### Examples

* An app that only supports **taking photos** works on every Camera device because this limitation only affects video processing.
* An app that supports **taking photos** and **videos** works on every Camera device because only a single video processing feature is used (`video`).
* An app that only uses **Frame Processors** (no taking photos or videos) works on every Camera device because it only uses a single video processing feature (`frameProcessor`).
* An app that uses **Frame Processors** and supports **taking photos** and **videos** only works on Camera devices where `supportsParallelVideoProcessing` is `true`. (iPhones and newer Android phones)

:::note
If `supportsPhotoAndVideoCapture` is `false` but you still need photo- and video-capture at the same time, you can fall back to _snapshot capture_ (see [**"Taking Snapshots"**](/docs/guides/capturing#taking-snapshots)) instead.
The limitation also affects the `photo` feature, but VisionCamera will automatically fall back to **snapshot capture** if you try to use multiple features (`photo` + `video` + `frameProcessor`) that are not natively supported together. (See ["Taking Snapshots"](/docs/guides/capturing#taking-snapshots))
:::
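
The automatic fallback described in the note can be pictured as a small decision function. The sketch below is an assumption-laden illustration, not VisionCamera's actual logic: `CapturePlan`, `PhotoMode`, `choosePhotoMode` and the `maxNativeUseCases` parameter are all hypothetical names, and the real library may count use-cases differently.

```java
final class CapturePlan {
    /** How photos would be taken for a given configuration (hypothetical). */
    enum PhotoMode { DISABLED, NATIVE_PHOTO, SNAPSHOT }

    /**
     * Picks a photo mode. If the requested features exceed what the device
     * supports natively, photos fall back to snapshot capture.
     */
    static PhotoMode choosePhotoMode(boolean photo, boolean video, boolean frameProcessor,
                                     int maxNativeUseCases) {
        if (!photo) {
            return PhotoMode.DISABLED;
        }
        // Count the requested features: photo itself plus each video feature.
        int requested = 1 + (video ? 1 : 0) + (frameProcessor ? 1 : 0);
        return requested <= maxNativeUseCases ? PhotoMode.NATIVE_PHOTO : PhotoMode.SNAPSHOT;
    }
}
```

For example, with `maxNativeUseCases` set to 2, requesting `photo`, `video` and a `frameProcessor` together selects `SNAPSHOT`, while `photo` alone selects `NATIVE_PHOTO`.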

<br />

@@ -34,11 +34,11 @@ The Frame Processor Plugin Registry API automatically manages type conversion fr

| JS Type              | Objective-C Type              | Java Type                  |
|----------------------|-------------------------------|----------------------------|
| `number`             | `NSNumber*` (double)          | `double`                   |
| `boolean`            | `NSNumber*` (boolean)         | `boolean`                  |
| `number`             | `NSNumber*` (double)          | `Double`                   |
| `boolean`            | `NSNumber*` (boolean)         | `Boolean`                  |
| `string`             | `NSString*`                   | `String`                   |
| `[]`                 | `NSArray*`                    | `Array<Object>`            |
| `{}`                 | `NSDictionary*`               | `HashMap<Object>`          |
| `[]`                 | `NSArray*`                    | `ReadableNativeArray`      |
| `{}`                 | `NSDictionary*`               | `ReadableNativeMap`        |
| `undefined` / `null` | `nil`                         | `null`                     |
| `(any, any) => void` | [`RCTResponseSenderBlock`][4] | `(Object, Object) -> void` |
| [`Frame`][1] | [`Frame*`][2] | [`ImageProxy`][3] |
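
Because plugin parameters arrive as an `Object[]`, a plugin has to check the runtime type of each entry. The following is a minimal, stdlib-only sketch of that dispatch for the boxed types in the table; `ParamInspector` and `describe` are hypothetical names, and `ReadableNativeArray` / `ReadableNativeMap` are left out so the example runs without React Native on the classpath.

```java
final class ParamInspector {
    /** Describes one entry of the `Object[] params` array passed to a plugin callback. */
    static String describe(Object param) {
        if (param == null) {
            return "null"; // JS `undefined` / `null`
        }
        if (param instanceof Double) {
            return "number: " + param; // JS numbers arrive boxed as Double
        }
        if (param instanceof Boolean) {
            return "boolean: " + param;
        }
        if (param instanceof String) {
            return "string: " + param;
        }
        // Arrays, maps, callbacks and frames would be handled here.
        return "other: " + param.getClass().getSimpleName();
    }

    public static void main(String[] args) {
        Object[] params = { 2.5, Boolean.TRUE, "qr", null };
        for (Object param : params) {
            System.out.println(describe(param));
        }
    }
}
```

Note that an `Integer` would land in the `other` branch here, which is why the table lists `Double` rather than a generic number type.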


@@ -63,11 +63,12 @@ If you want to distribute your Frame Processor Plugin, simply use npm.

1. Create a blank Native Module using [bob](https://github.com/callstack/react-native-builder-bob) or [create-react-native-module](https://github.com/brodybits/create-react-native-module)
2. Name it `vision-camera-plugin-xxxxx` where `xxxxx` is the name of your plugin
3. Remove all the source files for the Example Native Module
4. Implement the Frame Processor Plugin in the iOS, Android and JS/TS Codebase using the guides above
5. Add installation instructions to let users know they have to add your frame processor in the `babel.config.js` configuration.
6. Publish the plugin to npm. Users will only have to install the plugin using `npm i vision-camera-plugin-xxxxx` and add it to their `babel.config.js` file.
7. [Add the plugin to the **official VisionCamera plugin list**](https://github.com/mrousavy/react-native-vision-camera/edit/main/docs/docs/guides/FRAME_PROCESSOR_PLUGIN_LIST.mdx) for more visibility
3. Remove the generated template code from the Example Native Module
4. Add VisionCamera to `peerDependencies`: `"react-native-vision-camera": ">= 2"`
5. Implement the Frame Processor Plugin in the iOS, Android and JS/TS Codebase using the guides above
6. Add installation instructions to the `README.md` to let users know they have to add your frame processor in the `babel.config.js` configuration.
7. Publish the plugin to npm. Users will only have to install the plugin using `npm i vision-camera-plugin-xxxxx` and add it to their `babel.config.js` file.
8. [Add the plugin to the **official VisionCamera plugin list**](https://github.com/mrousavy/react-native-vision-camera/edit/main/docs/docs/guides/FRAME_PROCESSOR_PLUGIN_LIST.mdx) for more visibility

<br />

@@ -4,14 +4,126 @@ title: Creating Frame Processor Plugins for Android

sidebar_label: Creating Frame Processor Plugins (Android)
---

import useBaseUrl from '@docusaurus/useBaseUrl';
import Tabs from '@theme/Tabs';
import TabItem from '@theme/TabItem';

:::warning
Frame Processors are not yet available for Android.
## Creating a Frame Processor

The Frame Processor Plugin API is built to be as extensible as possible, which allows you to create custom Frame Processor Plugins.
In this guide we will create a custom QR Code Scanner Plugin which can be used from JS.

Android Frame Processor Plugins can be written in either **Java**, **Kotlin** or **C++ (JNI)**.

<Tabs
  defaultValue="java"
  values={[
    {label: 'Java', value: 'java'},
    {label: 'Kotlin', value: 'kotlin'}
  ]}>
<TabItem value="java">

1. Open your Project in Android Studio
2. Create a Java source file, for the QR Code Plugin this will be called `QRCodeFrameProcessorPlugin.java`.
3. Add the following code:

```java {8}
import androidx.camera.core.ImageProxy;
import com.mrousavy.camera.frameprocessor.FrameProcessorPlugin;

public class QRCodeFrameProcessorPlugin extends FrameProcessorPlugin {

  @Override
  public Object callback(ImageProxy image, Object[] params) {
    // code goes here
    return null;
  }

  QRCodeFrameProcessorPlugin() {
    super("scanQRCodes");
  }
}
```

:::note
The JS function name will be equal to the name you pass to the `super(...)` call (with a `__` prefix). Make sure it is unique across other Frame Processor Plugins.
:::

4. **Implement your Frame Processing.** See the [Example Plugin (Java)](https://github.com/mrousavy/react-native-vision-camera/blob/main/example/android/app/src/main/java/com/mrousavy/camera/example/ExampleFrameProcessorPlugin.java) for reference.
5. Create a new Java file which registers the Frame Processor Plugin in a React Package, for the QR Code Scanner plugin this file will be called `QRCodeFrameProcessorPluginPackage.java`:

```java {14}
import com.facebook.react.ReactPackage;
import com.facebook.react.bridge.NativeModule;
import com.facebook.react.bridge.ReactApplicationContext;
import com.facebook.react.uimanager.ViewManager;
import com.mrousavy.camera.frameprocessor.FrameProcessorPlugin;
import java.util.Collections;
import java.util.List;
import javax.annotation.Nonnull;

public class QRCodeFrameProcessorPluginPackage implements ReactPackage {
  @Nonnull
  @Override
  public List<NativeModule> createNativeModules(@Nonnull ReactApplicationContext reactContext) {
    FrameProcessorPlugin.register(new QRCodeFrameProcessorPlugin());
    return Collections.emptyList();
  }

  @Nonnull
  @Override
  public List<ViewManager> createViewManagers(@Nonnull ReactApplicationContext reactContext) {
    return Collections.emptyList();
  }
}
```

</TabItem>
<TabItem value="kotlin">

1. Open your Project in Android Studio
2. Create a Kotlin source file, for the QR Code Plugin this will be called `QRCodeFrameProcessorPlugin.kt`.
3. Add the following code:

```kotlin {7}
import androidx.camera.core.ImageProxy
import com.mrousavy.camera.frameprocessor.FrameProcessorPlugin

class ExampleFrameProcessorPluginKotlin: FrameProcessorPlugin("scanQRCodes") {

  override fun callback(image: ImageProxy, params: Array<Any>): Any? {
    // code goes here
    return null
  }
}
```

:::note
The JS function name will be equal to the name you pass to the `FrameProcessorPlugin(...)` call (with a `__` prefix). Make sure it is unique across other Frame Processor Plugins.
:::

4. **Implement your Frame Processing.** See the [Example Plugin (Java)](https://github.com/mrousavy/react-native-vision-camera/blob/main/example/android/app/src/main/java/com/mrousavy/camera/example/ExampleFrameProcessorPlugin.java) for reference.
5. Create a new Kotlin file which registers the Frame Processor Plugin in a React Package, for the QR Code Scanner plugin this file will be called `QRCodeFrameProcessorPluginPackage.kt`:

```kotlin {9}
import com.facebook.react.ReactPackage
import com.facebook.react.bridge.NativeModule
import com.facebook.react.bridge.ReactApplicationContext
import com.facebook.react.uimanager.ViewManager
import com.mrousavy.camera.frameprocessor.FrameProcessorPlugin

class QRCodeFrameProcessorPluginPackage : ReactPackage {
  override fun createNativeModules(reactContext: ReactApplicationContext): List<NativeModule> {
    FrameProcessorPlugin.register(ExampleFrameProcessorPluginKotlin())
    return emptyList()
  }

  override fun createViewManagers(reactContext: ReactApplicationContext): List<ViewManager<*, *>> {
    return emptyList()
  }
}
```

</TabItem>
</Tabs>
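
Both tabs note that the JS function name is the plugin name with a `__` prefix and that it must be unique. The sketch below illustrates why uniqueness matters, using a plain Java map. It is not VisionCamera's registry: `PluginNameRegistry`, `jsFunctionName` and the duplicate check are hypothetical, and the source does not state how `FrameProcessorPlugin.register` reacts to duplicate names.

```java
import java.util.LinkedHashMap;
import java.util.Map;

final class PluginNameRegistry {
    // Registered plugins, keyed by the JS-visible function name.
    private final Map<String, Object> pluginsByJsName = new LinkedHashMap<>();

    /** Derives the JS function name from the plugin name, e.g. "scanQRCodes" -> "__scanQRCodes". */
    static String jsFunctionName(String pluginName) {
        return "__" + pluginName;
    }

    /** Registers a plugin and returns its JS function name; rejects duplicate names. */
    String register(String pluginName, Object plugin) {
        String jsName = jsFunctionName(pluginName);
        if (pluginsByJsName.containsKey(jsName)) {
            throw new IllegalArgumentException(
                "Duplicate Frame Processor Plugin name: " + pluginName);
        }
        pluginsByJsName.put(jsName, plugin);
        return jsName;
    }
}
```

With this sketch, registering `"scanQRCodes"` yields the JS name `__scanQRCodes`, and a second plugin using the same name is rejected instead of silently replacing the first.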

<br />

#### 🚀 Next section: [Finish creating your Frame Processor Plugin](frame-processors-plugins-final) (or [add iOS support to your Frame Processor Plugin](frame-processors-plugins-ios))

@@ -48,7 +48,7 @@ VISION_EXPORT_FRAME_PROCESSOR(scanQRCodes)

@end
```

4. **Implement your Frame Processing.** See the [QR Code Plugin (Objective-C)](https://github.com/mrousavy/react-native-vision-camera/blob/main/example/ios/Frame%20Processor%20Plugins/QR%20Code%20Plugin%20%28Objective%2DC%29) for reference.
4. **Implement your Frame Processing.** See the [Example Plugin (Objective-C)](https://github.com/mrousavy/react-native-vision-camera/blob/main/example/ios/Frame%20Processor%20Plugins/Example%20Plugin%20%28Objective%2DC%29) for reference.

:::note
The JS function name will be equal to the Objective-C function name you choose (with a `__` prefix). Make sure it is unique across other Frame Processor Plugins.

@@ -57,18 +57,19 @@ The JS function name will be equal to the Objective-C function name you choose (

|

||||

</TabItem>

|

||||

<TabItem value="swift">

|

||||

|

||||

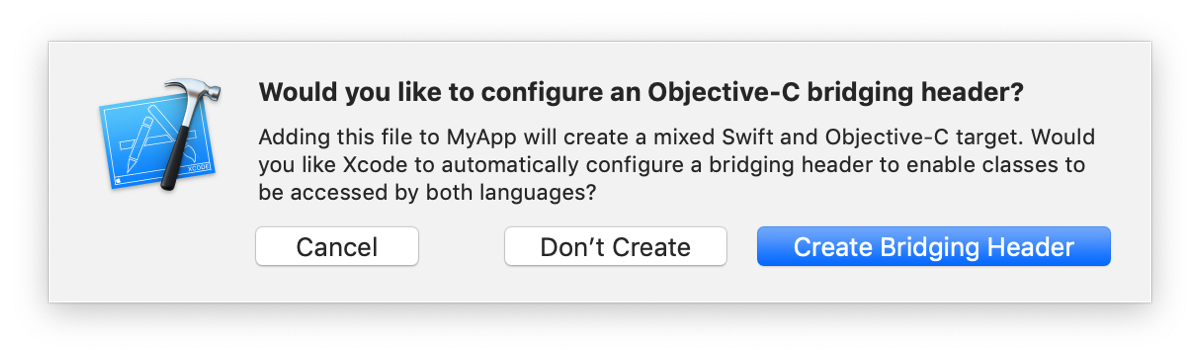

1. Create a Swift file, for the QR Code Plugin this will be `QRCodeFrameProcessorPlugin.swift`. If Xcode asks you to create a Bridging Header, press **create**.

|

||||

1. Open your Project in Xcode

|

||||

2. Create a Swift file, for the QR Code Plugin this will be `QRCodeFrameProcessorPlugin.swift`. If Xcode asks you to create a Bridging Header, press **create**.

|

||||

|

||||

|

||||

|

||||

2. Inside the newly created Bridging Header, add the following code:

|

||||

3. Inside the newly created Bridging Header, add the following code:

|

||||

|

||||

```objc

|

||||

#import <VisionCamera/FrameProcessorPlugin.h>

|

||||

#import <VisionCamera/Frame.h>

|

||||

```

|

||||

|

||||

3. Create an Objective-C source file with the same name as the Swift file, for the QR Code Plugin this will be `QRCodeFrameProcessorPlugin.m`. Add the following code:

|

||||

4. Create an Objective-C source file with the same name as the Swift file, for the QR Code Plugin this will be `QRCodeFrameProcessorPlugin.m`. Add the following code:

|

||||

|

||||

```objc

|

||||

#import <VisionCamera/FrameProcessorPlugin.h>

|

||||

@@ -81,7 +82,7 @@ The JS function name will be equal to the Objective-C function name you choose (

The first parameter in the Macro specifies the JS function name. Make sure it is unique across other Frame Processors.
:::

4. In the Swift file, add the following code:
5. In the Swift file, add the following code:

```swift {8}
@objc(QRCodeFrameProcessorPlugin)

@@ -97,7 +98,7 @@ public class QRCodeFrameProcessorPlugin: NSObject, FrameProcessorPluginBase {

}
```

5. **Implement your frame processing.** See [QR Code Plugin (Swift)](https://github.com/mrousavy/react-native-vision-camera/blob/main/example/ios/Frame%20Processor%20Plugins/QR%20Code%20Plugin%20%28Swift%29) for reference.
6. **Implement your frame processing.** See [Example Plugin (Swift)](https://github.com/mrousavy/react-native-vision-camera/blob/main/example/ios/Frame%20Processor%20Plugins/Example%20Plugin%20%28Swift%29) for reference.

</TabItem>

@@ -14,7 +14,7 @@ module.exports = {

    indexName: 'react-native-vision-camera',
  },
  prism: {
    additionalLanguages: ['swift'],
    additionalLanguages: ['swift', 'java', 'kotlin'],
  },
  navbar: {
    title: 'VisionCamera',
